Roles and Competencies


Revision as of 19:53, 3 June 2015

Enabling individuals to perform systems engineering (SE) requires understanding SE roles, knowledge, skills, abilities, and attitudes (KSAA), tasks, and competencies. Within a business or enterprise, SE responsibilities are allocated to individuals through the definition of SE roles. For an individual, a set of KSAAs enables the fulfillment of the competencies needed to perform tasks associated with the assigned SE role. SE competencies reflect the individual’s KSAAs, which are developed through education, training, and on-the-job experience. Traditionally, SE competencies build on innate personal qualities and have been developed primarily through experience. Recently, education and training have taken on a greater role in the development of SE competencies.

Relationship of SE Competencies and KSAAs

There are many ways to define competency. One definition, based on that of the US Office of Personnel Management, is “an observable, measurable pattern of skills, knowledge, abilities, behaviors and other characteristics that an individual needs to perform work roles or occupational functions successfully” (Whitcomb, Delgado, Khan, Alexander, White, Grambow, Walter, 2015). Another definition is “a measure of an individual’s ability in terms of their knowledge, skills, and behavior to perform a given role” (Holt and Perry, 2011). Competency can be thought of as the ability to use the appropriate KSAAs to successfully complete specific job-related tasks (Whitcomb, Khan, White, 2014). Competencies align with the tasks that are expected to be accomplished for the job position, whereas KSAAs belong to the individual. When filling a position, an organization identifies a specific set of competencies associated with tasks that are directly related to the job. The person who fills the position possesses the KSAAs that enable them to perform the desired tasks at an acceptable level of competency.

The KSAAs are obtained and developed from a combination of several sources of learning, including education, training, and on-the-job experience. By defining the KSAAs in terms of a standard taxonomy, they can be used as learning objectives for competency development (Whitcomb, Khan, White, 2014). Bloom’s Taxonomy for the cognitive and affective domains provides this structure (Bloom 1956, Krathwohl 2002). The cognitive domain includes knowledge, critical thinking, and the development of intellectual skills, while the affective domain describes growth in awareness, attitude, emotion, changes in interest, judgment, and the development of appreciation (Bloom, 1956). Cognitive and affective processes within Bloom’s taxonomic classification schema refer to levels of observable actions that indicate that learning is occurring. Bloom’s Taxonomy for the cognitive and affective domains defines terms as categories of levels that can be used for consistently defining KSAA statements (Krathwohl 2002):

Cognitive Domain

- Remember
- Understand
- Apply
- Analyze
- Evaluate
- Create

Affective Domain

- Receive
- Respond
- Value
- Organize
- Characterize
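As a minimal sketch of how these ordered levels could be used to write KSAA statements consistently, the two domains can be encoded as ordered enumerations. The class names, the `ksaa_statement` and `meets` helpers, and the statement phrasing are all hypothetical. The `meets` check also assumes, as a simplification, that levels within one domain are cumulative, so that a higher level satisfies a lower requirement.

```python
from enum import IntEnum


class Cognitive(IntEnum):
    """Revised Bloom cognitive levels (Krathwohl 2002), lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


class Affective(IntEnum):
    """Affective-domain levels, lowest to highest."""
    RECEIVE = 1
    RESPOND = 2
    VALUE = 3
    ORGANIZE = 4
    CHARACTERIZE = 5


def ksaa_statement(level: IntEnum, subject: str) -> str:
    """Build a KSAA statement led by the level's verb (hypothetical phrasing)."""
    return f"{level.name.capitalize()} {subject}"


def meets(assessed: IntEnum, required: IntEnum) -> bool:
    """True if the assessed level is at or above the required level.

    Simplifying assumption: levels within a domain are cumulative. Levels from
    different domains are never compared, even though IntEnum would allow it.
    """
    if type(assessed) is not type(required):
        raise TypeError("cognitive and affective levels are not comparable")
    return assessed >= required


# Example: a learning objective and a simple level check
objective = ksaa_statement(Cognitive.APPLY, "trade-study methods to select a preferred architecture")
print(objective)  # Apply trade-study methods to select a preferred architecture
print(meets(Cognitive.EVALUATE, Cognitive.APPLY))  # True
```

Keeping the two domains as separate types makes it an explicit error to compare, for example, an affective "Value" requirement against a cognitive "Apply" assessment.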

Both cognitive and affective domains should be included in the development of systems engineering competency models, because aspects such as teaming and leadership depend on abilities related to affective processes. Using the affective domain in the specification of KSAAs is also important because every piece of information we process in our brains goes through our affective (emotional) processors before it is integrated by our cognitive processors (Whitcomb and Whitcomb, 2013).

SE Competency Models

Contexts in which individual competency models are typically used include:

- Recruitment and Selection: Competencies define categories for behavioral event interviewing (BEI), increasing the validity and reliability of selection and promotion decisions.

- Human Resources Planning and Placements: Competencies are used to identify individuals to fill specific positions and/or identify gaps in key competency areas.

- Education, Training, and Development: Explicit competency models let employees know which competencies are valued within their organization. Curriculum and interventions can be designed around desired competencies.
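For the human resources planning use, identifying gaps amounts to comparing a position's required proficiency in each competency with an individual's assessed proficiency. The sketch below assumes a hypothetical 0-4 integer scale and a hypothetical `competency_gaps` helper; the competency names are borrowed from the ENG model listed later in this article purely for illustration.

```python
from typing import Dict

# Hypothetical 0-4 proficiency scale (0 = none ... 4 = expert); real competency
# models each define their own scale and level descriptions.
Profile = Dict[str, int]


def competency_gaps(required: Profile, assessed: Profile) -> Dict[str, int]:
    """Return how many levels short the individual is for each required competency.

    Competencies the individual has not been assessed on are treated as level 0.
    Competencies that meet or exceed the requirement are omitted from the result.
    """
    gaps: Dict[str, int] = {}
    for competency, needed in required.items():
        have = assessed.get(competency, 0)
        if have < needed:
            gaps[competency] = needed - have
    return gaps


# Example: hypothetical position requirements versus one candidate's assessment
position = {"Requirements Analysis": 3, "Risk Management": 2, "Communication": 3}
candidate = {"Requirements Analysis": 4, "Communication": 2}
print(competency_gaps(position, candidate))  # {'Risk Management': 2, 'Communication': 1}
```

The same gap list could feed the education and training use: each entry suggests a candidate learning objective.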

Commonality and Domain Expertise

No single individual is expected to be proficient in all the competencies found in any model. The organization as a whole must possess the required proficiencies in sufficient quantity to support business needs. Organizational capability is not a direct summation of the competency of the individuals in the organization, since organizational dynamics play an important role that can either raise or lower overall proficiency and performance. The articles Enabling Teams and Enabling Businesses and Enterprises explore this further.
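A minimal sketch of checking whether an organization has "sufficient quantity" of a proficiency follows, assuming each competency need is expressed as a hypothetical (minimum level, headcount) pair on a hypothetical 0-4 scale. Consistent with the point above, this only counts qualified people; it does not model the organizational dynamics that make capability more than a sum of individuals.

```python
from typing import Dict, List, Tuple

Profile = Dict[str, int]  # competency name -> proficiency level (hypothetical 0-4 scale)


def coverage_shortfalls(
    staff: List[Profile],
    needs: Dict[str, Tuple[int, int]],
) -> Dict[str, int]:
    """Count how many more qualified people each competency needs.

    `needs` maps a competency to (minimum level, number of people required).
    This is only a headcount check: it says nothing about how well those
    people work together, which also shapes organizational capability.
    """
    shortfalls: Dict[str, int] = {}
    for competency, (min_level, headcount) in needs.items():
        qualified = sum(1 for person in staff if person.get(competency, 0) >= min_level)
        if qualified < headcount:
            shortfalls[competency] = headcount - qualified
    return shortfalls


# Example: no one person covers everything, but the group is checked as a whole
staff = [
    {"Architecture Design": 3, "Risk Management": 2},
    {"Architecture Design": 4},
    {"Risk Management": 3},
]
needs = {"Architecture Design": (3, 2), "Risk Management": (3, 2)}
print(coverage_shortfalls(staff, needs))  # {'Risk Management': 1}
```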

SE competency models generally agree that systems thinking, taking a holistic view of the system that includes the full life cycle, and specific knowledge of both technical and managerial SE methods are required to be a fully capable systems engineer. It is also generally accepted that an accomplished systems engineer will have expertise in at least one domain of practice. General models, while recognizing the need for domain knowledge, typically do not define the competencies or skills related to a specific domain. Most organizations tailor such models to include specific domain KSAAs and other peculiarities of their organization.

INCOSE Certification

Certification is a formal process whereby a community of knowledgeable, experienced, and skilled representatives of an organization, such as the International Council on Systems Engineering (INCOSE), provides formal recognition that a person has achieved competency in specific areas, as demonstrated by education, experience, and knowledge (INCOSE nd). The most popular credential in SE is offered by INCOSE; it requires an individual to pass an examination confirming knowledge of the field, to have experience in SE, and to obtain recommendations from people who know the individual's capabilities and experience. Like all such credentials, the INCOSE certificate does not guarantee the competence or suitability of an individual for a particular role, but it is a positive indicator of an individual's ability to perform. The needs of a particular workforce often require additional KSAAs of any given systems engineer, but certification provides an acknowledged common baseline.

Domain- and Industry-specific Models

No community consensus exists on a specific competency model or small set of related competency models. Many SE competency models have been developed for specific contexts or for specific organizations, and these models are useful within these contexts.

Among the domain- and industry-specific models is the Aerospace Industry Competency Model (ETA 2010), developed by the Employment and Training Administration (ETA) in collaboration with the Aerospace Industries Association (AIA) and the National Defense Industrial Association (NDIA), and available online. This model is designed to evolve along with changing skill requirements in the aerospace industry. The ETA makes numerous competency models for other industries available online (ETA 2010). The NASA Competency Management System (CMS) Dictionary is predominantly a dictionary of domain-specific expertise required by the US National Aeronautics and Space Administration (NASA) to accomplish its space exploration mission (NASA 2009).

Users of models should be aware of the development method and context for the competency model they plan to use, since the primary competencies for one organization might differ from those for another organization. These models often are tailored to the specific business characteristics, including the specific product and service domain in which the organization operates. Each model typically includes a set of applicable competencies along with a scale for assessing the level of proficiency.

SE Competency Models — Examples

Though many organizations have proprietary SE competency models, several published SE competency models can be used for reference, including:

- The International Council on Systems Engineering (INCOSE) UK Advisory Board model (Cowper et al. 2005; INCOSE 2010);

- The ENG model (DAU 2013);

- The Academy of Program/Project & Engineering Leadership (APPEL) model (Menrad and Lawson 2008); and

- The MITRE model (MITRE 2007).

Other models and lists of traits include: Hall (1962), Frank (2000; 2002; 2006), Kasser et al. (2009), Squires et al. (2011), and Armstrong et al. (2011). Ferris (2010) provides a summary and evaluation of the existing frameworks for personnel evaluation and for defining SE education. Squires et al. (2010) provide a competency-based approach that can be used by universities or companies to compare their current state of SE capability development against a government-industry defined set of needs. SE competencies can also be inferred from standards such as ISO-15288 (ISO/IEC/IEEE 15288 2015) and from sources such as the INCOSE Systems Engineering Handbook (INCOSE 2012), the INCOSE Systems Engineering Certification Program, and CMMI criteria (SEI 2007). Table 1 lists information about several SE competency models. Each model was developed for a unique purpose within a specific context and validated in a particular way. It is important to understand the unique environment surrounding each competency model to determine its applicability in any new setting.

| Competency Model (Individual Level) | Date | Author(s) | Purpose | Development Method | Reference(s) |
|---|---|---|---|---|---|
| INCOSE UK WG | 2010 | INCOSE | Identify the competencies required to conduct good systems engineering | INCOSE Working Group | (INCOSE 2010), (INCOSE UK 2009) |
| MITRE Competency Model | 2007 | MITRE | Define new systems engineering curricula and assess personnel and organizational capabilities | Focus groups as described in (Trudeau 2005) | (Trudeau 2005), (MITRE 2007) |
| ENG Competency Model | 2013 | DAU | Identify competencies required for the DoD acquisition engineering professional | DoD and DAU internal development | (DAU 2013) |
| NASA APPEL Competency Model | 2009 | NASA | Improve project management and systems engineering at NASA | NASA internal development | (NASA 2009) |
| CMMI for Development | 2007 | SEI | Process improvement maturity model for the development of products and services | SEI internal development | (SEI 2007), (SEI 2004) |

Three SE competency models are described below as illustrative examples.

INCOSE SE Competency Model

The INCOSE model was developed by a working group in the United Kingdom (Cowper et al. 2005). As Table 2 shows, the INCOSE framework is divided into three theme areas (systems thinking, holistic life cycle view, and systems management), each containing a number of competencies. The INCOSE UK model was later adopted by the broader INCOSE organization (INCOSE 2010b).

<html>

| Theme | Competency |
|---|---|
| Systems Thinking | System Concepts |
| | Super System Capability Issues |
| | Enterprise and Technology Environment |
| Holistic Lifecycle View | Determining and Managing Stakeholder Requirements |
| | Systems Design: Architectural Design |
| | Systems Design: Concept Generation |
| | Systems Design: Design For... |
| | Systems Design: Functional Analysis |
| | Systems Design: Interface Management |
| | Systems Design: Maintain Design Integrity |
| | Systems Design: Modeling and Simulation |
| | Systems Design: Select Preferred Solution |
| | Systems Design: System Robustness |
| | Systems Integration & Verification |
| | Validation |
| | Transition to Operation |
| Systems Engineering Management | Concurrent Engineering |
| | Enterprise Integration |
| | Integration of Specialties |
| | Lifecycle Process Definition |

</html>

United States DoD Engineering Competency Model

The model for US Department of Defense (DoD) acquisition engineering professionals (ENG) includes 41 competency areas, as shown in Table 3 (DAU 2013). Each is grouped according to a “Unit of Competence”, as listed in the left-hand column. For this model, the four top-level groupings are analytical, technical management, professional, and business acumen. The life cycle view used in the INCOSE model is evident in the ENG analytical grouping, but is not cited explicitly. Technical management is equivalent to INCOSE SE management, with additional competencies such as software engineering and acquisition. Selected general professional skills have been added to meet the need for strong leadership among acquisition engineering professionals. The business acumen competencies were added so that these professionals can support contract development and oversight activities and engage with the defense industry.

<html>

| Unit of Competence | Competency |
|---|---|
| Analytical (11) | 1. Mission-Level Assessment |
| | 2. Stakeholder Requirements Definition |
| | 3. Requirements Analysis |
| | 4. Architecture Design |
| | 5. Implementation |
| | 6. Integration |
| | 7. Verification |
| | 8. Validation |
| | 9. Transition |
| | 10. Design Considerations |
| | 11. Tools and Techniques |
| Technical Management (10) | 12. Decision Analysis |
| | 13. Technical Planning |
| | 14. Technical Assessment |
| | 15. Configuration Management |
| | 16. Requirements Management |
| | 17. Risk Management |
| | 18. Data Management |
| | 19. Interface Management |
| | 20. Software Engineering |
| | 23. Acquisition |
| Professional (10) | 24. Problem Solving |
| | 25. Strategic Thinking |
| | 26. Professional Ethics |
| | 27. Leading High-Performance Teams |
| | 28. Communication |
| | 29. Coaching and Mentoring |
| | 30. Managing Stakeholders |
| | 31. Mission and Results Focus |
| | 32. Personal Effectiveness/Peer Interaction |
| | 33. Sound Judgment |
| Business Acumen (10) | 34. Industry Landscape |
| | 35. Organization |
| | 36. Cost, Pricing, and Rates |
| | 37. Cost Estimating |
| | 38. Financial Reporting and Metrics |
| | 39. Business Strategy |
| | 40. Capture Planning and Proposal Process |
| | 41. Supplier Management |
| | 42. Industry Motivation, Incentives, Rewards |
| | 43. Negotiations |

</html>

NASA SE Competency Model

The US National Aeronautics and Space Administration (NASA) APPEL website provides a competency model that covers both project management and systems engineering (APPEL 2009). The model has three parts: one unique to project management, one unique to systems engineering, and a third common to both disciplines. Table 4 shows the SE aspects of the model. The project management items include project conceptualization, resource management, project implementation, project closeout, and program control and evaluation. The common competency areas are NASA internal and external environments, human capital and management, security, safety and mission assurance, professional and leadership development, and knowledge management. This 2010 model is adapted from earlier versions. Squires et al. (2010, 246-260) offer a method that can be used to analyze the degree to which an organization’s SE capabilities meet government-industry defined SE needs.

<html>

| Competency Area | Competency |
|---|---|
| System Design | SE 1.1 - Stakeholder Expectation Definition |
| | SE 1.2 - Technical Requirements Definition |
| | SE 1.3 - Logical Decomposition |
| | SE 1.4 - Design Solution Definition |
| Product Realization | SE 2.1 - Product Implementation |
| | SE 2.2 - Product Integration |
| | SE 2.3 - Product Verification |
| | SE 2.4 - Product Validation |
| | SE 2.5 - Product Transition |
| Technical Management | SE 3.1 - Technical Planning |
| | SE 3.2 - Requirements Management |
| | SE 3.3 - Interface Management |
| | SE 3.4 - Technical Risk Management |
| | SE 3.5 - Configuration Management |
| | SE 3.6 - Technical Data Management |
| | SE 3.7 - Technical Assessment |
| | SE 3.8 - Technical Decision Analysis |

</html>

Relationship of SE Competencies to Other Competencies

SE is one of many engineering disciplines. A competent systems engineer must possess KSAAs that are unique to SE, as well as many other KSAAs that are shared with other engineering and non-engineering disciplines.

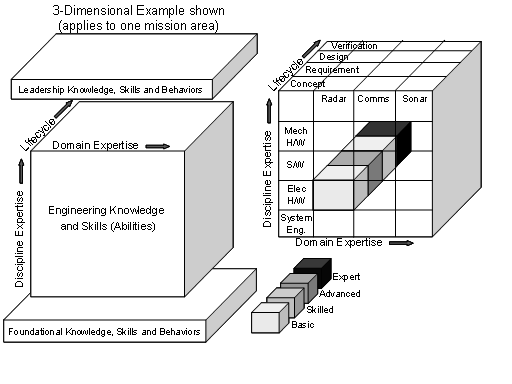

One approach to a complete engineering competency model framework uses multiple dimensions, each with unique KSAAs that are independent of the other dimensions (Wells 2008). The number of dimensions depends on the engineering organization and the range of work it performs. The concept of creating independent axes for the competencies was presented in Jansma and Derro (2007), using technical knowledge (domain/discipline specific), personal behaviors, and process as the three axes. An approach that uses process as a dimension is presented in Widmann et al. (2000), where the competencies are mapped to process and process maturity models. For a large engineering organization that creates complex systems solutions, there are typically four dimensions:

- Discipline (e.g., electrical, mechanical, chemical, systems, optical);

- Life Cycle (e.g., requirements, design, testing);

- Domain (e.g., aerospace, ships, health, transportation); and

- Mission (e.g., air defense, naval warfare, rail transportation, border control, environmental protection).

These four dimensions build on the concept defined in Jansma and Derro (2007) and Widmann et al. (2000) by separating discipline from domain and by adding mission and life cycle dimensions. In many organizations, the mission is consistent across the organization, making this dimension unnecessary. Figure 1 shows a three-dimensional example in which the organization works in only one mission area, so that dimension has been eliminated from the framework.

The discipline, domain, and life cycle dimensions are included in this example, and some of the first-level areas in each of these dimensions are shown. At this level, an organization or an individual can indicate which areas are included in their existing or desired competencies. The sub-cubes are filled in by indicating the level of proficiency that exists or is required. For this example, blank indicates that the area is not applicable, and colors (shades of gray) indicate the levels of expertise. The example shows a radar electrical designer who is an expert at hardware verification, is skilled at writing radar electrical requirements, and has some knowledge of electrical hardware concepts and detailed design. The radar electrical designer would also assess his or her proficiency in the other areas, the foundation layer, and the leadership layer to provide a complete assessment.
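The three-dimensional framework can be represented as a sparse map from (discipline, domain, life-cycle area) to a proficiency level, where a missing entry plays the role of a blank, not-applicable sub-cube. This is a minimal sketch: the `CompetencyCube` class, the three level labels standing in for the shades of gray, and the exact area names are hypothetical, not taken from Wells (2008) or Figure 1.

```python
from typing import Dict, Optional, Tuple

# Hypothetical proficiency labels standing in for the shades of gray in Figure 1.
LEVELS = ("knowledge", "skilled", "expert")

# Key for one sub-cube: (discipline, domain, life-cycle area).
# A mission dimension could be added by extending this to a 4-tuple.
Cell = Tuple[str, str, str]


class CompetencyCube:
    """Sparse 3-D competency framework; absent cells are 'not applicable' (blank)."""

    def __init__(self) -> None:
        self._cells: Dict[Cell, str] = {}

    def set(self, discipline: str, domain: str, life_cycle: str, level: str) -> None:
        if level not in LEVELS:
            raise ValueError(f"unknown proficiency level: {level!r}")
        self._cells[(discipline, domain, life_cycle)] = level

    def get(self, discipline: str, domain: str, life_cycle: str) -> Optional[str]:
        # None corresponds to a blank sub-cube
        return self._cells.get((discipline, domain, life_cycle))

    def for_discipline(self, discipline: str) -> Dict[Tuple[str, str], str]:
        """All filled cells for one discipline, keyed by (domain, life-cycle area)."""
        return {
            (domain, life_cycle): level
            for (disc, domain, life_cycle), level in self._cells.items()
            if disc == discipline
        }


# Example: the radar electrical designer described above
designer = CompetencyCube()
designer.set("electrical", "radar", "hardware verification", "expert")
designer.set("electrical", "radar", "requirements", "skilled")
designer.set("electrical", "radar", "hardware concepts", "knowledge")
designer.set("electrical", "radar", "detailed design", "knowledge")

print(designer.get("electrical", "radar", "hardware verification"))  # expert
print(designer.get("mechanical", "radar", "requirements"))  # None
```

A sparse dictionary suits this framework because most sub-cubes are blank for any one individual; adding or dropping a dimension, such as mission, only changes the key shape.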

References

Works Cited

Armstrong, J.R., D. Henry, K. Kepcher, and A. Pyster. 2011. "Competencies Required for Successful Acquisition of Large, Highly Complex Systems of Systems." Paper presented at 21st Annual International Council on Systems Engineering (INCOSE) International Symposium (IS), 20-23 June 2011, Denver, CO, USA.

Cowper, D., S. Bennison, R. Allen-Shalless, K. Barnwell, S. Brown, A. El Fatatry, J. Hooper, S. Hudson, L. Oliver, and A. Smith. 2005. Systems Engineering Core Competencies Framework. Folkestone, UK: International Council on Systems Engineering (INCOSE) UK Advisory Board (UKAB).

DAU. 2013. ENG Competency Model, 12 June 2013 version. in Defense Acquisition University (DAU)/U.S. Department of Defense Database Online. Accessed on June 3, 2015. Available at https://dap.dau.mil/workforce/Documents/Comp/ENG%20Competency%20Model%2020130612_Final.pdf.

ETA. 2010. Career One Stop: Competency Model Clearing House: Aerospace Competency Model. in Employment and Training Administration (ETA)/U.S. Department of Labor. Washington, DC. Accessed on September 15, 2011. Available at http://www.careeronestop.org//competencymodel/pyramid.aspx?AEO=Y.

Ferris, T.L.J. 2010. "Comparison of Systems Engineering Competency Frameworks." Paper presented at the 4th Asia-Pacific Conference on Systems Engineering (APCOSE), Systems Engineering: Collaboration for Intelligent Systems, 3-6 October 2010, Keelung, Taiwan.

Frank, M. 2000. "Engineering Systems Thinking and Systems Thinking." Systems Engineering. 3(3): 163-168.

Frank, M. 2002. "Characteristics of Engineering Systems Thinking – A 3-D Approach for Curriculum Content." IEEE Transactions on Systems, Man, and Cybernetics. 32(3) Part C: 203-214.

Frank, M. 2006. "Knowledge, Abilities, Cognitive Characteristics and Behavioral Competences of Engineers with High Capacity for Engineering Systems Thinking (CEST)." Systems Engineering. 9(2): 91-103. (Republished in IEEE Engineering Management Review. 34(3)(2006):48-61).

Hall, A.D. 1962. A Methodology for Systems Engineering. Princeton, NJ, USA: D. Van Nostrand Company Inc.

INCOSE. 2011. "History of INCOSE Certification Program." Accessed April 13, 2015 at http://www.incose.org/certification/CertHistory

INCOSE. 2012. Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities, version 3.2.2. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2003-002-03.2.2.

INCOSE. 2010. Systems Engineering Competencies Framework 2010-0205. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2010-003.

INCOSE UK. 2009. "Systems Engineering Competency Framework." Accessed on June 3, 2015. Available at http://www.incoseonline.org.uk/Normal_Files/Publications/Framework.aspx?CatID=Publications&SubCat=INCOSEPublications.

Jansma, P.A. and M.E. Derro. 2007. "If You Want Good Systems Engineers, Sometimes You Have to Grow Your Own!." Paper presented at IEEE Aerospace Conference. 3-10 March, 2007. Big Sky, MT, USA.

Kasser, J.E., D. Hitchins, and T.V. Huynh. 2009. "Reengineering Systems Engineering." Paper presented at the 3rd Annual Asia-Pacific Conference on Systems Engineering (APCOSE), 2009, Singapore.

Menrad, R. and H. Lawson. 2008. "Development of a NASA Integrated Technical Workforce Career Development Model Entitled: Requisite Occupation Competencies and Knowledge – The ROCK." Paper presented at the 59th International Astronautical Congress (IAC), 29 September-3 October, 2008, Glasgow, Scotland.

MITRE. 2007. "MITRE Systems Engineering (SE) Competency Model." Version 1.13E. September 2007. Accessed on June 3, 201. Available at, http://www.mitre.org/publications/technical-papers/systems-engineering-competency-model.

NASA. 2009. NASA Competency Management Systems (CMS): Workforce Competency Dictionary, revision 7a. U.S. National Aeronautics and Space Administration (NASA). Washington, D.C.

NASA. 2009. Project Management and Systems Engineering Competency Model. Academy of Program/Project & Engineering Leadership (APPEL). Washington, DC, USA: US National Aeronautics and Space Administration (NASA). Accessed on June 3, 2015. Available at http://appel.nasa.gov/competency-model/.

SEI. 2007. Capability Maturity Model Integration (CMMI) for Development, version 1.2, Measurement and Analysis Process Area. Pittsburgh, PA, USA: Software Engineering Institute (SEI)/Carnegie Mellon University (CMU).

SEI. 2004. CMMI-Based Professional Certifications: The Competency Lifecycle Framework, Software Engineering Institute, CMU/SEI-2004-SR-013. Accessed on June 3, 2015. Available at http://resources.sei.cmu.edu/library/asset-view.cfm?assetid=6833.

Squires, A., W. Larson, and B. Sauser. 2010. "Mapping space-based systems engineering curriculum to government-industry vetted competencies for improved organizational performance." Systems Engineering. 13 (3): 246-260. Available at http://dx.doi.org/10.1002/sys.20146.

Squires, A., J. Wade, P. Dominick, and D. Gelosh. 2011. "Building a Competency Taxonomy to Guide Experience Acceleration of Lead Program Systems Engineers." Paper presented at the Conference on Systems Engineering Research (CSER), 15-16 April 2011, Los Angeles, CA.

Wells, B.H. 2008. "A Multi-Dimensional Hierarchical Engineering Competency Model Framework." Paper presented at IEEE International Systems Conference, March 2008, Montreal, Canada.

Widmann, E.R., G.E. Anderson, G.J. Hudak, and T.A. Hudak. 2000. "The Taxonomy of Systems Engineering Competency for The New Millennium." Presented at 10th Annual INCOSE International Symposium, 16-20 July 2000, Minneapolis, MN, USA.

Primary References

DAU. 2013. ENG Competency Model, 12 June 2013 version. in Defense Acquisition University (DAU)/U.S. Department of Defense Database Online. Accessed on June 3, 2015. Available at https://dap.dau.mil/workforce/Documents/Comp/ENG%20Competency%20Model%2020130612_Final.pdf.

INCOSE. 2010. Systems Engineering Competencies Framework 2010-0205. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2010-003.

Additional References

None.
