System Resilience


Revision as of 17:30, 23 May 2012

According to the Oxford English Dictionary (OED 1973, p. 1807), resilience is “the act of rebounding or springing back.” This definition most directly fits materials that return to their original shape after deformation. For human-made systems, the definition can be extended to “the ability of a system to recover from a disruption.” The US government (DHS 2010) defines resilience for infrastructure systems as the “ability of systems, infrastructures, government, business, communities, and individuals to resist, tolerate, absorb, recover from, prepare for, or adapt to an adverse occurrence that causes harm, destruction, or loss of national significance.” The concept of creating a resilient human-made system, or resilience engineering, is discussed by Hollnagel, Woods, and Leveson (2006), and its principles are elaborated by Jackson (2010). Further literature on resilience includes Jackson (2007) and Madni and Jackson (2009).

Topic Overview

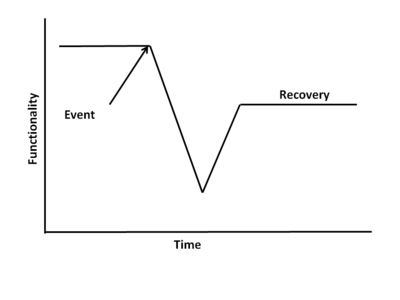

The purpose of resilience engineering and architecting is to achieve the full or partial recovery of a system following an encounter with a threat that disrupts the functionality of that system. Threats can be natural, such as earthquakes, hurricanes, tornadoes, or tsunamis; internal and human-made, such as reliability flaws and human error; or external and human-made, such as terrorist attacks. A single incident is often the result of multiple threats, such as a human error committed while attempting to recover from another threat. The attached diagram depicts the loss and recovery of the functionality of a system.

System types include product systems of a technological nature and enterprise systems such as civil infrastructures. They can be either individual systems or systems of systems.

A resilient system possesses four attributes: capacity, flexibility, tolerance, and cohesion. These attributes are adapted from Hollnagel, Woods, and Leveson (2006). Thirteen top-level design principles have been identified that achieve these attributes; they are extracted from Hollnagel et al. (2006) and elaborated in Jackson (2010).
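The loss-and-recovery curve in the diagram can be made concrete with a small numerical model. The sketch below assumes a piecewise-linear functionality profile (full functionality, a sudden drop when the threat is encountered, then linear recovery to a full or partial final level) and summarizes it as the fraction of nominal functionality delivered over an observation window, that is, the normalized area under the curve. The profile shape, the names `Disruption`, `functionality`, and `delivered_fraction`, and the choice of metric are illustrative assumptions, not definitions taken from this article or its sources.

```python
from dataclasses import dataclass


@dataclass
class Disruption:
    """Hypothetical parameters of a single loss-and-recovery episode."""
    t_hit: float        # time at which the threat is encountered
    residual: float     # functionality retained just after the hit (0..1)
    t_recovered: float  # time at which recovery ends
    final_level: float  # functionality after recovery (1.0 = full, < 1.0 = partial)


def functionality(t: float, d: Disruption) -> float:
    """Piecewise-linear functionality profile, normalized so that 1.0 is nominal."""
    if t < d.t_hit:
        return 1.0
    if t >= d.t_recovered:
        return d.final_level
    # Linear recovery from the residual level toward the final level.
    frac = (t - d.t_hit) / (d.t_recovered - d.t_hit)
    return d.residual + frac * (d.final_level - d.residual)


def delivered_fraction(d: Disruption, t_end: float, steps: int = 10_000) -> float:
    """Fraction of nominal functionality delivered over [0, t_end]:
    the area under the profile (trapezoidal rule) divided by t_end."""
    dt = t_end / steps
    area = 0.0
    for i in range(steps):
        a = functionality(i * dt, d)
        b = functionality((i + 1) * dt, d)
        area += 0.5 * (a + b) * dt
    return area / t_end
```

For a hit at t = 10 that leaves 40% functionality, with full recovery by t = 30, the delivered fraction over a 50-unit window is about 0.88; if recovery stops at 80% (partial recovery), it falls to about 0.76. A single number like this hides the shape of the curve, so it complements rather than replaces the diagram.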

The Capacity Attribute

Capacity is the attribute of a system that allows it to withstand a threat. Resilience engineering recognizes that the capacity of a system may be exceeded, forcing the system to rely on the remaining attributes to achieve recovery. The following design principles apply to the capacity attribute:

- The absorption design principle calls for the system to be designed to withstand a design-level threat, including adequate margin.

- The physical redundancy design principle states that the resilience of a system will be enhanced when critical components are physically redundant.

- The functional redundancy design principle calls for critical functions to be duplicated using different means.

- The layered defense design principle states that single-point failures should be avoided.

The absorption design principle requires the implementation of traditional specialties, such as Reliability and Safety.
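The absorption and physical redundancy principles lend themselves to simple quantitative checks, which is consistent with capacity being the most readily measured attribute. The sketch below assumes that the threat and the system's capacity can be expressed on the same scale (for example, a load), that the design margin is a multiplicative factor, and that redundant components fail independently. The function names and the 1.5 default margin are hypothetical. Independence is a strong assumption that common-cause threats such as floods often violate, which is one reason functional redundancy (different means) is called for as well.

```python
def absorbs(capacity: float, design_threat: float, margin: float = 1.5) -> bool:
    """Absorption check: capacity must cover the design-level threat times a margin.
    The 1.5 default margin is an illustrative assumption, not a standard value."""
    return capacity >= design_threat * margin


def redundant_availability(p_component: float, n: int) -> float:
    """Probability that at least one of n physically redundant components survives,
    assuming each survives independently with probability p_component."""
    if not 0.0 <= p_component <= 1.0 or n < 1:
        raise ValueError("need 0 <= p_component <= 1 and n >= 1")
    return 1.0 - (1.0 - p_component) ** n
```

With a component survival probability of 0.9, two independent copies give about 0.99 and three give about 0.999; a capacity of 150 absorbs a design threat of 100 at the assumed 1.5 margin, while a capacity of 140 does not.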

The Flexibility Attribute

Flexibility is the attribute of a system that allows it to restructure itself in the face of a threat. The following design principles apply to the flexibility attribute:

- The reorganization design principle says that the system should be able to change its own architecture before, during, or after the encounter with a threat. This design principle is applicable particularly to human systems.

- The human backup design principle requires that humans be involved to back up automated systems especially when unprecedented threats are involved.

- The complexity avoidance design principle calls for minimizing complex elements, such as software and humans, except where they are essential (see the human backup design principle).

- The drift correction design principle states that detected threats or conditions should be corrected before the encounter with the threat. These conditions can be immediate, such as the approach of a threat, or latent within the design or the organization.
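The drift correction principle can be illustrated as a monitor that watches a condition and flags it for correction before it reaches the point at which the threat would be encountered. In the sketch below, the monitored quantity, the limit, the warning fraction, the look-ahead horizon, and the linear extrapolation of the latest trend are all hypothetical choices made for illustration; it also assumes that higher values are worse.

```python
from typing import Optional, Sequence


def steps_to_limit(history: Sequence[float], limit: float) -> Optional[float]:
    """Extrapolate the most recent trend linearly and return the number of steps
    until the monitored value reaches `limit`, or None if it is not rising."""
    if len(history) < 2:
        return None
    rate = history[-1] - history[-2]
    if rate <= 0:
        return None
    return (limit - history[-1]) / rate


def needs_correction(history: Sequence[float], limit: float,
                     warn_fraction: float = 0.8, horizon: float = 5.0) -> bool:
    """Return True if a drifting condition should be corrected now, i.e. before
    the threat is encountered. Assumes higher values are worse."""
    if not history:
        return False
    if history[-1] >= warn_fraction * limit:
        return True  # already inside the warning band
    eta = steps_to_limit(history, limit)
    return eta is not None and eta <= horizon  # projected to reach the limit soon
```

A slow rise from 50 to 52 against a limit of 100 is left alone, while a jump from 50 to 65 is flagged because the trend would reach the limit in under three steps. Latent conditions in a design or organization rarely come with a numeric trace like this, so such a monitor covers only the immediate kind of drift.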

The Tolerance Attribute

Tolerance is the attribute of a system that allows it to degrade gracefully following an encounter with a threat. The following design principles apply to the tolerance attribute:

- The localized capacity design principle states that, when possible, the functionality of a system should be concentrated in individual nodes of the system and stay independent of the other nodes.

- The loose coupling design principle states that cascading failures in systems should be checked by inserting pauses between the nodes. According to Perrow (1999), humans placed at these nodes have been found to be the most effective check.

- The neutral state design principle states that systems should be brought into a neutral state before actions are taken.

- The reparability design principle states that systems should be reparable so that they can be brought back to full or partial functionality.

Most resilience design principles affect the system design processes, such as architecting. The reparability design principle affects the design of the sustainment system.
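The loose coupling principle is about stopping cascades. The sketch below models a system as a directed graph in which a failure propagates along tightly coupled links; a link marked as loosely coupled stands for an inserted pause (for example, a human check or a buffer) that, by assumption here, halts propagation completely. The graph, the node names, and the all-or-nothing treatment of a pause are hypothetical simplifications.

```python
from collections import deque


def cascade(links: dict[str, list[tuple[str, bool]]], start: str) -> set[str]:
    """Return the set of nodes that fail when `start` fails.

    `links` maps a node to (downstream_node, loosely_coupled) pairs. A failure
    propagates only across tightly coupled links (loosely_coupled is False);
    a loosely coupled link models an inserted pause that stops the cascade."""
    failed = {start}
    queue = deque([start])
    while queue:  # breadth-first propagation of the failure
        node = queue.popleft()
        for nxt, loose in links.get(node, []):
            if not loose and nxt not in failed:
                failed.add(nxt)
                queue.append(nxt)
    return failed
```

In a tightly coupled chain grid → pumps → water → hospital, a grid failure takes down all four nodes; making the pumps → water link loosely coupled confines the failure to the grid and the pumps, which is the graceful degradation the tolerance attribute aims for.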

The Cohesion Attribute

Cohesion is the attribute of a system that allows it to operate as a system before, during, and after an encounter with a threat. According to Hitchins (2009), cohesion is a basic characteristic of a system. The following global design principle applies to the cohesion attribute:

- The inter-node interaction design principle requires that the nodes (elements) of a system be capable of communicating, cooperating, and collaborating with each other. This design principle also calls for all nodes to understand the intent of all the other nodes, as described by Billings (1991).
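One yes/no check of the inter-node interaction principle is whether every node has received the stated intent of every other node. The sketch below assumes a hypothetical broadcast in which each node publishes a one-line intent and records what it receives from the others; failed communication paths are modeled as a set of (sender, receiver) pairs. The function names, node names, and intents are illustrative only, and receiving an intent is of course weaker than understanding it.

```python
from typing import Optional


def share_intent(intents: dict[str, str],
                 broken_links: Optional[set[tuple[str, str]]] = None) -> dict[str, dict[str, str]]:
    """Broadcast each node's stated intent to every other node.

    Returns, for each node, the intents it received from the others.
    A (sender, receiver) pair in broken_links models a failed communication path."""
    broken = broken_links or set()
    known: dict[str, dict[str, str]] = {node: {} for node in intents}
    for sender, intent in intents.items():
        for receiver in intents:
            if receiver != sender and (sender, receiver) not in broken:
                known[receiver][sender] = intent
    return known


def is_cohesive(known: dict[str, dict[str, str]]) -> bool:
    """Yes/no check: every node knows the intent of every other node."""
    nodes = set(known)
    return all(set(received) == nodes - {node} for node, received in known.items())
```

With three nodes such as a utility, a city, and a hospital, full broadcast passes the check, while a single broken city-to-hospital path fails it, even though every other pair is still communicating.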

The Resilience Process

Implementing resilience in a system requires the execution of both analytic and holistic processes; in particular, it requires architecting with its associated heuristics. The inputs are the desired level of resilience and the characteristics of a threat or disruption. The outputs are the characteristics of the system, particularly its architectural characteristics and the nature of its elements (e.g., hardware, software, or humans). The resulting artifacts depend on the domain of the system: for technological systems, specifications and architectural descriptions; for enterprise systems, enterprise plans.

Analytic methods determine the required capacity, while holistic methods determine the required flexibility, tolerance, and cohesion. Capacity is the only aspect of resilience that is easily measurable; for flexibility, tolerance, and cohesion, the measures are either Boolean (yes/no) or qualitative. Finally, as an overall measure of resilience, the four attributes (capacity, flexibility, tolerance, and cohesion) could be weighted and combined into an overall resilience score.
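A weighted overall score of this kind can be sketched as a weighted average over the four attributes. The weights, the 0-to-1 scale, and the mapping of Boolean and qualitative judgments onto that scale are assumptions that an analyst would have to choose and justify; the text does not prescribe them, and the labels in `QUALITATIVE` are hypothetical.

```python
from typing import Union

ATTRIBUTES = ("capacity", "flexibility", "tolerance", "cohesion")

# Hypothetical mapping of qualitative ratings onto a 0..1 scale.
QUALITATIVE = {"none": 0.0, "low": 0.25, "medium": 0.5, "high": 0.75, "full": 1.0}


def to_score(value: Union[bool, str, float]) -> float:
    """Normalize a Boolean, a qualitative label, or a numeric (0..1) rating."""
    if isinstance(value, bool):  # checked first: bool is a subclass of int
        return 1.0 if value else 0.0
    if isinstance(value, str):
        return QUALITATIVE[value.lower()]
    score = float(value)
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"numeric rating out of range: {score}")
    return score


def resilience_score(ratings: dict, weights: dict) -> float:
    """Weighted average of the four attribute scores (weights need not sum to 1)."""
    total_weight = sum(weights[a] for a in ATTRIBUTES)
    if total_weight <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(weights[a] * to_score(ratings[a]) for a in ATTRIBUTES) / total_weight
```

For example, a measured capacity of 0.8, flexibility judged present (True), tolerance rated "medium", and cohesion judged absent (False), weighted 0.4/0.2/0.2/0.2, give a score of about 0.62. Because the result depends heavily on the chosen weights and mappings, it is better suited to comparing alternatives under one agreed scheme than to stating an absolute level of resilience.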

The greatest pitfall is to ignore resilience and fall back on the assumption of protection. The Critical Thinking project (CIPP February 2007) lays out the path from protection to resilience. Because resilience depends in large part on holistic analysis, it is a pitfall to resort to reductionist thinking and analysis. It is also a pitfall to neglect the system-of-systems perspective, especially in the analysis of infrastructure systems. Many examples show that systems are more resilient when they employ the cohesion attribute; the New York City power restoration case study (Mendoca and Wallace 2006, 209-219) is one. The lesson is that all the component systems in a system of systems must recognize themselves as part of that system of systems rather than as independent systems.

Practical Considerations

Resilience is difficult to achieve for infrastructure systems because the nodes (cities, counties, states, and private entities) are reluctant to cooperate with each other. Another barrier to resilience is cost. For example, achieving redundancy in dams and levees can be prohibitively expensive. Other aspects, such as communicating on common frequencies, can be low or moderate cost; even there, cultural barriers have to be overcome for implementation.

References

Works Cited

Billings, C. 1991. Aviation Automation: A Concept and Guidelines. Moffett Field, CA, USA: National Aeronautics and Space Administration (NASA).

CIPP. February 2007. Critical Thinking: Moving from Infrastructure Protection to Infrastructure Resilience, CIP Program Discussion Paper Series. Fairfax, VA, USA: Critical Infrastructure Protection (CIP) Program/School of Law/George Mason University (GMU).

DHS. 2010. Department of Homeland Security Risk Lexicon. Washington, DC, USA: US Department of Homeland Security, Risk Steering Committee. September 2010. Available at: http://www.dhs.gov/xlibrary/assets/dhs-risk-lexicon-2010.pdf.

Hitchins, D. 2009. "What Are the General Principles Applicable to Systems?" INCOSE Insight: 59-63.

Hollnagel, E., D. Woods, and N. Leveson (eds) 2006. Resilience Engineering: Concepts and Precepts. Aldershot, UK: Ashgate Publishing Limited.

Jackson, S. 2010. Architecting Resilient Systems: Accident Avoidance and Survival and Recovery from Disruptions. A. P. Sage (ed.), Wiley Series in Systems Engineering and Management. Hoboken, NJ, USA: John Wiley & Sons.

Jackson, S. 2007. "A Multidisciplinary Framework for Resilience to Disasters and Disruptions." Journal of Design and Process Science 11: 91-108.

Madni, A. and S. Jackson. 2009. "Towards A Conceptual Framework for Resilience Engineering." IEEE Systems Journal. 3(2): 181-191.

Mendoca, D. and W. Wallace. 2006. "Adaptive Capacity: Electric Power Restoration in New York City Following the 11 September 2001 Attacks." Paper presented at 2nd Resilience Engineering Symposium, 8-10 November 2006, Juan-les-Pins, France.

OED. 1973. Oxford English Dictionary on Historical Principles, 3rd ed., s.v. "Resilience." C. T. Onions (ed.). Oxford, UK: Oxford University Press.

Perrow, C. 1999. Normal Accidents. Princeton, NJ, USA: Princeton University Press.

Primary References

DHS. 2010. Department of Homeland Security Risk Lexicon. Washington, DC, USA: US Department of Homeland Security, Risk Steering Committee. September 2010. Available at: http://www.dhs.gov/xlibrary/assets/dhs-risk-lexicon-2010.pdf.

Jackson, S. 2010. Architecting Resilient Systems: Accident Avoidance and Survival and Recovery from Disruptions. Edited by A. P. Sage, Wiley Series in Systems Engineering and Management. Hoboken, NJ, USA: John Wiley & Sons.

Additional References

Billings, C. 1991. Aviation Automation: A Concept and Guidelines. Moffett Field, CA, USA: National Aeronautics and Space Administration (NASA).

Hitchins, D. 2009. "What Are the General Principles Applicable to Systems?" INCOSE Insight. 59-63.

Hollnagel, E., D.D. Woods, and N. Leveson (eds.). 2006. Resilience Engineering: Concepts and Precepts. Aldershot, UK: Ashgate Publishing Limited.

Jackson, S. 2007. "A Multidisciplinary Framework for Resilience to Disasters and Disruptions." Journal of Design and Process Science. 11:91-108.

Madni, A. and S. Jackson. 2009. "Towards A Conceptual Framework for Resilience Engineering." IEEE Systems Journal. 3(2): 181-191.

MITRE. 2011. "Systems Engineering for Mission Assurance." System Engineering Guide. Accessed 7 March 2012.

Perrow, C. 1999. Normal Accidents. Princeton, NJ, USA: Princeton University Press.
