Difference between revisions of "System Verification"

| Line 115: | Line 115: | ||

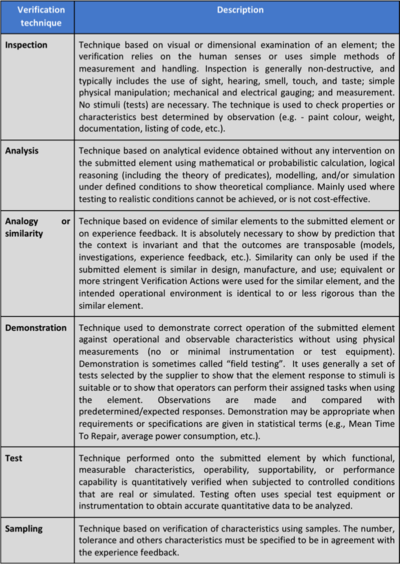

There are several verification techniques to check that an element or a system conforms to its design references, or its specified requirements. These techniques are almost the same as those used for validation, though the application of the techniques may differ slightly. In particular, the purposes are different; verification is used to detect faults/defects, whereas validation is used to provide evidence for the satisfaction of (system and/or stakeholder) requirements. Table 3 below provides synthetic descriptions of some techniques for verification. | There are several verification techniques to check that an element or a system conforms to its design references, or its specified requirements. These techniques are almost the same as those used for validation, though the application of the techniques may differ slightly. In particular, the purposes are different; verification is used to detect faults/defects, whereas validation is used to provide evidence for the satisfaction of (system and/or stakeholder) requirements. Table 3 below provides synthetic descriptions of some techniques for verification. | ||

| − | <center>'''Table 3 | + | <center>'''Table 3. Verification Techniques.''' (SEBoK Original)</center> |

[[File:Verification_techniques.png|400px|thumb|center|]] | [[File:Verification_techniques.png|400px|thumb|center|]] | ||

Revision as of 18:54, 14 August 2012

Verification is a set of actions used to check the "correctness" of any element, such as a system element, a system, a document, a service, a task, a requirement, etc. These types of actions are planned and carried out throughout the life cycle of the system. "Verification" is a generic term that needs to be instantiated within the context it occurs.

As a process, verification is a transverse activity to every life cycle stage of the system. In particular, during the development cycle of the system, the verification process is performed in parallel with the system definition and system realization processes, and applies to any activity and any product resulting from the activity. The activities of every life cycle process and those of the verification process can work together. For example, the integration process frequently uses the verification process. It is important to remember that verification, while separate from validation, is intended to be performed in conjunction with validation.

Definition and Purpose

Verification is the confirmation, through the provision of objective evidence, that specified requirements have been fulfilled.

With a note added in ISO/IEC/IEEE 15288, verification is a set of activities that compares a system or system element against the required characteristics (ISO/IEC 2008). This may include, but is not limited to, specified requirements, design description, and the system itself.

The purpose of verification, as a generic action, is to identify the faults/defects introduced at the time of any transformation of inputs into outputs. Verification is used to provide information and evidence that the transformation was made according to the selected and appropriate methods, techniques, standards, or rules.

Verification is based on tangible evidence; i.e., it is based on information whose veracity can be demonstrated by factual results obtained from techniques such as inspection, measurement, test, analysis, calculation, etc. Thus, the process of verifying a system (product, service, enterprise, or system of systems) consists of comparing the realized characteristics or properties of the product, service, or enterprise against its expected design properties.

Principles and Concepts

Concept of Verification Action

Why Verify?

Human thought is susceptible to error, and this holds for any engineering activity. Studies in human reliability have shown that people trained to perform a specific operation make around 1-3 errors per hour in best-case scenarios. In any activity, and in any outcome of an activity, the search for potential errors should not be neglected, whether or not one expects them to occur; the consequences of errors can be extremely significant failures or threats.

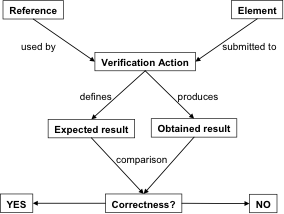

A verification action is defined, and then performed.

The definition of a verification action applied to an engineering element includes the following:

- identification of the element on which the verification action will be performed; and

- identification of the reference to define the expected result of the verification action (see examples of reference in Table 1).

The performance of a verification action includes the following:

- obtaining a result by performing the verification action onto the submitted element;

- comparing the obtained result with the expected result; and

- deducing the degree of correctness of the element.
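The definition and performance steps above can be sketched in a few lines of Python. This is only an illustrative sketch: the class name `VerificationAction`, its field names, the `obtain_result` hook, and the requirement identifier are assumptions made for this example and do not come from the SEBoK or any standard. Correctness is reduced here to a simple equality check.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class VerificationAction:
    """Definition of one verification action (all names are illustrative)."""
    element: str                      # element on which the action is performed
    reference: str                    # reference that defines the expected result
    expected_result: Any              # expected result derived from the reference
    obtain_result: Callable[[], Any]  # hypothetical hook: inspection, test, analysis, ...

    def perform(self) -> dict:
        """Obtain a result, compare it with the expected result, deduce correctness."""
        obtained = self.obtain_result()
        return {
            "element": self.element,
            "reference": self.reference,
            "expected": self.expected_result,
            "obtained": obtained,
            "correct": obtained == self.expected_result,
        }


# Example: a measurement-based check against a made-up voltage requirement.
action = VerificationAction(
    element="power supply unit",
    reference="system requirement SR-12 (hypothetical)",
    expected_result=28,
    obtain_result=lambda: 28,  # stands in for a real measurement
)
print(action.perform()["correct"])
```

Keeping the definition (element, reference, expected result) separate from the performance (`perform`) mirrors the two-part description above and lets the same definition be re-run when the element changes.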

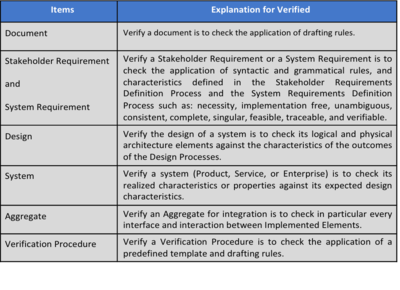

What to Verify?

Any engineering element can be verified using a specific reference for comparison: stakeholder requirement, system requirement, function, system element, document, etc. Examples are provided in Table 1.

Verification versus Validation

The term verification is often associated with the term validation and understood as a single concept of "V&V". Validation is used to ensure that “one is working the right problem,” whereas verification is used to ensure that “one has solved the problem right” (Martin 1997).

In terms of its actual and etymological meaning, the term verification comes from the Latin "verus," which means truth, and "facere," which means to make/perform. Thus, verification means to prove that something is “true” or correct (a property, a characteristic, etc.). The term validation comes from the Latin "valere," which means to become strong, and has the same etymological root as the word “value.” Thus, validation means to prove that something has the right features to produce the expected effects. (Adapted from "Verification and validation in plain English" (Jerome, Lake, INCOSE 1999).)

The main differences between the verification process and the validation process concern the references used to check the correctness of an element, and the acceptability of the effective correctness.

- Within verification, comparison between the expected result and the obtained result is generally binary, whereas within validation, the result of the comparison may require a judgment of value regarding whether or not to accept the obtained result compared to a threshold or limit.

- Verification relates more to one element, whereas validation relates more to a set of elements and considers this set as a whole.

- Validation presupposes that verification actions have already been performed.

- The techniques used to define and perform the verification actions and those for validation actions are very similar.
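The first difference in the list above (binary comparison versus a judgment against a threshold or limit) can be illustrated with a small Python sketch. The function names, the "lower is better" convention, and the `margin` band in which a person must decide are all assumptions chosen for this example, not part of any cited process.

```python
def verification_outcome(obtained, expected) -> bool:
    """Verification: the comparison with the expected result is generally binary."""
    return obtained == expected


def validation_outcome(obtained: float, limit: float, margin: float) -> str:
    """Validation: comparison against a limit may call for a value judgment.

    Assumes lower values are better. Results within `margin` of the limit
    are not decided automatically but flagged for a human decision.
    """
    if obtained <= limit - margin:
        return "accept"
    if obtained >= limit + margin:
        return "reject"
    return "needs judgment"


# A response time (seconds) checked against a hypothetical 2.0 s limit.
print(verification_outcome("v1.4", "v1.4"))
print(validation_outcome(2.05, limit=2.0, margin=0.1))
```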

Integration, Verification, and Validation of the System

There is sometimes a misconception that verification occurs after integration and before validation. In most cases, it is more appropriate to begin verification activities during development and to continue them into deployment and use.

Once the system elements have been realized, they are integrated to form the complete system. Integration consists of assembling and performing verification actions as stated in the integration process. A final validation activity generally occurs when the system is integrated, but a certain number of validation actions are also performed in parallel with system integration in order to reduce the number of verification actions and validation actions while controlling the risks that could be generated if some checks are excluded. Integration, verification, and validation are closely intertwined because the verification and validation strategy and the integration strategy must be optimized together.

Process Approach

Purpose and Principle of the Approach

The purpose of the verification process is to confirm that the system fulfills the specified design requirements. This process provides the information required to effect the remedial actions that correct non-conformances in the realized system or the processes that act on it - see ISO/IEC/IEEE 15288 (ISO/IEC/IEEE 2008).

Each system element and the complete system itself should be compared against its own design references (specified requirements). As stated by Dennis Buede, “verification is the matching of [configuration items], components, sub-systems, and the system to corresponding requirements to ensure that each has been built right” (Buede 2009). This means that the verification process is instantiated as many times as necessary during the global development of the system.

Because of the generic nature of a process, the verification process can be applied to any engineering element that has contributed to the definition and realization of the system elements and the system itself.

Given the huge number of potential verification actions that the normal approach may generate, it is necessary to optimize the verification strategy. This strategy balances what must be verified against the constraints, such as time, cost, and feasibility of testing, that naturally limit the number of verification actions, and against the risks one accepts when excluding some verification actions.

Several approaches exist that may be used for defining the verification process. The International Council on Systems Engineering (INCOSE) states that two main steps are necessary for verification: planning and performing verification actions (INCOSE 2011). NASA has a slightly more detailed approach that includes five main steps: prepare verification, perform verification, analyze outcomes, produce a report, and capture work products (NASA December 2007, 1-360, p. 102).

Any approach may be used, provided that it is appropriate to the scope of the system and the constraints of the project, includes the activities of the process listed below in some way, and is appropriately coordinated with other activities.

Generic inputs are baseline references of the submitted element. If the element is a system, inputs are the logical and physical architecture elements as described in a system design document, the design description of internal interfaces to the system and interface requirements external to the system, and by extension, the system requirements.

Generic outputs are the verification plan (which includes the verification strategy, selected verification actions, verification procedures, and verification tools), the verified element or system, verification reports, issue/trouble reports, and change requests on the design.

Activities of the Process

To establish the verification strategy drafted in a verification plan (this activity is carried out concurrently to system definition activities), the following steps are necessary:

- Identify verification scope by listing as many characteristics or properties as possible that should be checked; the number of verification actions can be extremely high.

- Identify constraints according to their origin (technical feasibility, management constraints such as cost, time, and availability of verification means or qualified personnel, and contractual constraints that are critical to the mission) that limit potential verification actions.

- Define appropriate verification techniques to be applied, such as inspection, analysis, simulation, peer-review, testing, etc., based on the best step of the project to perform every verification action according to the given constraints.

- Consider a tradeoff of what should be verified (scope) taking into account all constraints or limits and deduce what can be verified; the selection of verification actions would be made according to the type of system, objectives of the project, acceptable risks, and constraints.

- Optimize the verification strategy by defining the most appropriate verification technique for every verification action while defining necessary verification means (tools, test-benches, personnel, location, and facilities) according to the selected verification technique.

- Schedule the execution of verification actions in the project steps or milestones and define the configuration of elements submitted to verification actions (this mainly involves testing on physical elements).
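The tradeoff between scope and constraints described in the steps above can be sketched as a simple selection problem. The following Python sketch is one possible heuristic, not a method prescribed by the sources cited here: it assumes each candidate action has a single cost figure and a numeric estimate of the risk accepted if it is skipped, keeps all mandatory actions, and then greedily adds optional actions by risk reduction per unit cost until a budget is exhausted. The names `Candidate`, `select_actions`, and all sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Candidate:
    name: str
    technique: str          # e.g. inspection, analysis, simulation, test
    cost: float             # assumed single cost figure (time, money, ...)
    risk_if_skipped: float  # assumed numeric risk estimate
    mandatory: bool = False


def select_actions(
    candidates: List[Candidate], budget: float
) -> Tuple[List[Candidate], List[Candidate], float]:
    """Greedy sketch: keep mandatory actions, then add the best risk/cost ratios."""
    selected = [c for c in candidates if c.mandatory]
    remaining = budget - sum(c.cost for c in selected)
    optional = sorted(
        (c for c in candidates if not c.mandatory),
        key=lambda c: c.risk_if_skipped / max(c.cost, 1e-9),  # guard zero cost
        reverse=True,
    )
    skipped = []
    for c in optional:
        if c.cost <= remaining:
            selected.append(c)
            remaining -= c.cost
        else:
            skipped.append(c)
    accepted_risk = sum(c.risk_if_skipped for c in skipped)
    return selected, skipped, accepted_risk


candidates = [
    Candidate("A: safety interlock test", "test", 5, 10, mandatory=True),
    Candidate("B: interface inspection", "inspection", 3, 9),
    Candidate("C: thermal simulation", "simulation", 4, 4),
    Candidate("D: design peer review", "peer-review", 2, 5),
]
selected, skipped, accepted_risk = select_actions(candidates, budget=10)
print([c.name for c in selected], accepted_risk)
```

A greedy ratio rule is easy to explain to stakeholders but is not guaranteed to be optimal; the point of the sketch is that the excluded actions and the accepted risk are made explicit rather than left implicit.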

Performing verification actions includes the following tasks:

- Detail each verification action; in particular, note the expected results, the verification techniques to be applied, and the corresponding means required (equipment, resources, and qualified personnel).

- Acquire verification means used during system definition steps (qualified personnel, modeling tools, mock-ups, simulators, and facilities), and then those used during the integration step (qualified personnel, verification tools, measuring equipment, facilities, verification procedures, etc.).

- Carry out verification procedures at the right time, in the expected environment, with the expected means, tools, and techniques.

- Capture and record the results obtained when performing verification actions using verification procedures and means.

The obtained results must be analyzed and compared to the expected results so that the status may be recorded as either compliant or non-compliant. The systems engineer will likely need to generate verification reports, as well as potential issue/trouble reports and change requests on the design, as necessary.

Controlling the process includes the following tasks:

- Update the verification plan according to the progress of the project; in particular, planned verification actions can be redefined because of unexpected events.

- Coordinate verification activities with the project manager: review the schedule and the acquisition of means, personnel, and resources. Coordinate with designers for issues/trouble/non-conformance reports and with the configuration manager for versions of the physical elements, design baselines, etc.
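The analysis of obtained results described before the control tasks (recording each status as compliant or non-compliant and raising issue/trouble reports) might look like the following Python sketch. The record layout, the function name `analyze`, and the report wording are assumptions for illustration only; a real project would follow its own report templates.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class RecordedResult:
    action_id: str  # hypothetical identifier of the verification action
    element: str    # element submitted to verification
    expected: Any
    obtained: Any


def analyze(results: List[RecordedResult]) -> Tuple[Dict[str, str], List[str]]:
    """Record a status per action and draft one issue report per non-compliance."""
    statuses: Dict[str, str] = {}
    issue_reports: List[str] = []
    for r in results:
        compliant = r.obtained == r.expected
        statuses[r.action_id] = "compliant" if compliant else "non-compliant"
        if not compliant:
            issue_reports.append(
                f"{r.action_id}: {r.element} expected {r.expected!r}, "
                f"obtained {r.obtained!r}"
            )
    return statuses, issue_reports


results = [
    RecordedResult("VA-001", "connector J1", "pin count 24", "pin count 24"),
    RecordedResult("VA-002", "firmware image", "checksum OK", "checksum mismatch"),
]
statuses, issue_reports = analyze(results)
print(statuses)
print(issue_reports)
```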

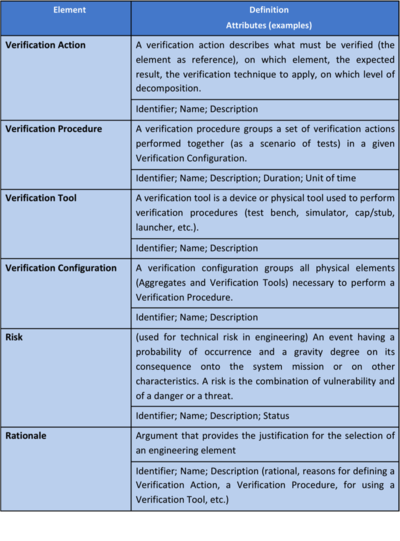

Artifacts and Ontology Elements

This process may create several artifacts:

- verification plans (contain the verification strategy);

- verification matrices (contain the verification action, submitted element, applied technique, step of execution, system block concerned, expected result, obtained result, etc.);

- verification procedures (describe verification actions to be performed, verification tools needed, the verification configuration, resources and personnel needed, the schedule, etc.);

- verification reports;

- verification tools;

- verified elements;

- issue / non-conformance / trouble reports; and

- change requests to the design.
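As an illustration of the verification matrix listed among the artifacts above, the following Python sketch stores one row per verification action and exports the matrix as CSV using the standard `csv` module. The column names follow the contents listed for verification matrices; the exact spelling of the columns, the function name `matrix_to_csv`, and the sample row are assumptions for this example.

```python
import csv
import io
from typing import Dict, List

# Column names adapted from the matrix contents listed above (spelling assumed).
COLUMNS = [
    "verification_action",
    "submitted_element",
    "applied_technique",
    "step_of_execution",
    "system_block",
    "expected_result",
    "obtained_result",
]


def matrix_to_csv(rows: List[Dict[str, str]]) -> str:
    """Serialize verification matrix rows to CSV text; missing cells stay empty."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {
        "verification_action": "VA-001",
        "submitted_element": "connector J1",
        "applied_technique": "inspection",
        "step_of_execution": "integration",
        "system_block": "harness",
        "expected_result": "pin count 24",
        # obtained_result is left empty until the action has been performed
    }
]
print(matrix_to_csv(rows))
```

Leaving `obtained_result` empty until the action is performed lets the same matrix serve both as a planning artifact and as a record of results.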

This process handles the ontology elements displayed in Table 2 below.

Methods and Techniques

There are several verification techniques to check that an element or a system conforms to its design references, or its specified requirements. These techniques are almost the same as those used for validation, though the application of the techniques may differ slightly. In particular, the purposes are different; verification is used to detect faults/defects, whereas validation is used to provide evidence for the satisfaction of (system and/or stakeholder) requirements. Table 3 below provides synthetic descriptions of some techniques for verification.

Practical Considerations

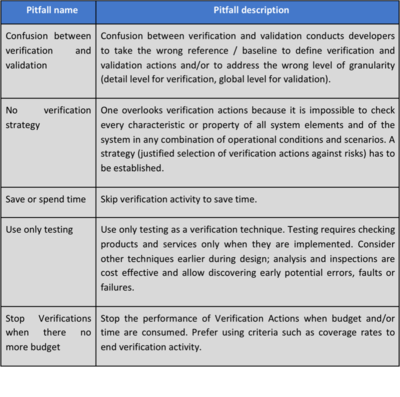

Major pitfalls encountered with system verification are presented in Table 4.

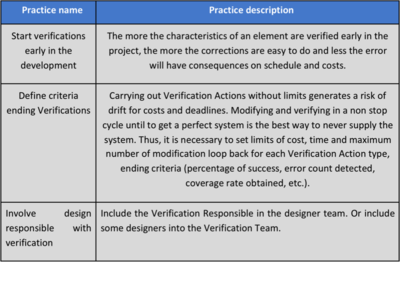

Major proven practices encountered with system verification are presented in Table 5.

References

Works Cited

Buede, D.M. 2009. The Engineering Design of Systems: Models and Methods. 2nd ed. Hoboken, NJ, USA: John Wiley & Sons Inc.

INCOSE. 2011. INCOSE Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities, version 3.2.1. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2003-002-03.2.1.

ISO/IEC. 2008. Systems and Software Engineering - System Life Cycle Processes. Geneva, Switzerland: International Organization for Standardization (ISO)/International Electrotechnical Commission (IEC). ISO/IEC/IEEE 15288:2008 (E).

NASA. 2007. Systems Engineering Handbook. Washington, DC, USA: National Aeronautics and Space Administration (NASA), December 2007. NASA/SP-2007-6105.

Primary References

INCOSE. 2011. INCOSE Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities, version 3.2.1. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2003-002-03.2.1.

ISO/IEC/IEEE. 2008. Systems and Software Engineering - System Life Cycle Processes. Geneva, Switzerland: International Organization for Standardization (ISO)/International Electrotechnical Commission (IEC), Institute of Electrical and Electronics Engineers. ISO/IEC/IEEE 15288:2008 (E).

NASA. 2007. Systems Engineering Handbook. Washington, D.C.: National Aeronautics and Space Administration (NASA), December 2007. NASA/SP-2007-6105.

Additional References

Buede, D.M. 2009. The Engineering Design of Systems: Models and Methods. 2nd ed. Hoboken, NJ, USA: John Wiley & Sons Inc.

DAU. 2010. Defense Acquisition Guidebook (DAG). Ft. Belvoir, VA, USA: Defense Acquisition University (DAU)/U.S. Department of Defense (DoD). February 19, 2010.

ECSS. 2009. Systems Engineering General Requirements. Noordwijk, Netherlands: Requirements and Standards Division, European Cooperation for Space Standardization (ECSS), 6 March 2009. ECSS-E-ST-10C.

MITRE. 2011. "Verification and Validation." Systems Engineering Guide. Accessed 11 March 2012 at [[1]].

SAE International. 1996. Certification Considerations for Highly-Integrated or Complex Aircraft Systems. Warrendale, PA, USA: SAE International, ARP4754.

SEI. 2007. "Measurement and Analysis Process Area" in Capability Maturity Model Integrated (CMMI) for Development, version 1.2. Pittsburgh, PA, USA: Software Engineering Institute (SEI)/Carnegie Mellon University (CMU).
