Difference between revisions of "Assessing Individuals"

m (Text replacement - "<center>'''SEBoK v. 2.0, released 1 June 2019'''</center>" to "<center>'''SEBoK v. 2.1, released 31 October 2019'''</center>") |

m (Text replacement - "SEBoK v. 2.9, released 20 November 2023" to "SEBoK v. 2.10, released 06 May 2024") |

Latest revision as of 21:52, 2 May 2024

Lead Author: Heidi Davidz, Contributing Authors: Alice Squires, Art Pyster

The ability to fairly assess individuals is a critical aspect of enabling individuals. This article describes how to assess the systems engineering (SE) competencies needed and possessed by an individual, as well as that individual’s SE performance.

Assessing Competency Needs

If an organization wants to use its own customized competency model, an initial decision is make vs. buy. If there is an existing SE competency model that fits the organization's context and purpose, the organization might want to use the existing SE competency model directly. If existing models must be tailored or a new SE competency model developed, the organization should first understand its context.

Determining Context

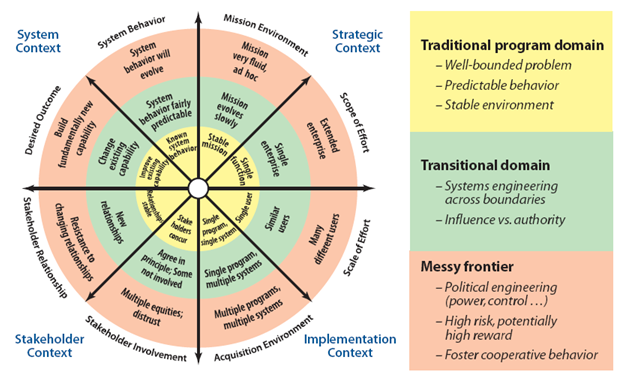

Prior to understanding what SE competencies are needed, it is important for an organization to examine the situation in which it is embedded, including environment, history, and strategy. As Figure 1 shows, MITRE has developed a framework characterizing different levels of systems complexity (MITRE 2007, 1-12). This framework may help an organization identify which competencies are needed. An organization working primarily in the traditional program domain may need to emphasize a different set of competencies than an organization working primarily in the messy frontier. If an organization seeks to improve existing capabilities in one area, extensive technical knowledge in that specific area might be very important. For example, if stakeholder involvement is characterized by multiple equities and distrust, rather than collaboration and concurrence, a higher level of competency in being able to balance stakeholder requirements might be needed. If the organization's desired outcome builds a fundamentally new capability, technical knowledge in a broader set of areas might be useful.

Additionally, an organization might consider both its current situation and its forward strategy. For example, if an organization has previously worked in a traditional systems engineering context (MITRE 2007) but has a strategy to transition into enterprise systems engineering (ESE) work in the future, that organization might want to develop a competency model both for what was important in the traditional SE context and for what will be required for ESE work. This would also hold true for an organization moving to a different contracting environment where competencies, such as the ability to properly tailor the SE approach to right-size the SE effort and balance cost and risk, might be more important.

Determining Roles and Competencies

Once an organization has characterized its context, the next step is to understand exactly what SE roles are needed and how those roles will be allocated to teams and individuals. To assess the performance of an individual, it is essential to explicitly state the roles and competencies required for that individual. See the references in Roles and Competencies for guides to existing SE standards and SE competency models.

Assessing Individual SE Competency

In order to demonstrate competence, there must be some way to qualify and measure it, and this is where competency assessment is used (Holt and Perry 2011). This assessment informs the interventions needed to further develop the individual SE knowledge, skills, abilities, and attitudes (KSAAs) upon which competency is based. Described below are possible methods for assessing an individual's current competency level; an organization should choose the model appropriate to its context, as identified previously.

Proficiency Levels

In order to provide a context for individuals and organizations to develop competencies, a consistent system of defining KSAAs should be created. One popular method is based on Bloom’s taxonomy (Bloom 1984), presented below for the cognitive domain in order from least complex to most complex cognitive ability.

- Remember: Recall or recognize terms, definitions, facts, ideas, materials, patterns, sequences, methods, principles, etc.

- Understand: Read and understand descriptions, communications, reports, tables, diagrams, directions, regulations, etc.

- Apply: Know when and how to use ideas, procedures, methods, formulas, principles, theories, etc.

- Analyze: Break down information into its constituent parts and recognize their relationships to one another and how they are organized; identify sublevel factors or salient data from a complex scenario.

- Evaluate: Make judgments about the value of proposed ideas, solutions, etc., by comparing the proposal to specific criteria or standards.

- Create: Put parts or elements together in such a way as to reveal a pattern or structure not clearly there before; identify which data or information from a complex set is appropriate to examine further or from which supported conclusions can be drawn.

One way to assess competency is to assign KSAAs to proficiency level categories within each competency. Examples of proficiency levels include the INCOSE competency model, with proficiency levels of awareness, supervised practitioner, practitioner, and expert (INCOSE 2010). The Academy of Program/Project & Engineering Leadership (APPEL) competency model includes the levels participate, apply, manage, and guide (Menrad and Lawson 2008). The U.S. National Aeronautics and Space Administration (NASA), as part of the APPEL (APPEL 2009), has also defined proficiency levels: technical engineer/project team member, subsystem lead/manager, project manager/project systems engineer, and program manager/program systems engineer. The Defense Civilian Personnel Advisory Service (DCPAS) defines a five-tier framework to indicate the degree to which employees perform competencies: awareness, basic, intermediate, advanced, and expert.
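As a rough illustration of assigning proficiency levels within each competency, the sketch below encodes the four INCOSE levels named above as an ordered enumeration and compares the level a role needs with the level at which an individual has been assessed. The competency names, the role profile, the individual's levels, and the `competency_gaps` helper are all hypothetical; none of them is taken from the cited models.

```python
from enum import IntEnum


class Proficiency(IntEnum):
    """INCOSE proficiency levels as listed in the text, lowest to highest."""
    AWARENESS = 1
    SUPERVISED_PRACTITIONER = 2
    PRACTITIONER = 3
    EXPERT = 4


def competency_gaps(needed, possessed):
    """Return {competency: levels short} for each competency below the needed level.

    A competency missing from `possessed` is treated as not yet assessed and
    counted from zero (an assumption of this sketch).
    """
    gaps = {}
    for competency, required in needed.items():
        current = possessed.get(competency, 0)
        shortfall = int(required) - int(current)
        if shortfall > 0:
            gaps[competency] = shortfall
    return gaps


# Hypothetical role profile and assessment result, for illustration only.
role_needs = {
    "risk management": Proficiency.PRACTITIONER,
    "stakeholder requirements": Proficiency.EXPERT,
    "systems thinking": Proficiency.SUPERVISED_PRACTITIONER,
}
individual = {
    "risk management": Proficiency.EXPERT,
    "stakeholder requirements": Proficiency.PRACTITIONER,
}

gaps = competency_gaps(role_needs, individual)
print(gaps)
```

An ordered enumeration is used here only because the levels are described as a progression; an organization using a different framework, such as the five DCPAS tiers, would substitute its own levels.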

The KSAAs defined in the lower levels of the cognitive domain (remember, understand) are typically foundational and involve demonstration of basic knowledge. The higher levels (apply, analyze, evaluate, and create) reflect higher cognitive ability. Cognitive and affective processes within Bloom’s taxonomy refer to levels of observable actions that indicate learning is occurring (Whitcomb et al. 2015). Bloom’s domain levels should not be used exclusively to determine the proficiency levels required for attainment or assessment of a competency. Higher-level cognitive capabilities belong across proficiency levels and should be used as appropriate to the KSAA involved. These higher-level terms imply some observable action or outcome, so the context for assessing the attainment of the KSAA, or a group of KSAAs, related to a competency needs to be defined. For example, applying SE methods can be accomplished on simple subsystems or systems and so perhaps belongs in a lower proficiency level such as supervised practitioner. Applying SE methods to complex enterprises or systems of systems may belong in the practitioner or even the expert level. The proficiency level desired for each KSAA is determined by the organization and may vary among organizations.
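The point that a Bloom level alone does not fix a proficiency level can be sketched as a lookup keyed on both the cognitive level and the context in which it is exercised. This is a minimal sketch under stated assumptions: the context labels, the table contents, and the function names are hypothetical, and the choice of "practitioner" for the system-of-systems case is one of the options the paragraph above allows, not a rule from any cited model.

```python
from enum import IntEnum


class Bloom(IntEnum):
    """Bloom's cognitive levels, least to most complex, as listed in the text."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


def is_foundational(level):
    """Remember and understand are the foundational levels named in the text."""
    return level <= Bloom.UNDERSTAND


# Hypothetical organization-defined table: (Bloom level, context) -> proficiency.
KSAA_PROFICIENCY = {
    (Bloom.APPLY, "simple subsystem"): "supervised practitioner",
    (Bloom.APPLY, "system of systems"): "practitioner",
}


def required_proficiency(bloom_level, context):
    """Look up the proficiency level; None means the organization has not defined one."""
    return KSAA_PROFICIENCY.get((bloom_level, context))


print(required_proficiency(Bloom.APPLY, "simple subsystem"))
print(required_proficiency(Bloom.APPLY, "system of systems"))
```

Keeping the table as explicit data reflects the statement that each organization decides the desired proficiency level for each KSAA.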

Quality of Competency Assessment

When using application as a measure of competency, it is important to have a measure of goodness. If someone is applying a competency in an exceptionally complex situation, they may not necessarily be successful in this application. An individual may be managing and guiding, but this is only helpful to the organization if it is being done well. In addition, an individual might be fully proficient in a particular competency, but not be given an opportunity to use that competency; for this reason, it is important to understand the context in which these competencies are being assessed.

Individual SE Competency versus Performance

Even when an individual is highly proficient in an SE competency, context may preclude exemplary performance of that competency. For example, an individual with high competency in risk management may be embedded in a team or an organization which ignores that talent, whether because of flawed procedures or some other reason. Developing individual competencies is not enough to ensure exemplary SE performance.

When SE roles are clearly defined, performance assessment at least has a chance to be objective. However, since teams are most often tasked with accomplishing the SE tasks on a project, it is the team's performance which ends up being assessed (see Team Capability). The final execution and performance of SE is a function of competency, capability, and capacity (see Enabling Teams and Enabling Businesses and Enterprises).

References

Works Cited

Academy of Program/Project & Engineering Leadership (APPEL). 2009. NASA's Systems Engineering Competencies. Washington, DC, USA: U.S. National Aeronautics and Space Administration. Available at: http://www.nasa.gov/offices/oce/appel/pm-development/pm_se_competency_framework.html.

Bloom, B.S. 1984. Taxonomy of Educational Objectives. New York, NY, USA: Longman.

Holt, J., and S. Perry. 2011. A Pragmatic Guide to Competency: Tools, Frameworks and Assessment. Swindon, UK: BCS, The Chartered Institute for IT.

INCOSE. 2010. Systems Engineering Competencies Framework 2010-0205. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2010-003.

Menrad, R. and H. Lawson. 2008. "Development of a NASA integrated technical workforce career development model entitled: Requisite occupation competencies and knowledge – The ROCK." Paper presented at the 59th International Astronautical Congress (IAC). 29 September-3 October 2008. Glasgow, Scotland.

MITRE. 2007. Enterprise Architecting for Enterprise Systems Engineering. Warrendale, PA, USA: SEPO Collaborations, SAE International.

Whitcomb, C., J. Delgado, R. Khan, J. Alexander, C. White, D. Grambow, and P. Walter. 2015. "The Department of the Navy systems engineering career competency model." Proceedings of the Twelfth Annual Acquisition Research Symposium. Naval Postgraduate School, Monterey, CA, USA.

Primary References

Academy of Program/Project & Engineering Leadership (APPEL). 2009. NASA's Systems Engineering Competencies. Washington, DC, USA: U.S. National Aeronautics and Space Administration (NASA). Accessed on May 2, 2014. Available at http://appel.nasa.gov/career-resources/project-management-and-systems-engineering-competency-model/.

INCOSE. 2010. Systems Engineering Competencies Framework 2010-0205. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2010-003.

Additional References

Holt, J., and S. Perry. 2011. A Pragmatic Guide to Competency: Tools, Frameworks and Assessment. Swindon, UK: British Computer Society.