Difference between revisions of "Assessing Systems Engineering Performance of Business and Enterprises"

m (Text replacement - "SEBoK v. 2.9, released 13 November 2023" to "SEBoK v. 2.9, released 20 November 2023") |

|||

| (156 intermediate revisions by 12 users not shown) | |||

| Line 1: | Line 1: | ||

| − | At the project level, systems engineering [[measurement]] focuses on indicators of project and system success that are relevant to the project and its stakeholders. At the | + | ---- |

| + | '''''Lead Authors:''''' ''Hillary Sillitto, Alice Squires, Heidi Davidz'', '''''Contributing Authors:''''' ''Art Pyster, Richard Beasley'' | ||

| + | ---- | ||

| + | At the project level, {{Term|Systems Engineering (glossary)|systems engineering}} (SE) [[measurement]] focuses on indicators of project and system success that are relevant to the project and its stakeholders. At the enterprise level there are additional concerns. SE {{Term|Governance (glossary)|governance}} should ensure that the performance of systems engineering within the enterprise adds value to the organization, is aligned to the organization's purpose, and implements the relevant parts of the organization's strategy. | ||

| − | + | For enterprises that are traditional {{Term|Business (glossary)|businesses}} this is easier, because such organizations typically have more control levers than more loosely structured enterprises. The governance levers that can be used to improve performance include people (selection, training, culture, incentives), process, tools and infrastructure, and organization; therefore, the assessment of systems engineering performance in an enterprise should cover these dimensions. | |

| − | + | Being able to aggregate high quality data about the performance of teams with respect to SE activities is certainly of benefit when trying to guide team activities. Having access to comparable data, however, is often difficult, especially in organizations that are relatively autonomous, use different technologies and tools, build products in different domains, have different types of customers, etc. Even if there is limited ability to reliably collect and aggregate data across teams, having a policy that consciously decides how the enterprise will address data collection and analysis is valuable. | |

| − | + | == Performance Assessment Measures == | |

| − | *SE | + | Typical measures for assessing SE performance of an enterprise include the following: |

| + | |||

| + | *Effectiveness of SE process | ||

*Ability to mobilize the right resources at the right time for a new project or new project phase | *Ability to mobilize the right resources at the right time for a new project or new project phase | ||

| − | * | + | *Quality of SE process outputs |

| + | *Timeliness of SE process outputs | ||

*SE added value to project | *SE added value to project | ||

*System added value to end users | *System added value to end users | ||

| Line 16: | Line 22: | ||

*Individuals' SE competence development | *Individuals' SE competence development | ||

*Resource utilization, current and forecast | *Resource utilization, current and forecast | ||

| − | *Deployment and consistent usage of tools and methods | + | *Productivity of systems engineers |

| + | *Deployment and consistent usage of tools and methods | ||

| + | == How Measures Fit in the Governance Process and Improvement Cycle == | ||

| + | Since collecting data and analyzing it takes effort that is often significant, measurement is best done when its purpose is clear and is part of an overall strategy. The "goal, question, metric" paradigm (Basili 1992) should be applied, in which measurement data is collected to answer specific questions, the answer to which helps achieve a goal, such as decreasing the cost of creating a system architecture or increasing the value of a system to a particular stakeholder. Figure 1 shows one way in which appropriate measures inform enterprise level governance and drive an improvement cycle such as the Six Sigma DMAIC (Define, Measure, Analyze, Improve, Control) model. | ||

| − | |||

| − | |||

| − | + | [[File:Picture1_HGS.png|thumb|center|800px|'''Figure 1. Assessing Systems Engineering Performance in Business or Enterprise: Part of Closed Loop Governance.''' (SEBoK Original)]] | |

| − | [[File:Picture1_HGS.png|thumb| | ||

== Discussion of Performance Assessment Measures == | == Discussion of Performance Assessment Measures == | ||

| Line 29: | Line 35: | ||

=== Assessing SE Internal Process (Quality and Efficiency)=== | === Assessing SE Internal Process (Quality and Efficiency)=== | ||

| − | Process | + | A {{Term|Process (glossary)}} is a ''"set of interrelated or interacting activities which transforms inputs into outputs."'' The SEI CMMI Capability Maturity Model (SEI 2010) provides a structured way for businesses and enterprises to assess their SE processes. In the CMMI, a process area is a cluster of related practices in an area that, when implemented collectively, satisfies a set of goals considered important for making improvement in that area. There are CMMI models for acquisition, for development, and for services (SEI 2010, 11). CMMI defines how to assess individual process areas against Capability Levels on a scale from 0 to 3, and overall organizational maturity on a scale from 1 to 5. |

| − | |||

| − | The SEI CMMI Capability Maturity Model (2010) provides a structured way for businesses and enterprises to assess their SE processes. In the CMMI, a process area is a cluster of related practices in an area that, when implemented collectively, satisfies a set of goals considered important for making improvement in that area. There are CMMI models for acquisition, for development, and for services | ||

| − | |||

| − | CMMI defines how to assess individual process areas against Capability Levels on a scale from 0 to 3, and overall organizational maturity on a scale from 1 to 5. | ||

=== Assessing Ability to Mobilize for a New Project or New Project Phase === | === Assessing Ability to Mobilize for a New Project or New Project Phase === | ||

| − | Successful and timely project initiation and execution depends on having the right people available at the right time. If key resources are deployed they cannot be applied to new projects at the early stages when these resources make the most difference. | + | Successful and timely project initiation and execution depends on having the right people available at the right time. If key resources are deployed elsewhere, they cannot be applied to new projects at the early stages when these resources make the most difference. Queuing theory shows that if a resource pool is running at or close to capacity, delays and queues are inevitable. |

| − | The ability to manage teams through their lifecycle | + | The ability to manage teams through their lifecycle is an organizational capability that has substantial leverage on project and organizational efficiency and effectiveness. This includes being able to |

| + | *mobilize teams rapidly; | ||

| + | *establish and tailor an appropriate set of processes, metrics and systems engineering plans; | ||

| + | *support them to maintain a high level of performance; | ||

| + | *capitalize acquired knowledge; and | ||

| + | *redeploy team members expeditiously as the team winds down. | ||

| − | Specialists and experts are used to a review | + | Specialists and experts are used to a review process, critiquing solutions, creating novel solutions, and solving critical problems. Specialists and experts are usually a scarce resource. Few businesses have the luxury of having enough experts with all the necessary skills and behaviors on tap to allocate to all teams just when needed. If the skills are core to the business' competitive position or governance approach, then it makes sense to manage them through a governance process that ensures their skills are applied to greatest effect across the business. |

| − | + | Businesses typically find themselves balancing between having enough headroom to keep projects on schedule when things do not go as planned and utilizing resources efficiently. | |

=== Project SE Outputs (Cost, Schedule, Quality) === | === Project SE Outputs (Cost, Schedule, Quality) === | ||

| − | Many | + | Many SE outputs in a project are produced early in the life cycle to enable downstream activities. Hidden defects in the early phase SE work products may not become fully apparent until the project hits problems in integration, verification and validation, or transition to operations. Intensive peer review and rigorous modeling are the normal ways of detecting and correcting defects in and lack of coherence between SE work products. |

| − | + | Leading indicators could be monitored at the organizational level to help direct support to projects or teams heading for trouble. For example, the INCOSE Leading Indicators report (Roedler et al. 2010) offers a set of indicators that is useful at the project level. Lean Sigma provides a tool for assessing benefit delivery throughout an enterprise value stream. Lean Enablers for Systems Engineering are now being developed (Oppenheim et al. 2010). An emerging good practice is to use {{Term|Lean Value Stream Mapping (glossary)}} to aid the optimization of project plans and process application. | |

| − | + | In a mature organization, one good measure of [[Quality Management|SE quality]] is the number of defects that have to be corrected "out of phase"; i.e., at a later phase in the life cycle than the one in which the defect was introduced. This gives a good measure of process performance and the quality of SE outputs. Within a single project, the Work Product Approval, Review Action Closure, and Defect Error trends contain information that allows residual defect densities to be estimated (Roedler et al. 2010; Davies and Hunter 2001). | |

| − | + | Because of the leverage of front-end SE on overall project performance, it is important to focus on quality and timeliness of SE deliverables (Woodcock 2009). | |

| − | |||

| − | + | === SE Added Value to Project === | |

| + | SE that is properly [[Systems Engineering Management|managed]] and performed should add value to the project in terms of quality, risk avoidance, improved coherence, better management of issues and dependencies, right-first-time integration and formal verification, stakeholder management, and effective scope management. Because quality and quantity of SE are not the only factors that influence these outcomes, and because the effect is a delayed one (good SE early in the project pays off in later phases) there has been a significant amount of research to establish evidence to underpin the asserted benefits of SE in projects. | ||

| − | + | A summary of the main results is provided in the [[Economic Value of Systems Engineering]] article. | |

| − | |||

| − | + | === System Added Value to End Users === | |

| + | System-added value to end users depends on system effectiveness and on alignment of the requirements and design to the end users' purpose and mission. System end users are often only involved indirectly in the procurement process. | ||

| − | + | Research on the value proposition of SE shows that good project outcomes do not necessarily correlate with good end user experience. Sometimes systems developers are discouraged from talking to end users because the acquirer is afraid of requirements creep. There is experience to the contrary – that end user involvement can result in more successful and simpler system solutions. | |

| − | |||

| − | + | Two possible measures indicative of end user satisfaction are: | |

| − | + | #The use of user-validated mission scenarios (both nominal and "rainy day" situations) to validate requirements, drive trade-offs and organize testing and acceptance; | |

| − | + | #The use of {{Term|Technical Performance Measure (TPM) (glossary)}} to track critical performance and non-functional system attributes directly relevant to operational utility. The INCOSE SE Leading Indicators Guide (Roedler et al. 2010, 10 and 68) defines "technical measurement trends" as ''"Progress towards meeting the {{Term|Measure of Effectiveness (MoE) (glossary)}} / {{Term|Measure of Performance (MoP) (glossary)}} / Key Performance Parameters (KPPs) and {{Term|Technical Performance Measure (TPM) (glossary)}}"''. A typical TPM progress plot is shown in Figure 2. | |

| − | + | [[File:TPM_Chart_from_INCOSE_SELIG.png|thumb|center|600px|'''Figure 2. Technical Performance Measure (TPM) Tracking (Roedler et al. 2010).''' This material is reprinted with permission from the International Council on Systems Engineering (INCOSE). All other rights are reserved by the copyright owner.]] | |

| − | |||

| − | + | === SE Added Value to Organization === | |

| + | SE at the business/enterprise level aims to develop, deploy and enable effective SE to add value to the organization’s business. The SE function in the business/enterprise should understand the part it has to play in the bigger picture and identify appropriate performance measures - derived from the business or enterprise goals, and coherent with those of other parts of the organization - so that it can optimize its contribution. | ||

| − | === | + | === Organization's SE Capability Development === |

| − | + | The CMMI (SEI 2010) provides a means of assessing the process capability and maturity of businesses and enterprises. The higher CMMI levels are concerned with systemic integration of capabilities across the business or enterprise. | |

| − | + | CMMI measures one important dimension of capability development, but CMMI maturity level is not a direct measure of business effectiveness unless the SE measures are properly integrated with business performance measures. These may include bid success rate, market share, position in value chain, development cycle time and cost, level of innovation and re-use, and the effectiveness with which SE capabilities are applied to the specific problem and solution space of interest to the business. | |

| − | |||

| − | === | + | === Individuals' SE Competence Development === |

| − | ' | + | Assessment of Individuals' SE competence development is described in [[Assessing Individuals]]. |

| − | + | === Resource Utilization, Current and Forecast === | |

| + | Roedler et al. (2010, 58) offer various metrics for staff ramp-up and use on a project. Across the business or enterprise, key indicators include the overall manpower trend across the projects, the stability of the forward load, levels of overtime, the resource headroom (if any), staff turnover, level of training, and the period of time for which key resources are committed. | ||

| − | Deployment of | + | === Deployment and Consistent Usage of Tools and Methods === |

| + | It is common practice to use a range of software tools in an effort to manage the complexity of system development and in-service management. These range from simple office suites to complex logical, virtual reality and physics-based modeling environments. | ||

| − | + | Deployment of SE tools requires careful consideration of purpose, business objectives, business effectiveness, training, aptitude, method, style, infrastructure, support, integration of the tool with the existing or revised SE process, and approaches to ensure consistency, longevity and appropriate configuration management of information. Systems may be in service for upwards of 50 years, but storage media and file formats that are 10-15 years old are unreadable on most modern computers. It is desirable for many users to be able to work with a single common model; however, two engineers sitting next to each other and using the same tool may use sufficiently different modeling styles that they cannot work on or re-use each other's models. |

| − | |||

| − | + | License usage over time and across sites and projects is a key indicator of extent and efficiency of tool deployment. More difficult to assess is the consistency of usage. Roedler et al. (2010, 73) recommend metrics on "facilities and equipment availability". | |

| − | + | == Practical Considerations == | |

| − | + | Assessment of SE performance at the business/enterprise level is complex and needs to consider soft issues as well as hard issues. Stakeholder concerns and satisfaction criteria may not be obvious or explicit. Clear and explicit reciprocal expectations and alignment of purpose, values, goals and incentives help to achieve synergy across the organization and avoid misunderstanding. | |

| − | + | ''"What gets measured gets done."'' Because metrics drive behavior, it is important to ensure that metrics used to manage the organization reflect its purpose and values, and that they do not drive perverse behaviors (Roedler et al. 2010). | |

| − | + | Process and measurement cost money and time, so it is important to get the right amount of process definition and the right balance of investment between process, measurement, people and skills. Any process flexible enough to allow innovation will also be flexible enough to allow mistakes. If process is seen as excessively restrictive or prescriptive, it may inhibit innovation and demotivate the innovators in an effort to prevent mistakes, leading to excessive risk avoidance. | |

| − | + | It is possible for a process improvement effort to become an end in itself rather than a means to improve business performance (Sheard 2003). To guard against this, it is advisable to remain clearly focused on purpose (Blockley and Godfrey 2000) and on added value (Oppenheim et al. 2010) as well as to ensure clear and sustained top management commitment to driving the process improvement approach to achieve the required business benefits. Good process improvement is as much about establishing a performance culture as about process. | |

| − | + | <blockquote> ''The Systems Engineering process is an essential complement to, and is not a substitute for, individual skill, creativity, intuition, judgment etc. Innovative people need to understand the process and how to make it work for them, and neither ignore it nor be slaves to it. Systems Engineering measurement shows where invention and creativity need to be applied. SE process creates a framework to leverage creativity and innovation to deliver results that surpass the capability of the creative individuals – results that are the emergent properties of process, organisation, and leadership.'' (Sillitto 2011)</blockquote> | |

| + | ==References== | ||

| + | ===Works Cited=== | ||

| + | Basili, V. 1992. "[[Software Modeling and Measurement: The Goal/Question/Metric Paradigm]]" Technical Report CS-TR-2956. University of Maryland: College Park, MD, USA. Accessed on August 28, 2012. Available at http://www.cs.umd.edu/~basili/publications/technical/T78.pdf. | ||

| + | |||

| + | Blockley, D. and P. Godfrey. 2000. ''Doing It Differently – Systems for Rethinking Construction''. London, UK: Thomas Telford Ltd. | ||

| + | |||

| + | Davies, P. and N. Hunter. 2001. "System Test Metrics on a Development-Intensive Project." Paper presented at the 11th Annual International Council on System Engineering (INCOSE) International Symposium. 1-5 July 2001. Melbourne, Australia. | ||

| − | + | Oppenheim, B., E. Murman, and D. Sekor. 2010. ''[[Lean Enablers for Systems Engineering]]''. Systems Engineering. 14(1). New York, NY, USA: Wiley and Sons, Inc. | |

| − | + | ||

| − | + | Roedler, G., D. Rhodes, H. Schimmoller, and C. Jones (eds.). 2010. ''[[Systems Engineering Leading Indicators Guide]],'' version 2.0. January 29, 2010, Published jointly by LAI, SEARI, INCOSE, and PSM. INCOSE-TP-2005-001-03. Accessed on September 14, 2011. Available at http://seari.mit.edu/documents/SELI-Guide-Rev2.pdf. |

| − | + | ||

| − | + | SEI. 2010. ''CMMI for Development,'' version 1.3. Pittsburgh, PA, USA: Software Engineering Institute/Carnegie Mellon University. CMU/SEI-2010-TR-033. Accessed on September 14, 2011. Available at http://www.sei.cmu.edu/reports/10tr033.pdf. | |

| − | + | ||

| − | + | Sheard, S. 2003. "The Lifecycle of a Silver Bullet." ''Crosstalk: The Journal of Defense Software Engineering.'' (July 2003). Accessed on September 14, 2011. Available at http://www.crosstalkonline.org/storage/issue-archives/2003/200307/200307-Sheard.pdf. |

| − | + | ||

| − | + | Sillitto, H. 2011. Panel on "People or Process, Which is More Important". Presented at the 21st Annual International Council on Systems Engineering (INCOSE) International Symposium. 20-23 June 2011. Denver, CO, USA. | |

| − | + | ||

| + | Woodcock, H. 2009. "Why Invest in Systems Engineering." INCOSE UK Chapter. Z-3 Guide, Issue 3.0. March 2009. Accessed on September 14, 2011. Available at http://www.incoseonline.org.uk/Documents/zGuides/Z3_Why_invest_in_SE.pdf. | ||

===Primary References=== | ===Primary References=== | ||

| − | Frenz, | + | Basili, V. 1992. "[[Software Modeling and Measurement: The Goal/Question/Metric Paradigm]]". College Park, MD, USA: University of Maryland. Technical Report CS-TR-2956. Accessed on August 28, 2012. Available at http://www.cs.umd.edu/~basili/publications/technical/T78.pdf. |

| + | |||

| + | Frenz, P., et al. 2010. ''[[Systems Engineering Measurement Primer]]: A Basic Introduction to Measurement Concepts and Use for Systems Engineering,'' version 2.0. San Diego, CA, USA: International Council on System Engineering (INCOSE). INCOSE–TP–2010–005–02. | ||

| + | |||

| + | Oppenheim, B., E. Murman, and D. Sekor. 2010. ''[[Lean Enablers for Systems Engineering]]''. Systems Engineering. 14(1). New York, NY, USA: Wiley and Sons, Inc. | ||

| − | Roedler,G., D | + | Roedler, G., D. Rhodes, H. Schimmoller, and C. Jones (eds.). 2010. ''[[Systems Engineering Leading Indicators Guide]],'' version 2.0. January 29, 2010, Published jointly by LAI, SEARI, INCOSE, PSM. INCOSE-TP-2005-001-03. Accessed on September 14, 2011. Available at http://seari.mit.edu/documents/SELI-Guide-Rev2.pdf. |

===Additional References=== | ===Additional References=== | ||

| − | + | Jelinski, Z. and P.B. Moranda. 1972. "Software Reliability Research". In W. Freiberger. (ed.), ''Statistical Computer Performance Evaluation.'' New York, NY, USA: Academic Press. p. 465-484. | |

| − | + | Alhazmi O.H. and Y.K. Malaiya. 2005. ''Modeling the Vulnerability Discovery Process''. 16th IEEE International Symposium on Software Reliability Engineering (ISSRE'05). 8-11 November 2005. Chicago, IL, USA. | |

| − | + | Alhazmi, O.H. and Y.K. Malaiya. 2006. "Prediction Capabilities of Vulnerability Discovery Models." Paper presented at Annual Reliability and Maintainability Symposium (RAMS). 23-26 January 2006. p 86-91. Newport Beach, CA, USA. Accessed on September 14, 2011. Available at http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=1677355&isnumber=34933. | |

---- | ---- | ||

| − | + | <center>[[Organizing Business and Enterprises to Perform Systems Engineering|< Previous Article]] | [[Enabling Businesses and Enterprises|Parent Article]] | [[Developing Systems Engineering Capabilities within Businesses and Enterprises|Next Article >]]</center> | |

| − | [[ | + | |

| + | <center>'''SEBoK v. 2.9, released 20 November 2023'''</center> | ||

| − | + | [[Category: Part 5]][[Category:Topic]] | |

| + | [[Category:Enabling Businesses and Enterprises]] | ||

Latest revision as of 22:02, 18 November 2023

Lead Authors: Hillary Sillitto, Alice Squires, Heidi Davidz; Contributing Authors: Art Pyster, Richard Beasley

At the project level, systems engineering (SE) measurement focuses on indicators of project and system success that are relevant to the project and its stakeholders. At the enterprise level there are additional concerns. SE governance should ensure that the performance of systems engineering within the enterprise adds value to the organization, is aligned to the organization's purpose, and implements the relevant parts of the organization's strategy.

For enterprises that are traditional businesses this is easier, because such organizations typically have more control levers than more loosely structured enterprises. The governance levers that can be used to improve performance include people (selection, training, culture, incentives), process, tools and infrastructure, and organization; therefore, the assessment of systems engineering performance in an enterprise should cover these dimensions.

Being able to aggregate high quality data about the performance of teams with respect to SE activities is certainly of benefit when trying to guide team activities. Having access to comparable data, however, is often difficult, especially in organizations that are relatively autonomous, use different technologies and tools, build products in different domains, have different types of customers, etc. Even if there is limited ability to reliably collect and aggregate data across teams, having a policy that consciously decides how the enterprise will address data collection and analysis is valuable.

Performance Assessment Measures

Typical measures for assessing SE performance of an enterprise include the following:

- Effectiveness of SE process

- Ability to mobilize the right resources at the right time for a new project or new project phase

- Quality of SE process outputs

- Timeliness of SE process outputs

- SE added value to project

- System added value to end users

- SE added value to organization

- Organization's SE capability development

- Individuals' SE competence development

- Resource utilization, current and forecast

- Productivity of systems engineers

- Deployment and consistent usage of tools and methods

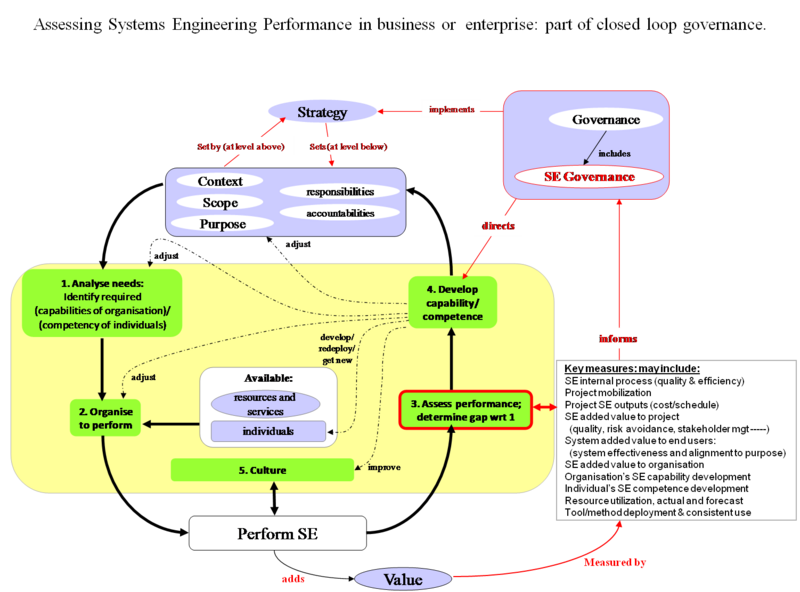

How Measures Fit in the Governance Process and Improvement Cycle

Since collecting data and analyzing it takes effort that is often significant, measurement is best done when its purpose is clear and is part of an overall strategy. The "goal, question, metric" paradigm (Basili 1992) should be applied, in which measurement data is collected to answer specific questions, the answer to which helps achieve a goal, such as decreasing the cost of creating a system architecture or increasing the value of a system to a particular stakeholder. Figure 1 shows one way in which appropriate measures inform enterprise level governance and drive an improvement cycle such as the Six Sigma DMAIC (Define, Measure, Analyze, Improve, Control) model.
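As an illustration of the goal, question, metric idea described above, the following minimal sketch links each metric to the question it answers and each question to a goal, so that no data is collected without a stated purpose. The class names, the example questions, and the metric names are hypothetical and are not taken from Basili (1992) or from the SEBoK.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Metric:
    name: str  # hypothetical metric identifier
    unit: str


@dataclass
class Question:
    text: str
    metrics: List[Metric] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: List[Question] = field(default_factory=list)

    def metrics_to_collect(self) -> List[str]:
        """Return each metric name once, in the order it is first justified by a question."""
        names: List[str] = []
        for question in self.questions:
            for metric in question.metrics:
                if metric.name not in names:
                    names.append(metric.name)
        return names


# Illustrative content only: the goal is one of the examples named in the text,
# the questions and metrics are assumptions made for this sketch.
effort = Metric("architecture_effort", "person-hours")
rework = Metric("architecture_rework_effort", "person-hours")
goal = Goal(
    statement="Decrease the cost of creating a system architecture",
    questions=[
        Question("How much effort does an architecture currently take?", [effort]),
        Question("How much of that effort is rework?", [effort, rework]),
    ],
)

print(goal.metrics_to_collect())
```

A structure like this makes it easy to spot a metric that no question asks for, which is one sign that its collection cost may not be justified.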

Discussion of Performance Assessment Measures

Assessing SE Internal Process (Quality and Efficiency)

A process is a "set of interrelated or interacting activities which transforms inputs into outputs." The SEI Capability Maturity Model Integration (CMMI) (SEI 2010) provides a structured way for businesses and enterprises to assess their SE processes. In the CMMI, a process area is a cluster of related practices in an area that, when implemented collectively, satisfies a set of goals considered important for making improvement in that area. There are CMMI models for acquisition, for development, and for services (SEI 2010, 11). CMMI defines how to assess individual process areas against Capability Levels on a scale from 0 to 3, and overall organizational maturity on a scale from 1 to 5.

Assessing Ability to Mobilize for a New Project or New Project Phase

Successful and timely project initiation and execution depend on having the right people available at the right time. If key resources are deployed elsewhere, they cannot be applied to new projects at the early stages when these resources make the most difference. Queuing theory shows that if a resource pool is running at or close to capacity, delays and queues are inevitable.
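The queuing effect can be sketched with the simplest textbook model, a single-server M/M/1 queue, in which the mean time a request waits before service is ρ/(1 − ρ) times the mean service time, where ρ is utilization. Treating a pool of scarce experts as one M/M/1 server is an assumption made here for illustration only; real resource pools have several servers and non-random arrivals, so the numbers indicate the shape of the curve rather than a prediction.

```python
def mean_queue_wait(utilization: float, mean_service_time: float = 1.0) -> float:
    """Mean waiting time in queue for an M/M/1 model (an assumed simplification).

    utilization is the fraction of capacity in use (rho); the result is in the
    same time unit as mean_service_time.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1) for a stable queue")
    return utilization / (1.0 - utilization) * mean_service_time


# Waiting time grows sharply as the pool approaches full utilization.
for rho in (0.5, 0.8, 0.9, 0.95):
    print(f"utilization {rho:.2f}: mean wait {mean_queue_wait(rho):.1f} service times")
```

Under this model, moving from 50% to 90% utilization raises the mean wait from about one service time to about nine, which is the trade-off between headroom and efficient utilization discussed in this section.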

The ability to manage teams through their lifecycle is an organizational capability that has substantial leverage on project and organizational efficiency and effectiveness. This includes being able to

- mobilize teams rapidly;

- establish and tailor an appropriate set of processes, metrics and systems engineering plans;

- support them to maintain a high level of performance;

- capitalize acquired knowledge; and

- redeploy team members expeditiously as the team winds down.

Specialists and experts are used to review processes, critique solutions, create novel solutions, and solve critical problems. They are usually a scarce resource. Few businesses have the luxury of having enough experts with all the necessary skills and behaviors on tap to allocate to all teams just when needed. If the skills are core to the business's competitive position or governance approach, then it makes sense to manage them through a governance process that ensures their skills are applied to greatest effect across the business.

Businesses typically find themselves balancing between having enough headroom to keep projects on schedule when things do not go as planned and utilizing resources efficiently.

Project SE Outputs (Cost, Schedule, Quality)

Many SE outputs in a project are produced early in the life cycle to enable downstream activities. Hidden defects in the early phase SE work products may not become fully apparent until the project hits problems in integration, verification and validation, or transition to operations. Intensive peer review and rigorous modeling are the normal ways of detecting and correcting defects in, and lack of coherence between, SE work products.

Leading indicators could be monitored at the organizational level to help direct support to projects or teams heading for trouble. For example, the INCOSE Leading Indicators report (Roedler et al. 2010) offers a set of indicators that is useful at the project level. Lean Sigma provides a tool for assessing benefit delivery throughout an enterprise value stream. Lean Enablers for Systems Engineering are now being developed (Oppenheim et al. 2010). An emerging good practice is to use lean value stream mapping to aid the optimization of project plans and process application.

In a mature organization, one good measure of SE quality is the number of defects that have to be corrected "out of phase"; i.e., at a later phase in the life cycle than the one in which the defect was introduced. This gives a good measure of process performance and the quality of SE outputs. Within a single project, the Work Product Approval, Review Action Closure, and Defect Error trends contain information that allows residual defect densities to be estimated (Roedler et al. 2010; Davies and Hunter 2001).
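A minimal sketch of the "out of phase" measure follows. It assumes each defect record carries the phase in which the defect was introduced and the phase in which it was corrected; the phase names and their ordering are hypothetical and would need to match the organization's own life cycle model.

```python
from typing import Iterable, Tuple

# Hypothetical life cycle phases, listed in chronological order.
PHASES = ["requirements", "architecture", "design", "integration", "verification"]


def out_of_phase_ratio(defects: Iterable[Tuple[str, str]]) -> float:
    """Fraction of defects corrected in a later phase than the one that introduced them.

    Each defect is a (phase_introduced, phase_corrected) pair.
    """
    records = list(defects)
    if not records:
        return 0.0
    order = {phase: index for index, phase in enumerate(PHASES)}
    late = sum(1 for introduced, corrected in records
               if order[corrected] > order[introduced])
    return late / len(records)


# Illustrative records only.
sample = [
    ("requirements", "requirements"),  # caught in phase
    ("requirements", "integration"),   # corrected out of phase
    ("design", "design"),              # caught in phase
    ("architecture", "verification"),  # corrected out of phase
]
print(out_of_phase_ratio(sample))
```

Tracking this ratio per project and over time gives one view of whether reviews and modeling are catching defects close to where they originate.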

Because of the leverage of front-end SE on overall project performance, it is important to focus on quality and timeliness of SE deliverables (Woodcock 2009).

SE Added Value to Project

SE that is properly managed and performed should add value to the project in terms of quality, risk avoidance, improved coherence, better management of issues and dependencies, right-first-time integration and formal verification, stakeholder management, and effective scope management. Because quality and quantity of SE are not the only factors that influence these outcomes, and because the effect is a delayed one (good SE early in the project pays off in later phases), there has been a significant amount of research to establish evidence to underpin the asserted benefits of SE in projects.

A summary of the main results is provided in the Economic Value of Systems Engineering article.

System Added Value to End Users

System added value to end users depends on system effectiveness and on alignment of the requirements and design to the end users' purpose and mission. System end users are often only involved indirectly in the procurement process.

Research on the value proposition of SE shows that good project outcomes do not necessarily correlate with good end user experience. Sometimes systems developers are discouraged from talking to end users because the acquirer is afraid of requirements creep. There is experience to the contrary – that end user involvement can result in more successful and simpler system solutions.

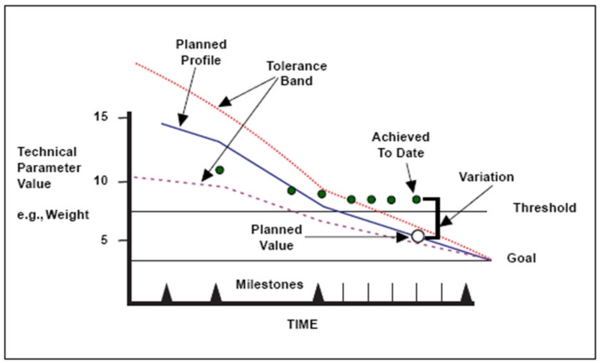

Two possible measures indicative of end user satisfaction are:

- The use of user-validated mission scenarios (both nominal and "rainy day" situations) to validate requirements, drive trade-offs and organize testing and acceptance;

- The use of technical performance measures (TPMs) to track critical performance and non-functional system attributes directly relevant to operational utility. The INCOSE SE Leading Indicators Guide (Roedler et al. 2010, 10 and 68) defines "technical measurement trends" as "Progress towards meeting the measure of effectiveness (MOE) / measure of performance (MOP) / Key Performance Parameters (KPPs) and technical performance measure (TPM)". A typical TPM progress plot is shown in Figure 2.
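The TPM tracking idea in the second measure can be sketched as a comparison of measured values against a planned profile with a tolerance band. This is a minimal sketch under stated assumptions: the milestone names, the mass figures, the fixed absolute tolerance, and the function name `tpm_status` are all hypothetical and are not taken from the INCOSE guide.

```python
def tpm_status(planned, tolerance, measured):
    """Flag each measurement as inside or outside the planned tolerance band.

    planned, measured: dicts mapping milestone name -> value
    tolerance: allowed absolute deviation from the planned value
    """
    status = {}
    for milestone, actual in measured.items():
        deviation = actual - planned[milestone]
        status[milestone] = "within band" if abs(deviation) <= tolerance else "out of band"
    return status


# Hypothetical planned profile and measurements for a mass TPM, in kilograms.
planned_mass_kg = {"SRR": 120.0, "PDR": 115.0, "CDR": 110.0}
measured_mass_kg = {"SRR": 122.0, "PDR": 121.0}

result = tpm_status(planned_mass_kg, tolerance=5.0, measured=measured_mass_kg)
for milestone, state in result.items():
    print(milestone, state)
```

A real TPM profile would normally use a tolerance band that narrows towards delivery; a constant band is used here only to keep the example short.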

SE Added Value to Organization

SE at the business/enterprise level aims to develop, deploy and enable effective SE to add value to the organization’s business. The SE function in the business/enterprise should understand the part it has to play in the bigger picture and identify appropriate performance measures - derived from the business or enterprise goals, and coherent with those of other parts of the organization - so that it can optimize its contribution.

Organization's SE Capability Development

The CMMI (SEI 2010) provides a means of assessing the process capability and maturity of businesses and enterprises. The higher CMMI levels are concerned with systemic integration of capabilities across the business or enterprise.

CMMI measures one important dimension of capability development, but CMMI maturity level is not a direct measure of business effectiveness unless the SE measures are properly integrated with business performance measures. These may include bid success rate, market share, position in value chain, development cycle time and cost, level of innovation and re-use, and the effectiveness with which SE capabilities are applied to the specific problem and solution space of interest to the business.

Individuals' SE Competence Development

Assessment of Individuals' SE competence development is described in Assessing Individuals.

Resource Utilization, Current and Forecast

Roedler et al. (2010, 58) offer various metrics for staff ramp-up and use on a project. Across the business or enterprise, key indicators include the overall manpower trend across the projects, the stability of the forward load, levels of overtime, the resource headroom (if any), staff turnover, level of training, and the period of time for which key resources are committed.

Deployment and Consistent Usage of Tools and Methods

It is common practice to use a range of software tools in an effort to manage the complexity of system development and in-service management. These range from simple office suites to complex logical, virtual reality and physics-based modeling environments.

Deployment of SE tools requires careful consideration of purpose, business objectives, business effectiveness, training, aptitude, method, style, infrastructure, support, integration of the tool with the existing or revised SE process, and approaches to ensure consistency, longevity and appropriate configuration management of information. Systems may be in service for upwards of 50 years, but storage media and file formats that are 10-15 years old are unreadable on most modern computers. It is desirable for many users to be able to work with a single common model; however, two engineers sitting next to each other and using the same tool may use sufficiently different modeling styles that they cannot work on or re-use each other's models.

License usage over time and across sites and projects is a key indicator of extent and efficiency of tool deployment. More difficult to assess is the consistency of usage. Roedler et al. (2010, 73) recommend metrics on "facilities and equipment availability".
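One simple way to turn license usage into an indicator is to compare peak concurrent use with the number of licenses held at each site. The sketch below assumes that peak-usage figures are available per site; the site names, the figures, and the 50% threshold for flagging under-used licenses are hypothetical.

```python
def license_utilization(peak_in_use, licenses_owned):
    """Return peak utilization (0.0 to 1.0) per site.

    peak_in_use: dict mapping site -> peak number of concurrently used licenses
    licenses_owned: dict mapping site -> number of licenses held
    Sites with no licenses are skipped to avoid division by zero.
    """
    return {
        site: peak_in_use.get(site, 0) / owned
        for site, owned in licenses_owned.items()
        if owned > 0
    }


# Illustrative figures only.
owned = {"site_a": 10, "site_b": 12, "site_c": 0}
peak = {"site_a": 8, "site_b": 3}

utilization = license_utilization(peak, owned)
# Assumed threshold: below 50% peak use suggests licenses could be redeployed.
under_used = sorted(site for site, ratio in utilization.items() if ratio < 0.5)
print(utilization, under_used)
```

An indicator of this kind shows the extent of deployment only; as noted above, it says nothing about whether the tool is used consistently.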

Practical Considerations

Assessment of SE performance at the business/enterprise level is complex and needs to consider soft issues as well as hard issues. Stakeholder concerns and satisfaction criteria may not be obvious or explicit. Clear and explicit reciprocal expectations and alignment of purpose, values, goals and incentives help to achieve synergy across the organization and avoid misunderstanding.

"What gets measured gets done." Because metrics drive behavior, it is important to ensure that metrics used to manage the organization reflect its purpose and values, and that they do not drive perverse behaviors (Roedler et al. 2010).

Process and measurement cost money and time, so it is important to get the right amount of process definition and the right balance of investment between process, measurement, people and skills. Any process flexible enough to allow innovation will also be flexible enough to allow mistakes. If process is seen as excessively restrictive or prescriptive, it may inhibit innovation and demotivate the innovators in an effort to prevent mistakes, leading to excessive risk avoidance.

It is possible for a process improvement effort to become an end in itself rather than a means to improve business performance (Sheard 2003). To guard against this, it is advisable to remain clearly focused on purpose (Blockley and Godfrey 2000) and on added value (Oppenheim et al. 2010) as well as to ensure clear and sustained top management commitment to driving the process improvement approach to achieve the required business benefits. Good process improvement is as much about establishing a performance culture as about process.

The systems engineering process is an essential complement to, and not a substitute for, individual skill, creativity, intuition, and judgment. Innovative people need to understand the process and how to make it work for them, and should neither ignore it nor be slaves to it. Systems engineering measurement shows where invention and creativity need to be applied. SE process creates a framework to leverage creativity and innovation to deliver results that surpass the capability of the creative individuals – results that are the emergent properties of process, organization, and leadership (Sillitto 2011).

References

Works Cited

Basili, V. 1992. "Software Modeling and Measurement: The Goal/Question/Metric Paradigm" Technical Report CS-TR-2956. University of Maryland: College Park, MD, USA. Accessed on August 28, 2012. Available at http://www.cs.umd.edu/~basili/publications/technical/T78.pdf.

Blockley, D. and P. Godfrey. 2000. Doing It Differently – Systems for Rethinking Construction. London, UK: Thomas Telford Ltd.

Davies, P. and N. Hunter. 2001. "System Test Metrics on a Development-Intensive Project." Paper presented at the 11th Annual International Council on Systems Engineering (INCOSE) International Symposium. 1-5 July 2001. Melbourne, Australia.

Oppenheim, B., E. Murman, and D. Sekor. 2010. Lean Enablers for Systems Engineering. Systems Engineering. 14(1). New York, NY, USA: Wiley and Sons, Inc.

Roedler, G., D. Rhodes, H. Schimmoller, and C. Jones (eds.). 2010. Systems Engineering Leading Indicators Guide, version 2.0. January 29, 2010, Published jointly by LAI, SEARI, INCOSE, and PSM. INCOSE-TP-2005-001-03. Accessed on September 14, 2011. Available at http://seari.mit.edu/documents/SELI-Guide-Rev2.pdf.

SEI. 2010. CMMI for Development, version 1.3. Pittsburgh, PA, USA: Software Engineering Institute/Carnegie Mellon University. CMU/SEI-2010-TR-033. Accessed on September 14, 2011. Available at http://www.sei.cmu.edu/reports/10tr033.pdf.

Sheard, S. 2003. "The Lifecycle of a Silver Bullet." Crosstalk: The Journal of Defense Software Engineering. (July 2003). Accessed on September 14, 2011. Available at http://www.crosstalkonline.org/storage/issue-archives/2003/200307/200307-Sheard.pdf.

Sillitto, H. 2011. Panel on "People or Process, Which is More Important". Presented at the 21st Annual International Council on Systems Engineering (INCOSE) International Symposium. 20-23 June 2011. Denver, CO, USA.

Woodcock, H. 2009. "Why Invest in Systems Engineering." INCOSE UK Chapter. Z-3 Guide, Issue 3.0. March 2009. Accessed on September 14, 2011. Available at http://www.incoseonline.org.uk/Documents/zGuides/Z3_Why_invest_in_SE.pdf.

Primary References

Basili, V. 1992. "Software Modeling and Measurement: The Goal/Question/Metric Paradigm". College Park, MD, USA: University of Maryland. Technical Report CS-TR-2956. Accessed on August 28, 2012. Available at http://www.cs.umd.edu/~basili/publications/technical/T78.pdf.

Frenz, P., et al. 2010. Systems Engineering Measurement Primer: A Basic Introduction to Measurement Concepts and Use for Systems Engineering, version 2.0. San Diego, CA, USA: International Council on Systems Engineering (INCOSE). INCOSE–TP–2010–005–02.

Oppenheim, B., E. Murman, and D. Sekor. 2010. Lean Enablers for Systems Engineering. Systems Engineering. 14(1). New York, NY, USA: Wiley and Sons, Inc.

Roedler, G., D. Rhodes, H. Schimmoller, and C. Jones (eds.). 2010. Systems Engineering Leading Indicators Guide, version 2.0. January 29, 2010, Published jointly by LAI, SEARI, INCOSE, PSM. INCOSE-TP-2005-001-03. Accessed on September 14, 2011. Available at http://seari.mit.edu/documents/SELI-Guide-Rev2.pdf.

Additional References

Jelinski, Z. and P.B. Moranda. 1972. "Software Reliability Research". In W. Freiberger. (ed.), Statistical Computer Performance Evaluation. New York, NY, USA: Academic Press. p. 465-484.

Alhazmi, O.H. and Y.K. Malaiya. 2005. "Modeling the Vulnerability Discovery Process." Paper presented at the 16th IEEE International Symposium on Software Reliability Engineering (ISSRE'05). 8-11 November 2005. Chicago, IL, USA.

Alhazmi, O.H. and Y.K. Malaiya. 2006. "Prediction Capabilities of Vulnerability Discovery Models." Paper presented at Annual Reliability and Maintainability Symposium (RAMS). 23-26 January 2006. p 86-91. Newport Beach, CA, USA. Accessed on September 14, 2011. Available at http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=1677355&isnumber=34933.