Difference between revisions of "Decision Management"

m (Text replacement - "SEBoK v. 2.9, released 13 November 2023" to "SEBoK v. 2.9, released 20 November 2023") |

|||

| (94 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

| − | The purpose of [[Decision | + | ---- |

| − | The | + | '''''Lead Author:''''' ''Ray Madachy'', '''''Contributing Authors:''''' ''Garry Roedler, Greg Parnell, Scott Jackson'' |

| + | ---- | ||

| + | Many systems engineering decisions are difficult because they include numerous stakeholders, multiple competing objectives, substantial uncertainty, and significant consequences. In these cases, good decision making requires a formal {{Term|Decision Management (glossary)|decision management}} process. The purpose of the decision management process is: | ||

| + | <blockquote>“…to provide a structured, analytical framework for objectively identifying, characterizing and evaluating a set of alternatives for a decision at any point in the life cycle and select the most beneficial course of action.”([[ISO/IEC/IEEE 15288]])</blockquote> | ||

| + | |||

| + | Decision situations ({{Term|Opportunity (glossary)|opportunities}}) are commonly encountered throughout a {{Term|Life Cycle (glossary)|system’s lifecycle}}. The decision management method most commonly employed by systems engineers is the trade study. Trade studies aim to define, measure, and assess shareholder and {{Term|stakeholder (glossary)|stakeholder}} {{Term|Value (glossary)|value}} to facilitate the decision maker’s search for an alternative that represents the best balance of competing objectives. By providing techniques for decomposing a trade decision into logical segments and then synthesizing the parts into a coherent whole, a decision management process allows the decision maker to work within human cognitive limits without oversimplifying the problem. Furthermore, by decomposing the overall decision problem, experts can provide assessments of alternatives in their area of expertise. | ||

| + | |||

| + | ==Decision Management Process== | ||

| + | The decision analysis process is depicted in Figure 1 below. The decision management process is based on several best practices, including: | ||

| + | *Utilizing the sound mathematical techniques of decision analysis for trade studies. Parnell (2009) provides a list of decision analysis concepts and techniques. | ||

| + | *Developing one master decision model, followed by its refinement, update, and use, as required for trade studies throughout the system life cycle. | ||

| + | *Using Value-Focused Thinking (Keeney 1992) to create better alternatives. | ||

| + | *Identifying uncertainty and assessing risks for each decision. | ||

| + | |||

| + | [[File:Decision_Mgt_Process_DM.png|thumb|center|500px|center|'''Figure 1. Decision Management Process (INCOSE DAWG 2013).''' Permission granted by Matthew Cilli who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | ||

| + | |||

| + | The center of the diagram shows the five trade space objectives (listed clockwise): Performance, Growth Potential, Schedule, Development & Procurement Costs, and Sustainment Costs. The ten blue arrows represent the decision management process activities, and the white text within the green ring represents SE process elements. Interactions are represented by the small, dotted green or blue arrows. The decision analysis process is iterative. | ||

| + | A hypothetical UAV decision problem is used to illustrate each of the activities in the following sections. | ||

| + | |||

| + | ===Framing and Tailoring the Decision=== | ||

| + | To ensure the decision team fully understands the decision context, the analyst should describe the system baseline, boundaries and interfaces. The decision context includes: the system definition, the life cycle stage, decision milestones, a list of decision makers and stakeholders, and available resources. The best practice is to identify a decision problem statement that defines the decision in terms of the system life cycle. | ||

| + | |||

| + | ===Developing Objectives and Measures=== | ||

| + | Defining how an important decision will be made is difficult. As Keeney (2002) puts it: | ||

| + | <blockquote>''Most important decisions involve multiple objectives, and usually with multiple-objective decisions, you can't have it all. You will have to accept less achievement in terms of some objectives in order to achieve more on other objectives. But how much less would you accept to achieve how much more?''</blockquote> | ||

| + | The first step is to develop objectives and measures using interviews and focus groups with subject matter experts (SMEs) and stakeholders. | ||

| + | For systems engineering trade-off analyses, stakeholder value often includes competing objectives of performance, development schedule, unit cost, support costs, and growth potential. For corporate decisions, shareholder value would also be added to this list. For performance, a functional decomposition can help generate a thorough set of potential objectives. Test this initial list of fundamental objectives by checking that each fundamental objective is essential and controllable and that the set of objectives is complete, non-redundant, concise, specific, and understandable (Edwards et al. 2007). Figure 2 provides an example of an objectives hierarchy. | ||

| + | |||

| + | [[File:Fund_Obj_Hierarchy_DM.png|thumb|center|650px|center|'''Figure 2. Fundamental Objectives Hierarchy (INCOSE DAWG 2013).''' Permission granted by Matthew Cilli who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | ||

| + | |||

| + | For each objective, a measure must be defined to assess the value of each alternative for that objective. A measure (also called an attribute, criterion, or metric) must be unambiguous, comprehensive, direct, operational, and understandable (Keeney & Gregory 2005). | ||

| + | A defining feature of multi-objective decision analysis is the transformation from measure space to value space. This transformation is performed by a value function, which shows returns to scale on the measure range. When creating a value function, the walk-away point on the measure scale (x-axis) must be ascertained and mapped to a 0 value on the value scale (y-axis). A walk-away point is the measure score at which the decision maker will walk away from an alternative regardless of how well it performs on other measures. Working with the user, the analyst then finds the measure score beyond which an alternative provides no additional value, labels it the "stretch goal" (ideal), and maps it to 100 (or 1 or 10) on the value scale (y-axis). Figure 3 provides the most common value curve shapes. The rationale for the shape of the value functions should be documented for traceability and defensibility (Parnell et al. 2011). | ||

| + | |||

| + | [[File:Value_Function_Example_DM.png|thumb|center|750px|center|'''Figure 3. Value Function Examples (INCOSE DAWG 2013).''' Permission granted by Matthew Cilli who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | ||
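
The mapping from measure space to value space described above can be sketched in a few lines of Python. This is a minimal illustration only: it assumes a straight-line value function between the walk-away point and the stretch goal, which is just one of the curve shapes in Figure 3, and the function name, the UAV measures, and all numbers are hypothetical.

```python
def linear_value(score, walk_away, stretch_goal, top=100.0):
    """Map a measure score to a value between 0 and `top` (hypothetical helper).

    The walk-away point maps to 0 and the stretch goal maps to `top`.
    A straight line between them is an assumption made for illustration.
    """
    if walk_away == stretch_goal:
        raise ValueError("walk-away point and stretch goal must differ")
    # Fraction of the distance from walk-away to stretch goal; the same
    # formula works for "more is better" and "less is better" measures.
    fraction = (score - walk_away) / (stretch_goal - walk_away)
    # No credit below the walk-away point, no extra credit past the stretch goal.
    fraction = max(0.0, min(1.0, fraction))
    return top * fraction


# More-is-better measure: hypothetical UAV endurance in hours.
print(linear_value(6.0, walk_away=2.0, stretch_goal=10.0))    # 50.0
# Less-is-better measure: hypothetical airframe weight in kg.
print(linear_value(30.0, walk_away=40.0, stretch_goal=20.0))  # 50.0
```

A curved value function (for example, one with diminishing returns) would replace the straight-line fraction with the elicited shape; the end points stay anchored at the walk-away point and the stretch goal.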

| + | |||

| + | The mathematics of multiple objective decision analysis (MODA) requires that the weights depend on both the importance of the measure and the range of the measure (walk-away point to stretch goal). A useful tool for determining priority weighting is the swing weight matrix (Parnell et al. 2011). For each measure, consider its importance by determining whether the measure corresponds to a defining, critical, or enabling function, and consider the gap between the current capability and the desired capability; finally, put the name of the measure in the appropriate cell of the matrix (Figure 4). The highest-priority measure is placed in the upper-left corner and assigned an unnormalized weight of 100. The unnormalized weights decrease monotonically to the right and down the matrix. Swing weights are then assessed by comparing each measure to the most important value measure or to another assessed measure. The swing weights are normalized to sum to one for the additive value model used to calculate value in a subsequent section. | ||

| + | |||

| + | [[File:Swing_Weight_Matrix_DM.png|thumb|center|750px|center|'''Figure 4. Swing Weight Matrix (INCOSE DAWG 2013).''' Permission granted by Gregory Parnell who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | ||
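
The final normalization step can be illustrated as follows. This is a sketch under stated assumptions: the measure names and the unnormalized weights are invented for the example, and the helper name is hypothetical.

```python
def normalize_swing_weights(unnormalized):
    """Scale unnormalized swing weights so that they sum to one."""
    total = sum(unnormalized.values())
    if total <= 0:
        raise ValueError("swing weights must have a positive total")
    return {measure: weight / total for measure, weight in unnormalized.items()}


# Hypothetical assessments: the top-left measure gets 100, the rest are
# judged relative to it (values chosen only for illustration).
raw_weights = {"endurance": 100, "payload": 75, "detectability": 25}
weights = normalize_swing_weights(raw_weights)
print(weights)  # {'endurance': 0.5, 'payload': 0.375, 'detectability': 0.125}
```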

| + | |||

| + | ===Generating Creative Alternatives=== | ||

| + | |||

| + | To help generate a creative and comprehensive set of alternatives that span the decision space, consider developing an alternative generation table (also called a morphological box) (Buede 2009; Parnell et al. 2011). It is a best practice to establish a meaningful product structure for the system and to report it in all decision presentations (Figure 5). | ||

| + | |||

| + | [[File:Descript_of_Alt_DM.png|thumb|center|750px|center|'''Figure 5. Descriptions of Alternatives (INCOSE DAWG 2013).''' Permission granted by Matthew Cilli who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | ||
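
An alternative generation table can be thought of as a set of design choices, each with a few options, from which candidate alternatives are assembled. The sketch below enumerates every combination with `itertools.product`; the design choices and options are hypothetical, and in practice a team would select a small, feasible subset that spans the decision space rather than evaluate every combination.

```python
from itertools import product

# Hypothetical design choices for a UAV; each row of the table lists its options.
design_choices = {
    "airframe": ["fixed wing", "rotary wing"],
    "propulsion": ["electric", "piston"],
    "sensor": ["EO", "EO/IR", "radar"],
}


def enumerate_alternatives(table):
    """Return every combination of one option per design choice."""
    names = list(table)
    return [dict(zip(names, combo)) for combo in product(*(table[n] for n in names))]


candidates = enumerate_alternatives(design_choices)
print(len(candidates))  # 12
print(candidates[0])    # {'airframe': 'fixed wing', 'propulsion': 'electric', 'sensor': 'EO'}
```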

| − | + | ===Assessing Alternatives via Deterministic Analysis=== | |

| − | + | With objectives and measures established and alternatives defined, the decision team should engage SMEs, equipped with operational data, test data, simulations, models, and expert knowledge, to score each alternative on each measure. Scores are best captured on scoring sheets for each alternative/measure combination, which document the source and rationale. Figure 6 provides a summary of the scores. |

| − | |||

| − | + | [[File:ALT_Scores_DM.png|thumb|center|750px|center|'''Figure 6. Alternative Scores (INCOSE DAWG 2013).''' Permission granted by Richard Swanson who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | Note that in addition to identified alternatives, the score matrix includes a row for the ideal alternative. The ideal is a tool for value-focused thinking, which will be covered later. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | == | + | ===Synthesizing Results=== |

| − | |||

| − | + | Next, one can transform the scores into a value table, by using the value functions developed previously. A color heat map can be useful to visualize value tradeoffs between alternatives and identify where alternatives need improvement (Figure 7). | |

| − | [[File: | + | [[File:Value_Scorecard_w_Heat_Map_DM.png|thumb|center|850px|center|'''Figure 7. Value Scorecard with Heat Map (INCOSE DAWG 2013).''' Permission granted by Richard Swanson who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] |

| − | The | + | The additive value model uses the following equation to calculate each alternative’s value: |

| − | + | [[File:Eq_1.jpg|200px]] | |

| − | + | where | |

| − | + | [[File:Eq_2.jpg|400px]] | |
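
As a rough numerical illustration, the sketch below assumes the usual additive form, in which an alternative's total value is the sum over all measures of the normalized swing weight times the single-measure value. The function name, weights, and values are hypothetical and are not taken from the figures.

```python
def additive_value(weights, values):
    """Total value as the weighted sum of single-measure values.

    `weights` are normalized swing weights (assumed to sum to one);
    `values` are the outputs of the value functions for one alternative.
    """
    if set(weights) != set(values):
        raise ValueError("weights and values must cover the same measures")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    return sum(weights[m] * values[m] for m in weights)


# Hypothetical numbers for one UAV alternative.
weights = {"endurance": 0.5, "payload": 0.375, "detectability": 0.125}
values = {"endurance": 50.0, "payload": 80.0, "detectability": 20.0}
print(additive_value(weights, values))  # 57.5
```

The individual products (25.0, 30.0, and 2.5 here) are the weighted contributions that a value component chart stacks for each alternative.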

| − | |||

| − | + | The value component chart (Figure 8) shows the total value and the weighted value measure contribution of each alternative (Parnell et al. 2011). | |

| − | + | [[File:Value_Comp_Graph_DM.png|thumb|center|700px|center|'''Figure 8. Value Component Graph (INCOSE DAWG 2013).''' Permission granted by Richard Swanson who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | |

| − | + | The heart of a decision management process for systems engineering trade-off analysis is the ability to assess all dimensions of shareholder and stakeholder value. The stakeholder value scatter plot in Figure 9 shows five dimensions for all alternatives: unit cost, performance, development risk, growth potential, and operation and support costs. |

| − | + | [[File:Ex_Stakeholder_Value_Scat_DM.png|thumb|center|700px|center|'''Figure 9. Example of a Stakeholder Value Scatterplot (INCOSE DAWG 2013).''' Permission granted by Richard Swanson who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | |

| − | + | Each system alternative is represented by a scatter plot marker (Figure 9). An alternative’s unit cost and performance value are indicated by x and y positions, respectively. An alternative’s development risk is indicated by the color of the marker (green = low, yellow = medium, red = high), while the growth potential is shown as the number of hats above the circular marker (1 hat = low, 2 hats = moderate, 3 hats = high). |

| − | + | ===Identifying Uncertainty and Conducting Probabilistic Analysis=== | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | As part of the assessment, the SME should discuss the potential uncertainty of the independent variables, that is, the variables that affect one or more scores. Often the SME can assess lower, nominal, and upper bounds by assuming low, moderate, and high performance. Using these data, a Monte Carlo Simulation summarizes the impact of the uncertainties and can identify the uncertainties that have the most impact on the decision. |
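
A three-point assessment of this kind can be propagated through a value function with a small Monte Carlo loop. The sketch below is illustrative only: it assumes a triangular distribution over the (lower, nominal, upper) bounds, a linear value function, and hypothetical endurance figures. None of these choices come from the source.

```python
import random
import statistics


def endurance_value(hours):
    """Hypothetical linear value function: 2 h is walk-away, 10 h is the stretch goal."""
    return 100.0 * max(0.0, min(1.0, (hours - 2.0) / 8.0))


def simulate_value(bounds, value_fn, trials=10_000, seed=0):
    """Sample a score from a triangular distribution and convert it to value.

    `bounds` is (lower, nominal, upper); the triangular shape is an assumption.
    """
    low, nominal, high = bounds
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # random.triangular takes (low, high, mode), so the nominal goes last.
    return [value_fn(rng.triangular(low, high, nominal)) for _ in range(trials)]


samples = simulate_value((4.0, 6.0, 9.0), endurance_value)
cut_points = statistics.quantiles(samples, n=20)  # cut points every 5 percent
print("mean value:", round(statistics.mean(samples), 1))
print("5th to 95th percentile:", round(cut_points[0], 1), "to", round(cut_points[-1], 1))
```

Repeating the run with one uncertainty at a time held at its nominal score is one simple way to see which uncertainty contributes most to the spread.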

| − | + | ===Assessing Impact of Uncertainty - Analyzing Risk and Sensitivity=== |

| − | + | Decision analysis uses many forms of sensitivity analysis, including line diagrams, tornado diagrams, and waterfall diagrams, and several uncertainty analyses, including Monte Carlo Simulation, decision trees, and influence diagrams (Parnell et al. 2013). A line diagram is used to show the sensitivity to the swing weight judgment (Parnell et al. 2011). Figure 10 shows the results of a Monte Carlo Simulation of performance value. |

| − | + | [[File:Uncertainty_on_Perf_Value_from_Monte_DM.png|thumb|center|700px|center|'''Figure 10. Uncertainty on Performance Value from Monte Carlo Simulation (INCOSE DAWG 2013).''' Permission granted by Matthew Cilli who prepared image for the INCOSE Decision Analysis Working Group (DAWG). All other rights are reserved by the copyright owner.]] | |
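
The data behind a line diagram for swing weight sensitivity can be produced by sweeping one weight from 0 to 1 and rescaling the remaining weights proportionally so that the total stays at one. The sketch below assumes an additive value model; the proportional rescaling rule, the alternatives, and all numbers are hypothetical.

```python
def reweight(weights, measure, new_weight):
    """Set one weight and rescale the others proportionally so the sum stays 1."""
    others = {m: w for m, w in weights.items() if m != measure}
    scale = (1.0 - new_weight) / sum(others.values())
    adjusted = {m: w * scale for m, w in others.items()}
    adjusted[measure] = new_weight
    return adjusted


def total_value(weights, values):
    """Additive value: weighted sum of single-measure values."""
    return sum(weights[m] * values[m] for m in weights)


# Hypothetical baseline weights and value scores for two alternatives.
weights = {"endurance": 0.5, "payload": 0.25, "detectability": 0.25}
alternatives = {
    "A": {"endurance": 90.0, "payload": 30.0, "detectability": 30.0},
    "B": {"endurance": 40.0, "payload": 80.0, "detectability": 80.0},
}

# Each row is one point on the line diagram for each alternative.
for w in (0.0, 0.25, 0.5, 0.75, 1.0):
    adjusted = reweight(weights, "endurance", w)
    row = {name: round(total_value(adjusted, vals), 1) for name, vals in alternatives.items()}
    print(w, row)
```

With these made-up numbers the two lines cross at an endurance weight of 0.5, so the preferred alternative depends on whether the assessed weight falls above or below that point.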

| − | + | ===Improving Alternatives=== | |

| − | + | Mining the data generated for the alternatives will likely reveal opportunities to modify some design choices to claim untapped value and/or reduce risk. Taking advantage of initial findings to generate new and creative alternatives starts the process of transforming the decision process from "alternative-focused thinking" to "value-focused thinking" (Keeney 1993). | |

| − | |||

| − | === | + | ===Communicating Tradeoffs=== |

| − | |||

| − | + | This is the point in the process where the decision analysis team identifies key observations about tradeoffs and the important uncertainties and risks. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | + | ===Presenting Recommendations and Implementing Action Plan=== |

| − | |||

| − | + | It is often helpful to describe the recommendation(s) in the form of a clearly-worded, actionable task-list in order to increase the likelihood of the decision implementation. Reports are important for historical traceability and future decisions. Take the time and effort to create a comprehensive, high-quality report detailing study findings and supporting rationale. Consider static paper reports augmented with dynamic hyper-linked e-reports. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ==The Cognitive Bias Effect on Decisions== | |

| + | Research by Kahneman (2011) and Thaler and Sunstein (2008) has concluded that [[Cognitive Bias (glossary)|cognitive bias]] can seriously distort decisions made by any decision maker. Both Kahneman and Thaler were awarded the Nobel prize for their work. These distorted decisions have contributed to major catastrophes, such as Challenger and Columbia. Other sources attributing major catastrophes to cognitive bias are Murata, Nakamura, and Karwowski (2015) and Murata (2017). | ||

| + | |||

| + | Kahneman (2011) and Thaler and Sunstein (2008) have identified a large number of individual biases, the best known of which is the confirmation bias: the tendency to interpret new evidence as confirmation of one's existing beliefs or theories. Regarding mitigation of these biases, there is general agreement that self-mitigation by the decision-maker is not feasible for most biases. Thaler and Sunstein (2008) provide methods to influence the mitigation of most biases. They refer to these influences as “nudges”. | ||

| + | |||

| + | The consideration of cognitive biases in systems engineering is discussed by Jackson (2017), Jackson and Harel (2017), and Jackson (2018). The primary theme of these references is that rational decisions are rarely possible and that cognitive bias must be taken into account. | ||

| + | |||

| + | ===Decisions with Cognitive Bias=== | ||

| + | According to INCOSE (2015), ideal decisions are made while “objectively identifying, characterizing, and evaluating a set of alternatives for a decision…” Research in the field of behavioral economics has shown that these decisions can be distorted by a phenomenon known as cognitive bias. Furthermore, most decision makers are unaware of these biases. The literature also provides methods for mitigating these biases. | ||

| + | |||

| + | According to Haselton, Nettle, and Andrews (2005, p. 2), a cognitive bias represents a situation in which “human cognition reliably produces representations that are systematically distorted compared to some aspect of objective reality.” Cognitive biases are typically stimulated by emotion and prior belief. The literature reveals large numbers of cognitive biases, of which the following three are typical: | ||

| + | #The rankism bias. According to Fuller (2011), rankism is simply the idea that persons of higher rank in an organization are better able to assert their authority over persons of lower rank regardless of the decision involved. Rankism frequently occurs in aircraft cockpits. According to McCreary et al. (1998), rankism was a factor in the famous Tenerife disaster. | ||

| + | #The complacency bias. According to Leveson (1995, pp. 54-55), complacency is the disregard for safety and the belief that current safety measures are adequate. Leveson also reports that complacency played a role in the Three Mile Island and Bhopal disasters. | ||

| + | #The optimism bias. According to Leveson (1995, pp. 54-55), the physicist Richard Feynman stated that NASA “exaggerates the reliability of the system.” This is an example of the optimism bias. | ||

| + | |||

| + | ===Mitigation of Cognitive Bias=== | ||

| + | Various sources have suggested methods to mitigate the effects of cognitive bias. Following are some of the major ones. | ||

| + | #Independent Review. The idea of independent review is that advice on decisions should come from an outside body, which the Columbia Accident Investigation Board (CAIB) (NASA 2003, 227) calls the Independent Technical Authority (ITA). This authority must be both organizationally and financially independent of the program in question. That is, the ITA cannot be subordinate to the program manager. | ||

| + | #Crew Resource Management. Following a period of high accident rates, several airlines adopted the crew resource management (CRM) method. The primary purposes of this method are, first, to ensure that all crew members do their jobs properly and, second, to ensure that they communicate with the pilot effectively when they have a concern. The impetus for this method was the judgment that many pilots were experiencing the rankism bias or were preoccupied with other tasks and simply did not understand the concerns of the other crew members. This strategy has been successful, and the accident rate has fallen. | ||

| + | #The Premortem. Kahneman (2011, pp. 264-265) suggests this method of nudging in an organizational context. This method, like others, requires a certain amount of willingness on the part of the decision-maker to participate. It calls for decision-makers to surround themselves with trusted experts in advance of major decisions. According to Kahneman, the primary job of the experts is to present the negative argument against any decision. For example, the experts might argue that the decision-maker should not authorize the launch now, but perhaps later. | ||

==References== | ==References== | ||

===Works Cited=== | ===Works Cited=== | ||

| − | + | Buede, D.M. 2009. ''The engineering design of systems: Models and methods''. 2nd ed. Hoboken, NJ: John Wiley & Sons Inc. | |

| + | |||

| + | Edwards, W., R.F. Miles Jr., and D. Von Winterfeldt. 2007. ''Advances In Decision Analysis: From Foundations to Applications.'' New York, NY: Cambridge University Press. | ||

| + | |||

| + | Fuller, R.W. 2011. "What is Rankism and Why Do We "Do" It?" ''Psychology Today''. 25 May 2011. https://www.psychologytoday.com/us/blog/somebodies-and-nobodies/201002/what-is-rankism-and-why-do-we-do-it | ||

| − | + | Haselton, M.G., D. Nettle, and P.W. Andrews. 2005. "The Evolution of Cognitive Bias." ''Handbook of Psychology''. | |

| − | + | INCOSE. 2015. ''Systems Engineering Handbook,'' 4th Ed. Edited by D.D. Walden, G.J. Roedler, K.J. Forsberg, R.D. Hamelin, and T.M. Shortell. San Diego, CA: International Council on Systems Engineering (INCOSE). | |

| − | + | ISO/IEC/IEEE. 2015. ''[[ISO/IEC/IEEE 15288|Systems and Software Engineering -- System Life Cycle Processes]]''. Geneva, Switzerland: International Organization for Standardization / International Electrotechnical Commission / Institute of Electrical and Electronics Engineers. ISO/IEC/IEEE 15288:2015. |

| − | + | Kahneman, D. 2011. ''Thinking, Fast and Slow.'' New York, NY: Farrar, Straus, and Giroux. |

| − | + | Keeney, R.L. and H. Raiffa. 1976. ''Decisions with Multiple Objectives - Preferences and Value Tradeoffs.'' New York, NY: Wiley. | |

| − | + | Keeney, R.L. 1992. ''Value-Focused Thinking: A Path to Creative Decision-Making.'' Cambridge, MA: Harvard University Press. | |

| − | + | Keeney, R.L. 1993. "Creativity in MS/OR: Value-focused thinking—Creativity directed toward decision making." ''Interfaces'', 23(3), p.62–67. | |

| − | + | Leveson, N. 1995. ''Safeware: System Safety and Computers''. Reading, MA: Addison Wesley. | |

| − | + | McCreary, J., M. Pollard, K. Stevenson, and M.B. Wilson. 1998. "Human Factors: Tenerife Revisited." ''Journal of Air Transportation World Wide''. 3(1). | |

| − | + | Murata, A. 2017. "Cultural Difference and Cognitive Biases as a Trigger of Critical Crashes or Disasters - Evidence from Case Studies of Human Factors Analysis." ''Journal of Behavioral and Brain Science.'' 7:299-415. | |

| − | + | Murata, A., T. Nakamura, and W. Karwowski. 2015. "Influences of Cognitive Biases in Distorting Decision Making and Leading to Critical Unfavorable Incidents." ''Safety.'' 1:44-58. | |

| − | + | Parnell, G.S. 2009. "Decision Analysis in One Chart," ''Decision Line, Newsletter of the Decision Sciences Institute''. May 2009. | |

| − | + | Parnell, G.S., P.J. Driscoll, and D.L. Henderson (eds). 2011. ''Decision Making for Systems Engineering and Management'', 2nd ed. Wiley Series in Systems Engineering. Hoboken, NJ: Wiley & Sons Inc. |

| − | + | Parnell, G.S., T. Bresnick, S. Tani, and E. Johnson. 2013. ''Handbook of Decision Analysis.'' Hoboken, NJ: Wiley & Sons. | |

| − | + | Thaler, R.H. and C.R. Sunstein. 2008. ''Nudge: Improving Decisions About Health, Wealth, and Happiness.'' New York, NY: Penguin Books. |

===Primary References=== | ===Primary References=== | ||

| − | + | Buede, D.M. 2004. "[[On Trade Studies]]." Proceedings of the 14th Annual International Council on Systems Engineering International Symposium, 20-24 June, 2004, Toulouse, France. | |

| + | |||

| + | Keeney, R.L. 2004. "[[Making Better Decision Makers]]." ''Decision Analysis'', 1(4), pp.193–204. | ||

| − | + | Keeney, R.L. & R.S. Gregory. 2005. "[[Selecting Attributes to Measure the Achievement of Objectives]]". ''Operations Research'', 53(1), pp.1–11. | |

| − | + | Kirkwood, C.W. 1996. ''[[Strategic Decision Making]]: Multiobjective Decision Analysis with Spreadsheets.'' Belmont, California: Duxbury Press. | |

| − | + | ===Additional References=== | |

| + | Buede, D.M. and R.W. Choisser. 1992. "Providing an Analytic Structure for Key System Design Choices." ''Journal of Multi-Criteria Decision Analysis'', 1(1), pp.17–27. | ||

| − | + | Felix, A. 2004. "Standard Approach to Trade Studies." Proceedings of the International Council on Systems Engineering (INCOSE) Mid-Atlantic Regional Conference, November 2-4 2004, Arlington, VA. | |

| − | + | Felix, A. 2005. "How the Pro-Active Program (Project) Manager Uses a Systems Engineer’s Trade Study as a Management Tool, and not just a Decision Making Process." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 10-15, 2005, Rochester, NY. | |

| − | + | Jackson, S. 2017. "Irrationality in Decision Making: A Systems Engineering Perspective." INCOSE ''Insight'', 74. | |

| − | |||

| − | + | Jackson, S. 2018. "Cognitive Bias: A Game-Changer for Decision Management?" INCOSE ''Insight'', 41-42. | |

| − | + | Jackson, S. and A. Harel. 2017. "Systems Engineering Decision Analysis can benefit from Added Consideration of Cognitive Sciences." ''Systems Engineering.'' 55, 19 July. | |

| − | + | Miller, G.A. 1956. "The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information." ''Psychological Review'', 63(2), p.81. | |

| − | + | Ross, A.M. and D.E. Hastings. 2005. "Tradespace Exploration Paradigm." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 10-15, 2005, Rochester, NY. | |

| − | + | Sproles, N. 2002. "Formulating Measures of Effectiveness." ''Systems Engineering'', 5(4), pp. 253-263. |

| − | + | Silletto, H. 2005. "Some Really Useful Principles: A new look at the scope and boundaries of systems engineering." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 10-15, 2005, Rochester, NY. | |

| − | + | Ullman, D.G. and B.P. Spiegel. 2006. "Trade Studies with Uncertain Information." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 9-13, 2006, Orlando, FL. | |

---- | ---- | ||


| − | + | <center>'''SEBoK v. 2.9, released 20 November 2023'''</center> | |

[[Category: Part 3]][[Category:Topic]] | [[Category: Part 3]][[Category:Topic]] | ||

[[Category:Systems Engineering Management]] | [[Category:Systems Engineering Management]] | ||

Latest revision as of 22:22, 18 November 2023

Lead Author: Ray Madachy, Contributing Authors: Garry Roedler, Greg Parnell, Scott Jackson

Many systems engineering decisions are difficult because they include numerous stakeholders, multiple competing objectives, substantial uncertainty, and significant consequences. In these cases, good decision making requires a formal decision management process. The purpose of the decision management process is:

“…to provide a structured, analytical framework for objectively identifying, characterizing and evaluating a set of alternatives for a decision at any point in the life cycle and select the most beneficial course of action.”(ISO/IEC/IEEE 15288)

Decision situations (opportunities) are commonly encountered throughout a system’s lifecycle. The decision management method most commonly employed by systems engineers is the trade study. Trade studies aim to define, measure, and assess shareholder and stakeholder value to facilitate the decision maker’s search for an alternative that represents the best balance of competing objectives. By providing techniques for decomposing a trade decision into logical segments and then synthesizing the parts into a coherent whole, a decision management process allows the decision maker to work within human cognitive limits without oversimplifying the problem. Furthermore, by decomposing the overall decision problem, experts can provide assessments of alternatives in their area of expertise.

Decision Management Process

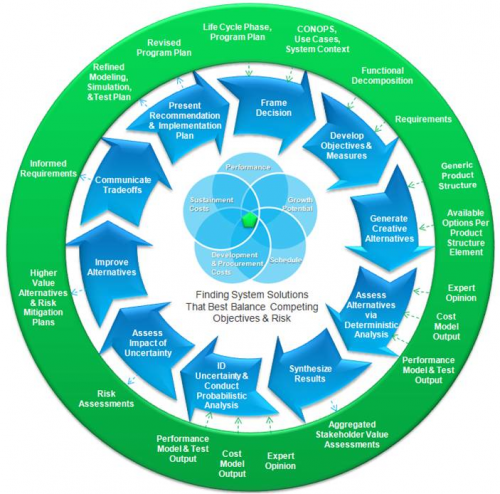

The decision analysis process is depicted in Figure 1 below. The decision management process is based on several best practices, including:

- Utilizing sound mathematical technique of decision analysis for trade studies. Parnell (2009) provided a list of decision analysis concepts and techniques.

- Developing one master decision model, followed by its refinement, update, and use, as required for trade studies throughout the system life cycle.

- Using Value-Focused Thinking (Keeney 1992) to create better alternatives.

- Identifying uncertainty and assessing risks for each decision.

The center of the diagram shows the five trade space objectives (listed clockwise): Performance, Growth Potential, Schedule, Development & Procurement Costs, and Sustainment Costs . The ten blue arrows represent the decision management process activities and the white text within the green ring represents SE process elements. Interactions are represented by the small, dotted green or blue arrows. The decision analysis process is an iterative process. A hypothetical UAV decision problem is used to illustrate each of the activities in the following sections.

Framing and Tailoring the Decision

To ensure the decision team fully understands the decision context, the analyst should describe the system baseline, boundaries and interfaces. The decision context includes: the system definition, the life cycle stage, decision milestones, a list of decision makers and stakeholders, and available resources. The best practice is to identify a decision problem statement that defines the decision in terms of the system life cycle.

Developing Objectives and Measures

Defining how an important decision will be made is difficult. As Keeney (2002) puts it:

Most important decisions involve multiple objectives, and usually with multiple-objective decisions, you can't have it all. You will have to accept less achievement in terms of some objectives in order to achieve more on other objectives. But how much less would you accept to achieve how much more?

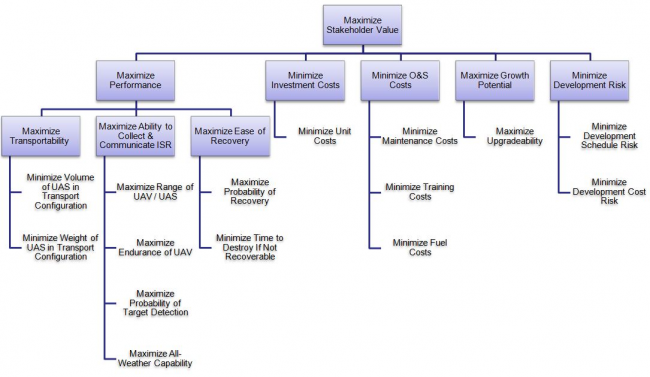

The first step is to develop objectives and measures using interviews and focus groups with subject matter experts (SMEs) and stakeholders. For systems engineering trade-off analyses, stakeholder value often includes competing objectives of performance, development schedule, unit cost, support costs, and growth potential. For corporate decisions, shareholder value would also be added to this list. For performance, a functional decomposition can help generate a thorough set of potential objectives. Test this initial list of fundamental objectives by checking that each fundamental objective is essential and controllable and that the set of objectives is complete, non-redundant, concise, specific, and understandable (Edwards et al. 2007). Figure 2 provides an example of an objectives hierarchy.

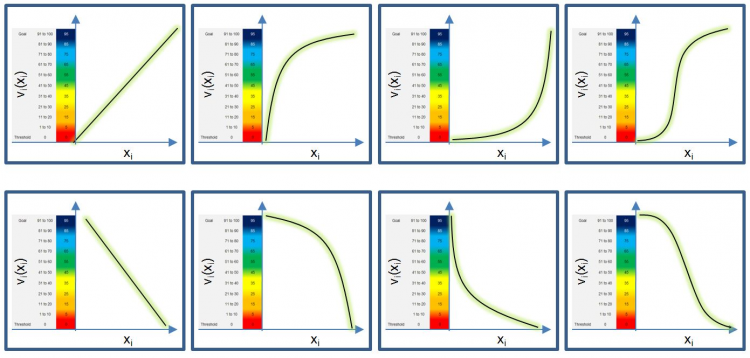

For each objective, a measure must be defined to assess the value of each alternative for that objective. A measure (also called an attribute, criterion, or metric) must be unambiguous, comprehensive, direct, operational, and understandable (Keeney & Gregory 2005). A defining feature of multi-objective decision analysis is the transformation from measure space to value space. This transformation is performed by a value function, which shows returns to scale on the measure range. When creating a value function, the walk-away point on the measure scale (x-axis) must be ascertained and mapped to a 0 value on the value scale (y-axis). A walk-away point is the measure score at which the decision maker will walk away from the alternative regardless of how well it performs on other measures. Working with the user, the analyst also finds the measure score beyond which an alternative provides no additional value, labels it the "stretch goal" (ideal), and maps it to 100 (or 1 or 10) on the value scale (y-axis). Figure 3 provides the most common value curve shapes. The rationale for the shape of the value functions should be documented for traceability and defensibility (Parnell et al. 2011).
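As a minimal sketch of the measure-to-value transformation, the following assumes the simplest curve shape, a straight line between the walk-away point (value 0) and the stretch goal (value 100). The function name `linear_value` and the UAV endurance numbers are hypothetical and are not taken from the source example.

```python
def linear_value(score, walk_away, stretch_goal):
    """Map a measure score to a 0-100 value, linearly between the two anchors.

    Works for increasing measures (walk_away < stretch_goal) and for
    decreasing measures such as cost (walk_away > stretch_goal).
    """
    if walk_away == stretch_goal:
        raise ValueError("walk-away point and stretch goal must differ")
    fraction = (score - walk_away) / (stretch_goal - walk_away)
    # Scores beyond the stretch goal add no further value; scores at or
    # past the walk-away point earn none.
    fraction = max(0.0, min(1.0, fraction))
    return 100.0 * fraction


# Hypothetical measure: endurance in hours, walk-away 4 h, stretch goal 12 h.
print(linear_value(8, walk_away=4, stretch_goal=12))  # 50.0
```

In practice an alternative that falls short of a walk-away point would normally be screened out rather than scored as zero, and concave, convex, or S-shaped curves would replace the straight line where the documented rationale supports them.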

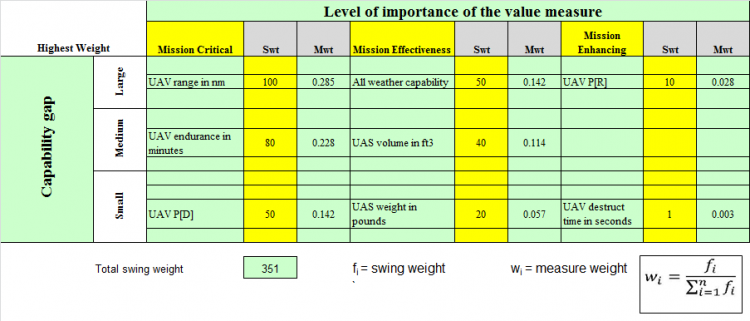

The mathematics of multiple objective decision analysis (MODA) requires that the weights depend on both the importance of the measure and the range of the measure (walk-away point to stretch goal). A useful tool for determining priority weighting is the swing weight matrix (Parnell et al. 2011). For each measure, consider its importance by determining whether the measure corresponds to a defining, critical, or enabling function, and consider the gap between the current capability and the desired capability; then put the name of the measure in the appropriate cell of the matrix (Figure 4). The highest priority weighting is placed in the upper-left corner and assigned an unnormalized weight of 100. The unnormalized weights decrease monotonically to the right and down the matrix. Swing weights are then assessed by comparing them to the most important value measure or another assessed measure. The swing weights are normalized to sum to one for the additive value model used to calculate value in a subsequent section.
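The normalization step can be sketched in a few lines. The measure names and unnormalized weights below are hypothetical; only the rule that the top-left cell receives 100 and that the normalized weights sum to one comes from the text.

```python
def normalize_swing_weights(unnormalized):
    """Scale unnormalized swing weights so that they sum to one."""
    total = sum(unnormalized.values())
    if total <= 0:
        raise ValueError("swing weights must have a positive sum")
    return {measure: weight / total for measure, weight in unnormalized.items()}


# Hypothetical assessed swing weights; the top-left matrix cell is 100.
swing = {"endurance": 100, "payload": 60, "range": 40}
weights = normalize_swing_weights(swing)
for measure, weight in weights.items():
    print(f"{measure}: {weight:.2f}")
```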

Generating Creative Alternatives

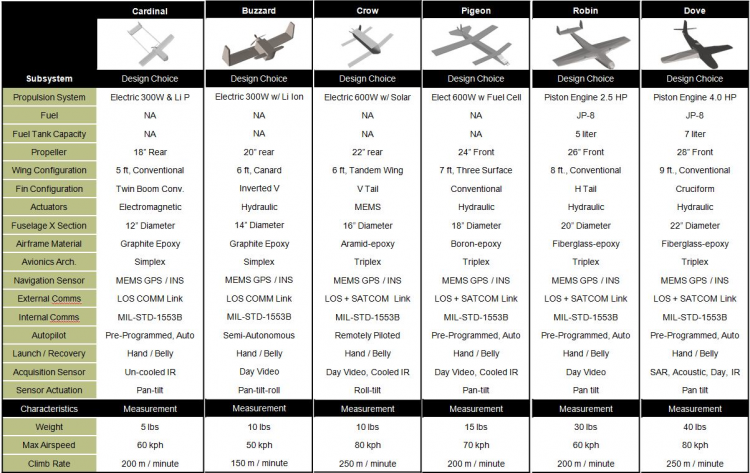

To help generate a creative and comprehensive set of alternatives that span the decision space, consider developing an alternative generation table (also called a morphological box) (Buede 2009; Parnell et al. 2011). It is a best practice to establish a meaningful product structure for the system and to report it in all decision presentations (Figure 5).
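One way to see how large the space behind an alternative generation table is would be to enumerate every combination of design choices. The sketch below does this with `itertools.product`; the design dimensions and options are hypothetical placeholders, not the contents of Figure 5.

```python
from itertools import product


def enumerate_alternatives(table):
    """Return every combination of one option per design dimension."""
    dimensions = list(table)
    return [dict(zip(dimensions, combo)) for combo in product(*table.values())]


# Hypothetical morphological box for a UAV.
table = {
    "airframe": ["fixed wing", "rotary wing"],
    "propulsion": ["electric", "piston"],
    "sensor": ["EO", "EO/IR", "radar"],
}
alternatives = enumerate_alternatives(table)
print(len(alternatives))  # 12
```

A full enumeration is usually only a starting point: the decision team would typically select a handful of coherent, distinct combinations from it rather than evaluate every one.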

Assessing Alternatives via Deterministic Analysis

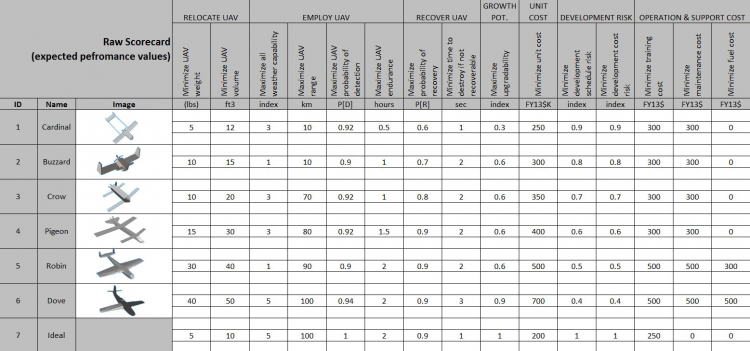

With objectives and measures established and alternatives defined, the decision team should engage SMEs, equipped with operational data, test data, simulations, models, and expert knowledge, to score each alternative on each measure. Scores are best captured on scoring sheets for each alternative/measure combination, which document the source and rationale. Figure 6 provides a summary of the scores.

Note that in addition to identified alternatives, the score matrix includes a row for the ideal alternative. The ideal is a tool for value-focused thinking, which will be covered later.

Synthesizing Results

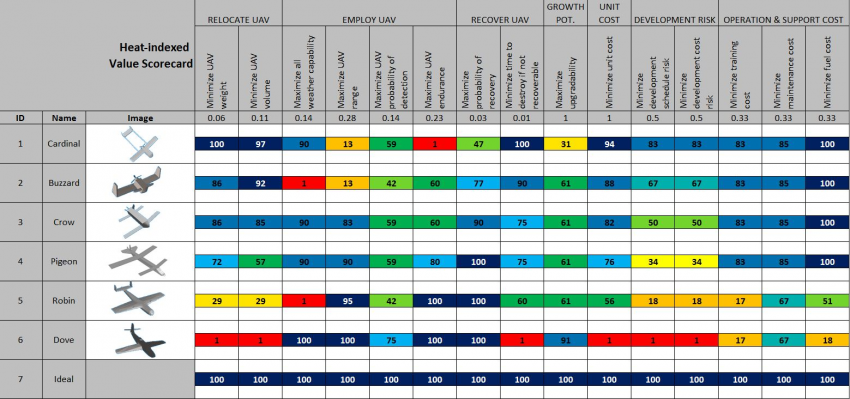

Next, one can transform the scores into a value table, by using the value functions developed previously. A color heat map can be useful to visualize value tradeoffs between alternatives and identify where alternatives need improvement (Figure 7).

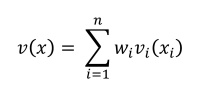

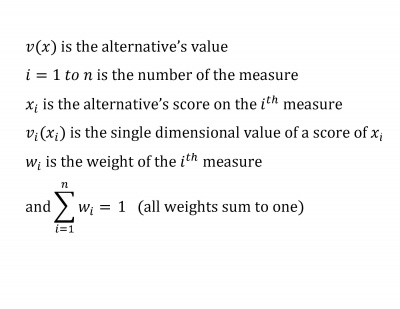

The additive value model uses the following equation to calculate each alternative’s value:

where

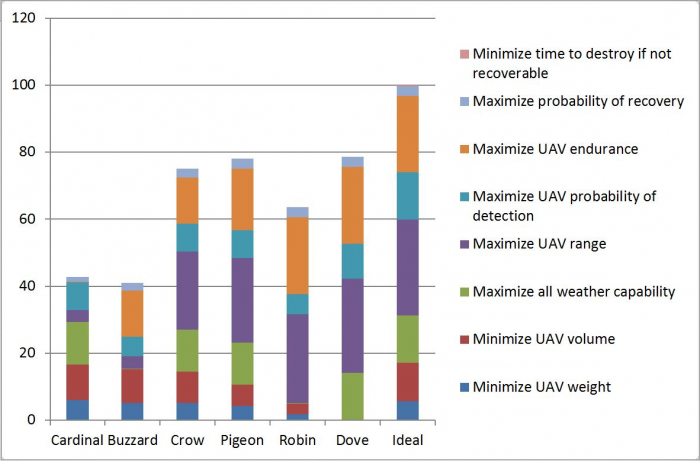

The value component chart (Figure 8) shows the total value and the weighted value measure contribution of each alternative (Parnell et al. 2011).
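The additive value model is commonly written as v(x) = Σ w_i · v_i(x_i), where w_i is the normalized swing weight of measure i and v_i(x_i) is the single-measure value of the alternative's score; this form is assumed here because the equation itself appears only as an image. The sketch below computes the total value together with the per-measure weighted contributions that a value component chart would display. All names and numbers are hypothetical.

```python
def additive_value(weights, values):
    """Return (total value, weighted contribution per measure).

    weights: normalized swing weights that sum to one
    values:  single-measure values (for example on a 0-100 scale)
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    contributions = {m: weights[m] * values[m] for m in weights}
    return sum(contributions.values()), contributions


# Hypothetical alternative scored on three measures.
weights = {"endurance": 0.5, "payload": 0.3, "range": 0.2}
values = {"endurance": 80.0, "payload": 50.0, "range": 100.0}
total, parts = additive_value(weights, values)
print(round(total, 6), {m: round(c, 6) for m, c in parts.items()})
```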

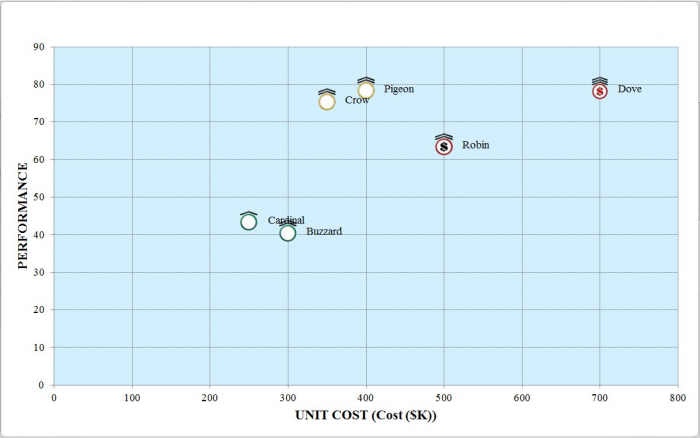

The heart of a decision management process for systems engineering trade-off analysis is the ability to assess all dimensions of shareholder and stakeholder value. The stakeholder value scatter plot in Figure 9 shows five dimensions for all alternatives: unit cost, performance, development risk, growth potential, and operation and support costs.

Each system alternative is represented by a scatter plot marker (Figure 9). An alternative’s unit cost and performance value are indicated by its x and y positions, respectively. An alternative’s development risk is indicated by the color of the marker (green = low, yellow = medium, red = high), while the growth potential is shown as the number of hats above the circular marker (1 hat = low, 2 hats = moderate, 3 hats = high).

Identifying Uncertainty and Conducting Probabilistic Analysis

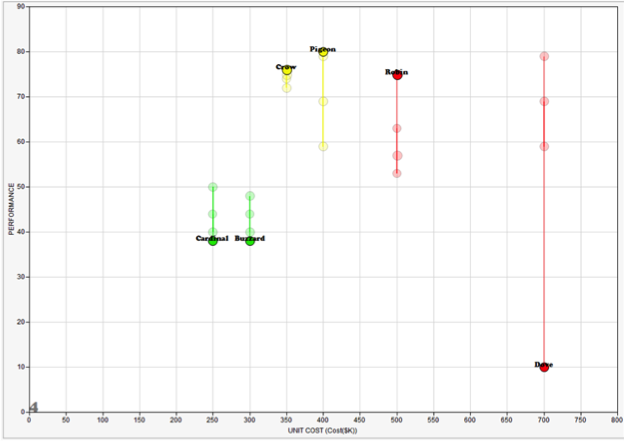

As part of the assessment, the SME should discuss the potential uncertainty of the independent variables. The independent variables are the variables that impact one or more scores; the scores themselves depend on them. Many times the SME can assess a lower, nominal, and upper bound by assuming low, moderate, and high performance. Using this data, a Monte Carlo simulation summarizes the impact of the uncertainties and can identify the uncertainties that have the most impact on the decision.
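A minimal Monte Carlo sketch follows. It assumes each uncertain input is described by a triangular distribution over the SME's lower, nominal, and upper bounds; the triangular shape is a modeling assumption made for illustration and is not prescribed by the source. The function names, inputs, and weights are hypothetical.

```python
import random


def simulate_value(bounds, value_fn, trials=10_000, seed=0):
    """Sample each input from a triangular distribution and evaluate value_fn.

    bounds: {name: (lower, nominal, upper)} as assessed by the SME
    value_fn: maps a dict of sampled inputs to a single value
    """
    rng = random.Random(seed)
    results = []
    for _ in range(trials):
        sample = {
            name: rng.triangular(low, high, mode)
            for name, (low, mode, high) in bounds.items()
        }
        results.append(value_fn(sample))
    return results


def summarize(results):
    """Return the mean and approximate 5th and 95th percentiles."""
    ordered = sorted(results)
    n = len(ordered)
    return {
        "mean": sum(ordered) / n,
        "p05": ordered[int(0.05 * (n - 1))],
        "p95": ordered[int(0.95 * (n - 1))],
    }


# Hypothetical single-measure values (0-100) with assessed bounds.
bounds = {"endurance": (40, 60, 90), "payload": (30, 50, 70)}
results = simulate_value(
    bounds, lambda s: 0.6 * s["endurance"] + 0.4 * s["payload"]
)
print(summarize(results))
```

Rerunning the simulation with one input fixed at its nominal value and comparing the spread of results is one simple way to see which uncertainty matters most.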

Assessing Impact of Uncertainty - Analyzing Risk and Sensitivity

Decision analysis uses many forms of sensitivity analysis, including line diagrams, tornado diagrams, and waterfall diagrams, as well as several uncertainty analyses, including Monte Carlo simulation, decision trees, and influence diagrams (Parnell et al. 2013). A line diagram is used to show the sensitivity to the swing weight judgment (Parnell et al. 2011). Figure 10 shows the results of a Monte Carlo simulation of performance value.
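The data behind a swing weight line diagram can be sketched as follows: one measure's weight is swept from 0 to 1 while the remaining weights are rescaled in proportion so that the total stays at one, and each alternative's additive value is recomputed at every step. Proportional rescaling is an assumed convention, and the alternatives and numbers are hypothetical.

```python
def weight_sensitivity(weights, values_by_alt, measure, steps=5):
    """Return {alternative: [total value at each swept weight]}."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    others = {m: w for m, w in weights.items() if m != measure}
    other_total = sum(others.values())
    if other_total <= 0:
        raise ValueError("the remaining weights must have a positive sum")
    lines = {alt: [] for alt in values_by_alt}
    for k in range(steps + 1):
        w = k / steps
        # Rescale the other weights so that all weights still sum to one.
        adjusted = {m: (1 - w) * ow / other_total for m, ow in others.items()}
        adjusted[measure] = w
        for alt, vals in values_by_alt.items():
            lines[alt].append(sum(adjusted[m] * vals[m] for m in adjusted))
    return lines


# Hypothetical weights and single-measure values for two alternatives.
weights = {"performance": 0.5, "cost": 0.3, "growth": 0.2}
values_by_alt = {
    "Alt A": {"performance": 80, "cost": 50, "growth": 100},
    "Alt B": {"performance": 60, "cost": 90, "growth": 40},
}
for alt, line in weight_sensitivity(weights, values_by_alt, "performance").items():
    print(alt, [round(v, 1) for v in line])
```

Plotting each list against the swept weight gives one line per alternative; a crossing point marks the weight at which the preferred alternative changes.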

Improving Alternatives

Mining the data generated for the alternatives will likely reveal opportunities to modify some design choices to claim untapped value and/or reduce risk. Taking advantage of initial findings to generate new and creative alternatives starts the process of transforming the decision process from "alternative-focused thinking" to "value-focused thinking" (Keeney 1993).

Communicating Tradeoffs

This is the point in the process where the decision analysis team identifies key observations about tradeoffs and the important uncertainties and risks.

Presenting Recommendations and Implementing Action Plan

It is often helpful to describe the recommendation(s) in the form of a clearly-worded, actionable task-list in order to increase the likelihood of the decision implementation. Reports are important for historical traceability and future decisions. Take the time and effort to create a comprehensive, high-quality report detailing study findings and supporting rationale. Consider static paper reports augmented with dynamic hyper-linked e-reports.

The Cognitive Bias Effect on Decisions

Research by (Kahneman 2011) and (Thaler and Sunstein 2008) has concluded that cognitive bias can seriously distort decisions made by any decision maker. Both Kahneman and Thaler were awarded the Nobel prize for their work. These distorted decisions have contributed to major catastrophes, such as Challenger and Columbia. Other sources attributing major catastrophes to cognitive bias are (Murata, Nakamura, and Karwowski 2015) and (Murata 2017).

(Kahneman 2011) and (Thaler and Sunstein 2008) have identified a large number of individual biases, the most well-known of which is the confirmation bias. This bias is the human tendency to interpret new evidence as confirmation of one's existing beliefs or theories. Regarding mitigation of these biases, there is general agreement that self-mitigation by the decision-maker is not feasible for most biases. (Thaler and Sunstein 2008) provide methods to influence the mitigation of most biases. They refer to these influences as “nudges”.

The consideration of cognitive biases in systems engineering is discussed by (Jackson 2017), (Jackson and Harel 2017), and (Jackson 2018). The primary theme of these references is that rational decisions are rarely possible and that cognitive bias must be taken into account.

Decisions with Cognitive Bias

According to (INCOSE 2015) ideal decisions are made while “objectively identifying, characterizing, and evaluating a set of alternatives for a decision…” Research in the field of behavioral economics has shown that these decisions can be distorted by a phenomenon known as cognitive bias. Furthermore, most decision makers are unaware of these biases. The literature also provides methods for mitigating these biases.

According to (Haselton, Nettle, and Andrews 2005, p. 2) a cognitive bias represents a situation in which “human cognition reliably produces representations that are systematically distorted compared to some aspect of objective reality.” Cognitive biases are typically stimulated by emotion and prior belief. The literature reveals large numbers of cognitive biases of which the following three are typical:

- The rankism bias. According to (Fuller 2011), rankism is simply the idea that persons of higher rank in an organization are better able to assert their authority over persons of lower rank regardless of the decision involved. Rankism frequently occurs in aircraft cockpits. According to (McCreary et al. 1998), rankism was a factor in the famous Tenerife disaster.

- The complacency bias. According to (Leveson 1995, pp. 54-55), complacency is the disregard for safety and the belief that current safety measures are adequate. According to (Leveson 1995, pp. 54-55), complacency played a role in the Three Mile Island and Bhopal disasters.

- The optimism bias. According to (Leveson 1995, pp. 54-55), famous physicist Richard Feynman states that NASA “exaggerates the reliability of the system.” This is an example of the optimism bias.

Mitigation of Cognitive Bias

Various sources have suggested methods to mitigate the effects of cognitive bias. Following are some of the major ones.

- Independent Review. The idea of independent review is that advice on decisions should come from an outside body, which the Columbia Accident Investigation Board (CAIB) (NASA 2003, 227) calls the Independent Technical Authority (ITA). This authority must be both organizationally and financially independent of the program in question. That is, the ITA cannot be subordinate to the program manager.

- Crew Resource Management. Following a period of high accident rates, several airlines adopted the crew resource management (CRM) method. The primary purposes of this method are, first, to ensure that all crew members do their jobs properly and, second, that they communicate with the pilot effectively when they have a concern. The impetus for this method was the judgment that many pilots were experiencing the rankism bias or were preoccupied with other tasks and simply did not understand the concerns of the other crew members. This strategy has been successful, and the accident rate has fallen.

- The Premortem. (Kahneman 2011, pp. 264-265) suggests this method of nudging in an organizational context. This method, like others, requires a certain amount of willingness on the part of the decision-maker to participate in the process. It calls for decision-makers to surround themselves with trusted experts in advance of major decisions. According to Kahneman, the primary job of the experts is to present the negative argument against any decision. For example, the experts might argue that the decision-maker should not authorize the launch now, but perhaps later.

References

Works Cited

Buede, D.M. 2009. The engineering design of systems: Models and methods. 2nd ed. Hoboken, NJ: John Wiley & Sons Inc.

Edwards, W., R.F. Miles Jr., and D. Von Winterfeldt. 2007. Advances In Decision Analysis: From Foundations to Applications. New York, NY: Cambridge University Press.

Fuller, R.W. 2011. "What is Rankism and Why Do We "Do" It?" Psychology Today. 25 May 2011. https://www.psychologytoday.com/us/blog/somebodies-and-nobodies/201002/what-is-rankism-and-why-do-we-do-it

Haselton, M.G., D. Nettle, and P.W. Andrews. 2005. "The Evolution of Cognitive Bias." Handbook of Psychology.

INCOSE. 2015. Systems Engineering Handbook, 4th Ed. Edited by D.D. Walden, G.J. Roedler, K.J. Forsberg, R.D. Hamelin, and T.M. Shortell. San Diego, CA: International Council on Systems Engineering (INCOSE).

ISO/IEC/IEEE. 2015. Systems and Software Engineering -- System Life Cycle Processes. Geneva, Switzerland: International Organisation for Standardisation / International Electrotechnical Commission / Institute of Electrical and Electronics Engineers. ISO/IEC/IEEE 15288:2015.

Kahneman, D. 2011. Thinking, Fast and Slow. New York, NY: Farrar, Straus and Giroux.

Keeney, R.L. and H. Raiffa. 1976. Decisions with Multiple Objectives - Preferences and Value Tradeoffs. New York, NY: Wiley.

Keeney, R.L. 1992. Value-Focused Thinking: A Path to Creative Decision-Making. Cambridge, MA: Harvard University Press.

Keeney, R.L. 1993. "Creativity in MS/OR: Value-focused thinking—Creativity directed toward decision making." Interfaces, 23(3), p.62–67.

Leveson, N. 1995. Safeware: System Safety and Computers. Reading, MA: Addison Wesley.

McCreary, J., M. Pollard, K. Stevenson, and M.B. Wilson. 1998. "Human Factors: Tenerife Revisited." Journal of Air Transportation World Wide. 3(1).

Murata, A. 2017. "Cultural Difference and Cognitive Biases as a Trigger of Critical Crashes or Disasters - Evidence from Case Studies of Human Factors Analysis." Journal of Behavioral and Brain Science. 7:299-415.

Murata, A., T. Nakamura, and W. Karwowski. 2015. "Influences of Cognitive Biases in Distorting Decision Making and Leading to Critical Unfavorable Incidents." Safety. 1:44-58.

Parnell, G.S. 2009. "Decision Analysis in One Chart," Decision Line, Newsletter of the Decision Sciences Institute. May 2009.

Parnell, G.S., P.J. Driscoll, and D.L. Henderson (eds). 2011. Decision Making for Systems Engineering and Management, 2nd ed. Wiley Series in Systems Engineering. Hoboken, NJ: Wiley & Sons Inc.

Parnell, G.S., T. Bresnick, S. Tani, and E. Johnson. 2013. Handbook of Decision Analysis. Hoboken, NJ: Wiley & Sons.

Thaler, Richard H., and Cass R. Sunstein. 2008. Nudge: Improving Decisions About Health, Wealth, and Happiness. New York: Penguin Books.

Primary References

Buede, D.M. 2004. "On Trade Studies." Proceedings of the 14th Annual International Council on Systems Engineering International Symposium, 20-24 June, 2004, Toulouse, France.

Keeney, R.L. 2004. "Making Better Decision Makers." Decision Analysis, 1(4), pp.193–204.

Keeney, R.L. & R.S. Gregory. 2005. "Selecting Attributes to Measure the Achievement of Objectives". Operations Research, 53(1), pp.1–11.

Kirkwood, C.W. 1996. Strategic Decision Making: Multiobjective Decision Analysis with Spreadsheets. Belmont, California: Duxbury Press.

Additional References

Buede, D.M. and R.W. Choisser. 1992. "Providing an Analytic Structure for Key System Design Choices." Journal of Multi-Criteria Decision Analysis, 1(1), pp.17–27.

Felix, A. 2004. "Standard Approach to Trade Studies." Proceedings of the International Council on Systems Engineering (INCOSE) Mid-Atlantic Regional Conference, November 2-4 2004, Arlington, VA.

Felix, A. 2005. "How the Pro-Active Program (Project) Manager Uses a Systems Engineer’s Trade Study as a Management Tool, and not just a Decision Making Process." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 10-15, 2005, Rochester, NY.

Jackson, S. 2017. "Irrationality in Decision Making: A Systems Engineering Perspective." INCOSE Insight, 74.

Jackson, S. 2018. "Cognitive Bias: A Game-Changer for Decision Management?" INCOSE Insight, 41-42.

Jackson, S. and A. Harel. 2017. "Systems Engineering Decision Analysis can benefit from Added Consideration of Cognitive Sciences." Systems Engineering. 55, 19 July.

Miller, G.A. 1956. "The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information." Psychological Review, 63(2), p.81.

Ross, A.M. and D.E. Hastings. 2005. "Tradespace Exploration Paradigm." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 10-15, 2005, Rochester, NY.

Sproles, N. 2002. "Formulating Measures of Effectiveness." Systems Engineering, 5(4), pp. 253-263.

Silletto, H. 2005. "Some Really Useful Principles: A new look at the scope and boundaries of systems engineering." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 10-15, 2005, Rochester, NY.

Ullman, D.G. and B.P. Spiegel. 2006. "Trade Studies with Uncertain Information." Proceedings of the International Council on Systems Engineering (INCOSE) International Symposium, July 9-13, 2006, Orlando, FL.