Difference between revisions of "Decision Management"


Revision as of 21:36, 9 August 2011

Making decisions is one of the most important processes practiced by systems engineers, project managers, and all team members. Sound decisions are based on good judgment and experience. There are concepts, methods, processes, and tools that can assist in the process of decision making, especially in making comparisons of decision alternatives. These tools can also assist in building team consensus in selecting and supporting the decision made and in defending it to others.

Decision Judgment Methods

Common alternative judgment methods range from indifference (“I don’t care what we decide to do, just do something…”) to probability based judgment. Methods that the practitioner should be aware of include:

- Emotion based judgment

- Intuition based judgment

- Expert based judgment

- Fact based judgment

- Probability based judgment

These are elaborated upon in the following paragraphs.

- Emotion Based Judgment: While most people would claim that their decisions are based on sound rationale, once a decision is made public (even within a small team) the decision-makers will vigorously defend their choice, often even in the face of contrary evidence. It is easy to become emotionally tied to your decision and to refuse to consider alternatives that are later proven to be superior. Another phenomenon is that people often need “permission” to support an action or idea, as explained by Cialdini (Cialdini 2006), and this inherent human trait also suggests why teams often resist new ideas.

- Intuition Based Judgment: Intuition plays a key role in leading development teams to creative solutions. Malcolm Gladwell (Gladwell 2005) argues strongly that we intuitively see the powerful benefits or fatal flaws inherent in a newly proposed solution. Kelly Johnson, the founder of the highly successful and creative Skunk Works at the Lockheed Aircraft Corporation (Rich and Janos 1996), was an amazing practitioner of intuitive decisions, but he always had his decisions backed up with detailed studies of alternatives, and these then became fact-based decisions. Intuition can be an excellent guide when based on relevant past experience, but it may blind you to as-yet undiscovered concepts. Even when it is appropriate, it is a starting point, not an end point. Ideas generated by intuition should be considered seriously, but they should be treated as the output of a brainstorming session and evaluated using one of the three approaches in the next three subsections.

- Expert Based Judgment: For certain problems, especially ones involving technical expertise outside your field, calling in experts is a cost-effective approach. When facing decisions such as surgery consultation, automobile repair, or electronic component troubleshooting, it makes sense to benefit from expert knowledge. The decision-making challenge is to establish perceptive criteria for selecting the right experts.

- Fact Based Judgment: This is the most common situation and is discussed in more detail in section 6.6.4.

Probability Based Judgment

Probability based decisions are made when there is uncertainty. Decision management techniques and tools for decisions under uncertainty include probability theory, utility functions, decision tree analysis, models, and simulations. A classic, mathematically oriented reference for understanding decision trees and probability analysis is (Raiffa 1997). Another classic introduction, with more of an applied focus, is (Schlaifer 1969). Modeling and simulation are covered in the popular textbook (Law 2007), which also has good coverage of Monte Carlo analysis. Some of the more commonly used and fundamental methods are summarized below.

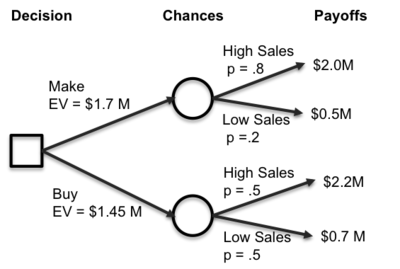

Decision trees and influence diagrams are visual analytical decision support tools where the expected values (or expected utility) of competing alternatives are calculated. A decision tree uses a tree-like graph or model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. Influence diagrams are used for decision models as alternate, more compact graphical representations of decision trees.

The decision tree figure in this section demonstrates a simplified make vs. buy decision analysis and the associated calculations. Suppose making a product costs $200K more than buying an alternative off the shelf, reflected as a difference in the net payoffs in the figure. The custom development is also expected to be a better product, with a correspondingly larger probability of high sales: 80% vs. 50% for the bought alternative. With these assumptions, the expected monetary value of the make alternative is 0.8*2.0M + 0.2*0.5M = 1.7M and that of the buy alternative is 0.5*2.2M + 0.5*0.7M = 1.45M, so the make alternative has the higher expected monetary value.
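The expected value arithmetic for the make vs. buy example can be sketched in a few lines of Python. This is only an illustrative sketch: the `Outcome` class, the `expected_value` function, and the variable names are hypothetical, and the payoffs (in millions of dollars) and probabilities are the ones quoted in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One chance branch of a decision tree (hypothetical helper type)."""
    probability: float
    payoff: float  # net payoff in millions of dollars


def expected_value(outcomes):
    """Probability-weighted sum of payoffs for one alternative."""
    total_probability = sum(o.probability for o in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("branch probabilities must sum to 1")
    return sum(o.probability * o.payoff for o in outcomes)


# Chance branches for each alternative: high sales first, then low sales.
alternatives = {
    "make": [Outcome(0.8, 2.0), Outcome(0.2, 0.5)],
    "buy": [Outcome(0.5, 2.2), Outcome(0.5, 0.7)],
}

expected = {name: expected_value(branches) for name, branches in alternatives.items()}
best = max(expected, key=expected.get)

for name, value in expected.items():
    print(f"{name}: expected value = {value:.2f}M")
print(f"highest expected monetary value: {best}")
```

Keeping each alternative as a list of branches makes it straightforward to add further chance outcomes, as long as the probabilities for each alternative still sum to one.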

Influence diagrams focus attention on the issues and relationships between events. They are generalizations of Bayesian networks in which maximum expected utility criteria can be modeled. A good reference for using influence diagrams in team decision analysis is (Detwarasiti and Shachter 2005, 207-228).

Expected utility is more general than expected value. Utility is a measure of relative satisfaction that takes into account the decision-maker's preference function, which may be nonlinear. Expected utility theory deals with the analysis of choices with multidimensional outcomes. The analyst should determine the decision-maker's utility for money and select the alternative course of action that yields the highest expected utility, rather than the highest expected monetary value. A classic reference on applying multiple objective methods, utility functions, and allied techniques is (Keeney and Raiffa 1976). References with applied examples of decision tree analysis and utility functions include (Samson 1988) and (Skinner 1999).
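To illustrate how a nonlinear preference function can change the preferred alternative, the sketch below reuses the make vs. buy payoffs from the decision tree example and scores them with an exponential utility function. The exponential form, the `risk_tolerance` parameter, and all function names are assumptions chosen for illustration; the text does not prescribe a particular utility function.

```python
import math

# (probability, net payoff in $M) pairs from the make vs. buy example.
ALTERNATIVES = {
    "make": [(0.8, 2.0), (0.2, 0.5)],
    "buy": [(0.5, 2.2), (0.5, 0.7)],
}


def exponential_utility(payoff, risk_tolerance):
    """Assumed concave utility: a smaller risk_tolerance means more risk aversion."""
    return 1.0 - math.exp(-payoff / risk_tolerance)


def expected_utility(outcomes, risk_tolerance):
    """Probability-weighted utility of one alternative's outcomes."""
    return sum(p * exponential_utility(x, risk_tolerance) for p, x in outcomes)


def preferred_alternative(risk_tolerance):
    """Alternative with the highest expected utility for this decision-maker."""
    return max(
        ALTERNATIVES,
        key=lambda name: expected_utility(ALTERNATIVES[name], risk_tolerance),
    )


for tolerance in (1.0, 0.2):
    print(f"risk tolerance {tolerance}: prefer {preferred_alternative(tolerance)}")
```

Under these assumptions, a decision-maker with a risk tolerance of 1.0 still prefers the make alternative, while a strongly risk-averse decision-maker with a risk tolerance of 0.2 prefers the buy alternative, because its low-sales payoff is better even though its expected monetary value is lower.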

(Blanchard 2004b) shows a variety of these decision analysis methods in many technical decision scenarios. A comprehensive reference demonstrating decision analysis methods for software-intensive systems is (Boehm 1981, 32-41). It is a major treatment of multiple goal decision analysis, dealing with uncertainties, risks, and the value of information. Facets of a decision situation that cannot be explained by a quantitative model should be reserved for intuition and judgment applied by the decision-maker.

Sometimes outside parties are also called upon. One method to canvass experts is the Delphi Technique, a procedure for organizing and sharing expert forecasts about future outcomes or parameter values. The Delphi Technique is a method of group decision-making and forecasting that involves successively collating the judgments of experts. A variant called the Wideband Delphi technique, described in (Boehm 1981, 32-41), improves upon the standard Delphi with more rigorous iterations of statistical analysis and feedback forms.

General tools, such as spreadsheets and simulation packages, can be used with these methods. There are also tools targeted specifically at aspects of decision analysis such as decision trees, evaluation of probabilities, Bayesian influence networks, and others. The INCOSE website for the tools database (INCOSE 2010, 1) has an extensive list of analysis tools.

Linkages to Other Systems Engineering Management Topics

The Decision Management process is closely coupled with the Measurement, Planning, Assessment and Control, and Risk Management processes. The Measurement process describes how to derive quantitative indicators as input to decisions. Refer to the Planning process area for more information about incorporating decision results into project plans.

Practical Considerations

Key pitfalls and good practices related to decision analysis are described below.

Pitfalls

Some of the key pitfalls are:

- False confidence in the accuracy of values used in decisions.

- Not engaging experts and holding peer reviews. The decision-maker should engage experts to validate decision values.

- Prime sources of errors in risky decision-making include false assumptions, not having an accurate estimation of the probabilities, relying on expectations, difficulties in measuring the utility function, and forecast errors.

- The analytic hierarchy process may not handle real-life situations well, given the theoretical difficulties in using eigenvectors.

Good Practices

Some good practices are below.

| Name | Description |
|---|---|
| Progressive Decision Modeling | |
| Necessary Measurements | |
| Define Selection Criteria | |

References


Primary References

Cialdini, Robert B. 2006. Influence: The Psychology of Persuasion. Collins Business Essentials.

Forsberg, K., H. Mooz, and H. Cotterman. 2005. Visualizing Project Management. 3rd ed. John Wiley and Sons. pp. 154-155.

Gladwell, Malcolm. 2005. Blink: the Power of Thinking without Thinking. Little, Brown & Co.

Kepner, C. H., B. B. Tregoe. 1997. The New Rational Manager. Princeton University Press.

Raiffa, H. 1997. Decision Analysis: Introductory Lectures on Choices under Uncertainty. New York, NY: McGraw-Hill.

Rich, Ben, and Leo Janos. 1996. Skunk Works. Little, Brown & Company.

Saaty, Thomas L. 2008. Decision Making for Leaders: The Analytic Hierarchy Process for Decisions in a Complex World. Pittsburgh, Pennsylvania: RWS Publications. ISBN 0-9620317-8-X.

Schlaifer, R. 1969. Analysis of Decisions under Uncertainty. New York, NY: McGraw-Hill Book Company.

Wikipedia. 2011. Decision making software.

Additional References


Blanchard, B. S. 2004. Systems engineering management. 3rd ed. New York, NY: John Wiley & Sons.

Boehm, B. 1981. Software risk management: Principles and practices. IEEE Software 8 (1) (January 1991): 32-41.

Detwarasiti, A., and R. D. Shachter. 2005. Influence diagrams for team decision analysis. Decision Analysis 2 (4): 207-28.

INCOSE. 2011. INCOSE systems engineering handbook, version 3.2.1. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2003-002-03.2.

Keeney, R. L., and H. Raiffa. 1976. Decisions with multiple objectives: Preferences and value trade-offs. New York, NY: John Wiley & Sons.

Law, A. 2007. Simulation Modeling and Analysis. 4th ed. New York, NY: McGraw Hill.

Parnell, G. S., P. J. Driscoll, and D. L. Henderson. 2010. Decision Making in Systems Engineering and Management. New York, NY: John Wiley & Sons.

Samson, D. 1988. Managerial decision analysis. New York, NY: Richard D. Irwin, Inc.

Skinner, D. 1999. Introduction to decision analysis. 2nd ed. Sugar Land, TX, USA: Probabilistic Publishing.