System Analysis

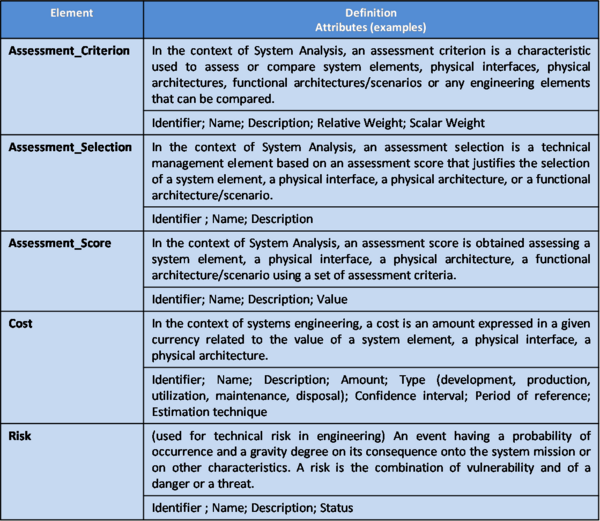


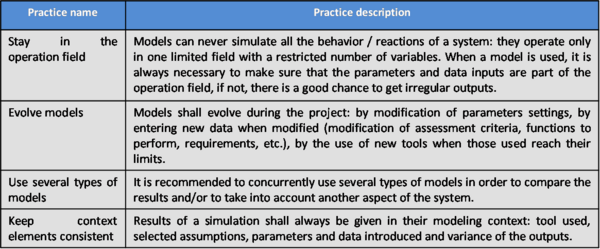


Revision as of 16:22, 15 September 2011

System analysis allows system developers to carry out objective, quantitative assessments in order to select and/or update the most efficient system architecture and to generate derived engineering data.

During engineering, assessments should be performed every time technical choices or decisions are made, to determine compliance with system requirements. System analysis provides a rigorous approach to technical decision-making. It is used to perform trade-off studies, and includes modeling and simulation, cost analysis, technical risks analysis, and effectiveness analysis.

Principles Governing System Analysis

One of the major tasks of the systems engineer is to evaluate the artifacts created during the systems engineering (SE) process. These evaluations are at the center of system analysis, which provides means and techniques:

- to define assessment criteria based on system requirements;

- to assess design properties of each candidate solution in comparison to these criteria;

- to compute a global score for each candidate solution and to justify the scores; and

- to decide.

The number and importance of the assessment criteria to be used depend on the type of system and its context of operational use.

Trade-off studies

In the context of the definition of a system, a trade-off study consists of comparing the characteristics of each candidate system element to determine the solution that best balances the assessment criteria overall. The characteristics analyzed are grouped into cost analysis, technical risks analysis, and effectiveness analysis (NASA 2007). Each class of analysis involves the following elements:

- Assessment criteria are used to rank the candidate solutions against one another. They can be absolute or relative; for example: maximum cost per unit produced is cc$, cost reduction shall be x%, effectiveness improvement is y%, and risk mitigation is z%.

- Boundaries identify and limit the characteristics or criteria to be taken into account in the analysis; for example: the kinds of costs to be taken into account, acceptable technical risks, and the type and level of effectiveness.

- Scales are used to quantify the characteristics, properties, and/or criteria and to make comparisons. Their definition requires knowing the highest and lowest limits as well as the type of evolution of the characteristic (linear, logarithmic, etc.).

- An assessment score is assigned to a characteristic or criterion for each candidate solution. The goal of the trade-off study is to quantify the three variables of cost, risk, and effectiveness (and their decomposition into sub-variables) for each candidate solution. This operation is generally complex and requires the use of models.

- Optimizing the characteristics or properties improves the scores of the most promising solutions.
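The elements above can be illustrated with a minimal weighted-sum sketch in Python. It assumes that each criterion has a comparison scale bounded by a lowest and a highest limit, that the scale evolves either linearly or logarithmically, and that the global score is a weighted sum of the normalized scores. The weighted sum is only one common aggregation rule, not one prescribed by the source, and the criterion names, limits, weights, and candidate values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    weight: float                 # relative importance (normalized in global_score)
    low: float                    # lowest limit of the comparison scale
    high: float                   # highest limit of the comparison scale
    higher_is_better: bool = True
    scale: str = "linear"         # "linear" or "log" evolution of the characteristic


def normalize(value: float, c: Criterion) -> float:
    """Place a raw value on the criterion's 0..1 comparison scale."""
    v = min(max(value, c.low), c.high)  # clip to the scale limits
    if c.scale == "log":
        x = math.log(v / c.low) / math.log(c.high / c.low)
    else:
        x = (v - c.low) / (c.high - c.low)
    return x if c.higher_is_better else 1.0 - x


def global_score(values: dict, criteria: list) -> float:
    """Weighted sum of normalized scores, divided by the total weight."""
    total_weight = sum(c.weight for c in criteria)
    return sum(c.weight * normalize(values[c.name], c) for c in criteria) / total_weight


# Hypothetical criteria and candidate solutions.
criteria = [
    Criterion("unit_cost", 0.5, 100.0, 1000.0, higher_is_better=False),
    Criterion("risk", 0.2, 1.0, 25.0, higher_is_better=False),
    Criterion("throughput", 0.3, 10.0, 10000.0, scale="log"),
]
candidates = {
    "A": {"unit_cost": 400.0, "risk": 6.0, "throughput": 1000.0},
    "B": {"unit_cost": 250.0, "risk": 12.0, "throughput": 100.0},
}
scores = {name: global_score(v, criteria) for name, v in candidates.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking, {k: round(s, 3) for k, s in scores.items()})
```

With these hypothetical numbers, candidate A ranks ahead of candidate B (about 0.692 versus 0.625), even though B is cheaper per unit.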

Decision-making is not an exact science, and trade-off studies have limits. The following concerns should be taken into account:

- Subjective criteria: for example, the component has to be beautiful. What is a beautiful component?

- Uncertain data: for example, inflation has to be taken into account to estimate the cost of maintenance over the complete life cycle. What will inflation be over the next five years?

- Sensitivity analysis: the global assessment score associated with each candidate solution is not absolute, so a robust selection should be sought by performing a sensitivity analysis that considers small variations of the assessment criteria values (weights). The selection is robust if these variations do not change the order of the scores.
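The sensitivity analysis concern can be sketched in Python as a one-at-a-time check, assuming normalized per-criterion scores and a weighted-sum global score: each weight is varied by a small relative amount (10% here, an arbitrary choice), and the selection is treated as robust if the order of scores never changes. The score values and weights are hypothetical.

```python
def rank(scores: dict, weights: dict) -> list:
    """Order candidates by weighted global score (highest first)."""
    total = sum(weights.values())

    def global_score(name):
        return sum(weights[c] * s for c, s in scores[name].items()) / total

    return sorted(scores, key=global_score, reverse=True)


def is_robust(scores: dict, weights: dict, delta: float = 0.1) -> bool:
    """One-at-a-time sensitivity check: vary each weight by +/- delta
    (relative) and report whether the ranking order ever changes."""
    baseline = rank(scores, weights)
    for criterion in weights:
        for sign in (-1.0, 1.0):
            perturbed = dict(weights)
            perturbed[criterion] = weights[criterion] * (1.0 + sign * delta)
            if rank(scores, perturbed) != baseline:
                return False
    return True


# Hypothetical normalized scores (0..1) per criterion.
clear_case = {"A": {"cost": 0.67, "risk": 0.79, "perf": 0.67},
              "B": {"cost": 0.83, "risk": 0.54, "perf": 0.33}}
close_case = {"C": {"cost": 0.9, "perf": 0.2},
              "D": {"cost": 0.5, "perf": 0.6}}
print(is_robust(clear_case, {"cost": 0.5, "risk": 0.2, "perf": 0.3}))  # True
print(is_robust(close_case, {"cost": 0.52, "perf": 0.48}))             # False
```

In the close case, C narrowly beats D at the nominal weights, but lowering the cost weight by 10% reverses the order, so that selection would not be considered robust.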

A thorough trade-off study specifies the assumptions, variables, and confidence intervals of the results.

Cost Analysis

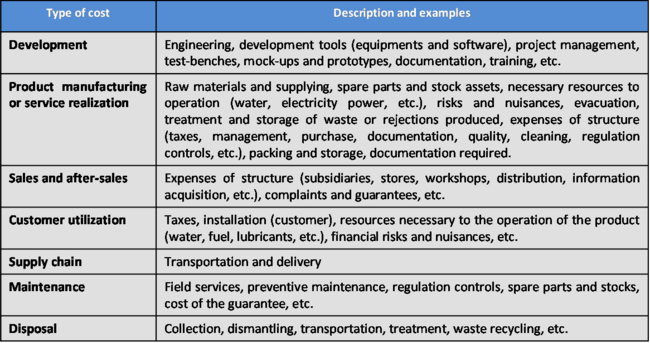

A cost analysis considers the full life cycle costs. The cost baseline can be adapted according to the project and the system. The global life cycle cost (LCC), or total ownership cost (TOC), may include, for example, the labor and non-labor cost items indicated in Table 1.

Table 1. Types of Costs.

Methods for determining cost are described in the Planning topic.
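A minimal Python sketch of summing life cycle cost items follows. It assumes that each item is given as yearly amounts in base-year currency and that a single constant annual inflation rate escalates them; the cost items and figures are hypothetical and do not reproduce Table 1. Re-running the sum for several inflation rates is one simple way to expose the uncertain-data concern raised for trade-off studies.

```python
def life_cycle_cost(cost_items: dict, inflation: float) -> dict:
    """Escalate each item's yearly base-year amounts by a constant annual
    inflation rate and sum them; returns per-item totals plus "total"."""
    totals = {}
    for item, yearly_amounts in cost_items.items():
        totals[item] = sum(amount * (1.0 + inflation) ** year
                           for year, amount in enumerate(yearly_amounts))
    totals["total"] = sum(totals.values())
    return totals


# Hypothetical cost items, one amount per year starting at year 0.
cost_items = {
    "development": [100.0, 50.0],
    "production": [0.0, 200.0],
    "maintenance": [0.0, 10.0, 10.0, 10.0],
}
for rate in (0.0, 0.02, 0.04):
    print(rate, round(life_cycle_cost(cost_items, rate)["total"], 2))
```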

Technical Risks Analysis

Risk analysis in any domain is based on three things:

- Analysis of potential threats or undesired events and their probability of occurrence.

- Analysis of the consequences of these threats or undesired events and their classification on a severity scale.

- Mitigation to reduce the probabilities of threats and/or the levels of harmful effect to acceptable values.
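The three steps above can be sketched in Python with a common probability-times-severity criticality index. The 1-to-5 ordinal scales, the product rule, and the acceptance threshold are assumptions for illustration, not values from the source; the example risks are hypothetical instances of typical technical risk causes.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: int  # ordinal scale: 1 (rare) .. 5 (almost certain)
    severity: int     # ordinal scale: 1 (negligible) .. 5 (catastrophic)

    @property
    def criticality(self) -> int:
        return self.probability * self.severity


ACCEPTABLE = 6  # hypothetical acceptance threshold for criticality


def needs_mitigation(risks: list) -> list:
    """Names of risks above the acceptance threshold, most critical first."""
    ranked = sorted(risks, key=lambda r: r.criticality, reverse=True)
    return [r.name for r in ranked if r.criticality > ACCEPTABLE]


risks = [
    Risk("over-estimated maturity of a sensor", 4, 4),
    Risk("supplier delivery delay", 3, 2),
    Risk("obsolescence of an electronic part", 2, 4),
]
print(needs_mitigation(risks))
# Mitigation (e.g., a technology demonstrator) might lower the probability:
mitigated = Risk("over-estimated maturity of a sensor", 1, 4)
print(mitigated.criticality <= ACCEPTABLE)  # True
```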

Technical risks appear when the system can no longer satisfy the system requirements. Their causes reside in the solution itself and/or in the requirements. They manifest as insufficient effectiveness and can have multiple causes; for example: incorrect assessment of technological capabilities; over-estimation of the technical maturity of a system element; failure of parts, breakdowns, or breakage; obsolescence of equipment, parts, or software; supplier weaknesses (non-compliant parts, supply delays, etc.); and human factors (insufficient training, wrong tuning, error handling, unsuitable procedures, malice).

Technical risks should not be confused with project risks, even though the method for managing them is the same. Technical risks address the system itself, not the process for its development. Of course, technical risks may lead to project risks.

See Risk Management for more details.

Effectiveness Analysis

Effectiveness studies use the requirements as a starting point. The effectiveness of the system covers several essential characteristics, which are generally grouped into analyses including, but not limited to: performance, usability, dependability, manufacturing, maintenance or support, and environment. These analyses examine the candidate solutions from various perspectives.

It is essential to establish a classification in order to limit the number of analyses to the truly significant aspects, such as key performance parameters. The main difficulty of effectiveness analysis is sorting and selecting the right set of effectiveness aspects; for example, if the product is made for a single use, maintainability will not be a relevant criterion.
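As a minimal Python sketch, effectiveness can be checked by comparing a candidate against requirement thresholds on the aspects retained as significant, while aspects judged irrelevant (such as maintainability for a single-use product) are excluded. The aspect names, thresholds, and candidate values are hypothetical.

```python
# Hypothetical requirement thresholds: aspect -> (threshold, higher_is_better)
REQUIREMENTS = {
    "range_km": (500.0, True),     # performance
    "mass_kg": (1200.0, False),    # performance
    "mttr_h": (4.0, False),        # maintainability: mean time to repair
}


def effectiveness_gaps(candidate: dict, requirements: dict, excluded=()) -> dict:
    """Return the shortfall of a candidate on each relevant aspect.
    Aspects listed in `excluded` are judged not significant for this
    system and are skipped."""
    gaps = {}
    for aspect, (threshold, higher_is_better) in requirements.items():
        if aspect in excluded:
            continue
        value = candidate[aspect]
        meets = value >= threshold if higher_is_better else value <= threshold
        if not meets:
            gaps[aspect] = value - threshold
    return gaps


candidate = {"range_km": 450.0, "mass_kg": 1100.0, "mttr_h": 6.0}
print(effectiveness_gaps(candidate, REQUIREMENTS))
print(effectiveness_gaps(candidate, REQUIREMENTS, excluded={"mttr_h"}))  # single-use product
```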

Process Approach - System Analysis

Purpose and principles of the approach

The system analysis process is used to: (1) provide a rigorous basis for technical decision making, resolution of requirement conflicts, and assessment of alternative physical solutions; (2) determine progress in satisfying system requirements and derived requirements; (3) support risk management; and (4) ensure that decisions are made only after evaluating the cost, schedule, performance, and risk effects on the engineering or reengineering of the system (ANSI/EIA 1998).

This process is called the "Decision Analysis Process" by NASA (2007, 1-360). The Decision Analysis Process is used to help evaluate technical issues, alternatives, and their uncertainties in support of decision-making. See Decision Management for more details.

System analysis supports other system definition processes:

- The stakeholder requirements definition and system requirements definition processes use system analysis to resolve conflicts among requirements, in particular those related to costs, technical risks, and effectiveness (performance, operational conditions, and constraints). System requirements that are subject to high risks, or that would require different architectures, are discussed.

- The architectural design process uses it to assess the characteristics or design properties of candidate functional and physical architectures, providing arguments for selecting the most efficient one in terms of costs, technical risks, and effectiveness (e.g., performance, dependability, human factors).

Like any system definition process, the system analysis process is iterative. Each operation is carried out several times, and each iteration improves the precision of the analysis.

Activities of the Process

Major activities and tasks performed during this process include:

- Planning the trade-off studies:

  - Determine the number of candidate solutions to analyze, the methods and procedures to be used, the expected results (objects to be selected: functional architecture/scenario, physical architecture, system element, etc.), and the justification items.

  - Schedule the analyses according to the availability of models, engineering data (system requirements, design properties), skilled personnel, and procedures.

- Defining the selection criteria model:

  - Select the assessment criteria from non-functional requirements (performance, operational conditions, constraints, etc.) and/or from design properties.

  - Sort and order the assessment criteria.

  - Establish a comparison scale for each assessment criterion, and weight each assessment criterion according to its importance relative to the others.

- Identifying candidate solutions, related models, and data.

- Assessing candidate solutions using the previously defined methods or procedures:

  - Carry out cost analysis, technical risks analysis, and effectiveness analysis, placing each candidate solution on the comparison scale of each assessment criterion.

  - Assign an assessment score to each candidate solution.

- Providing results to the calling process: assessment criteria, comparison scales, solutions' scores, assessment selection, and possibly recommendations and related arguments.
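When the assessment criteria have been sorted and ordered but exact weights are hard to agree on, one published technique, rank-order centroid (ROC) weighting, derives weights from the order alone: with n criteria, the criterion ranked i receives w_i = (1/n) * sum over k from i to n of 1/k. The source does not prescribe this method; the Python sketch is one possible way to perform the weighting step, and the criterion names are hypothetical.

```python
def rank_order_centroid(ordered_criteria: list) -> dict:
    """Rank-order centroid weights; ordered_criteria[0] is the most important.
    The weights are positive, decreasing, and sum to 1."""
    n = len(ordered_criteria)
    return {
        name: sum(1.0 / k for k in range(i, n + 1)) / n
        for i, name in enumerate(ordered_criteria, start=1)
    }


weights = rank_order_centroid(["effectiveness", "cost", "technical risk"])
print({name: round(w, 3) for name, w in weights.items()})
# {'effectiveness': 0.611, 'cost': 0.278, 'technical risk': 0.111}
```

The resulting weights can then be refined by the team or checked with a sensitivity analysis, since ROC only encodes the order of importance, not its magnitude.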

Artifacts and Ontology Elements

This process may create several artifacts such as:

- Selection criteria model (list, scales, weighting)

- Cost, risk, and effectiveness analysis reports

- Justification reports

This process handles the ontology elements of Table 2 within system analysis.

Table 2. Main Ontology Elements As Handled Within System Analysis.

The main relationships between ontology elements of system analysis are presented in Figure 1.

Figure 1. System Analysis Elements Relationships With Other Engineering Elements (Faisandier 2011).

Checking and Correctness of System Analysis

The main items to be checked during system analysis in order to get validated arguments are:

- Relevance of the models and data in the context of use of the system.

- Relevance of assessment criteria related to the context of use of the system.

- Reproducibility of simulation results and of calculations.

- Precision level of the comparison scales.

- Confidence in estimates.

- Sensitivity of solutions' scores related to assessment criteria weights.
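Two of the checks above, reproducibility of simulation results and confidence in estimates, can be illustrated with a small Monte Carlo sketch in Python. Seeding a dedicated random generator makes a run repeatable, and a normal-approximation interval (mean +/- 1.96 standard errors) quantifies the sampling uncertainty of the simulated mean. The triangular cost estimates are hypothetical, and the interval reflects only Monte Carlo sampling error, not errors in the model itself.

```python
import random
import statistics


def simulate_cost(n_runs: int, seed: int) -> list:
    """Monte Carlo samples of a total cost built from two uncertain items."""
    rng = random.Random(seed)  # dedicated seeded generator: same seed, same samples
    samples = []
    for _ in range(n_runs):
        labor = rng.triangular(80.0, 140.0, 100.0)  # (low, high, mode), hypothetical
        parts = rng.triangular(40.0, 70.0, 50.0)
        samples.append(labor + parts)
    return samples


def mean_with_ci(samples: list, z: float = 1.96):
    """Sample mean and approximate 95% confidence interval for that mean."""
    mean = statistics.fmean(samples)
    half_width = z * statistics.stdev(samples) / len(samples) ** 0.5
    return mean, (mean - half_width, mean + half_width)


run_1 = simulate_cost(2000, seed=42)
run_2 = simulate_cost(2000, seed=42)
print(run_1 == run_2)  # True: identical samples, so the result is reproducible
print(mean_with_ci(run_1))
```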

See (Ring, Eisner, and Maier 2010) for additional perspective.

Methods and Modeling Techniques

- General usage of models: various types of models can be used in the context of system analysis:

  - Physical models are scale models allowing the simulation of physical phenomena; they are specific to each discipline. Associated tools include, for example, mock-ups, vibration tables, test benches, prototypes, decompression chambers, and wind tunnels.

  - Representation models are mainly used to simulate the behavior of a system; for example, enhanced functional flow block diagrams (EFFBD), statecharts, and state machine diagrams (SysML).

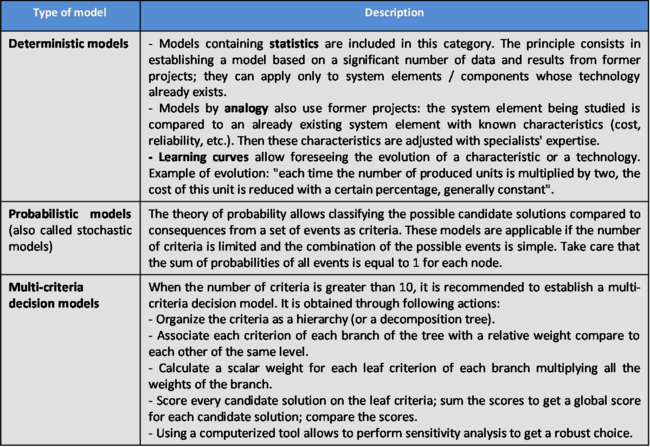

  - Analytical models are mainly used to establish estimated values; they can be deterministic or probabilistic (also known as stochastic). Analytical models use equations or diagrams to approximate the real operation of the system. They range from the simplest (an addition) to the most complicated (a probability distribution with several variables).

- Use the right models depending on the progress of the project:

  - At the beginning of the project, the first studies use simple tools that allow rough approximations, which have the advantage of not requiring much time and effort; these approximations are often sufficient to eliminate unrealistic or clearly unsuitable candidate solutions.

  - As the project progresses, the precision of the data must be improved in order to compare the candidate solutions that are still competing. The work is more complicated when the level of innovation is high.

  - A systems engineer alone cannot model a complex system; they must be supported by skilled people from the different disciplines involved.

- Specialist expertise: when the values of assessment criteria cannot be given in an objective or precise way, or when subjective aspects dominate, specialists can be asked for their expertise. The estimates proceed in four steps:

  - Select interviewees to collect the opinions of qualified people in the field considered.

  - Draft a questionnaire; a precise questionnaire allows easy analysis, but a questionnaire that is too closed risks neglecting significant points.

  - Interview a limited number of specialists with the questionnaire, and hold an in-depth discussion to obtain precise opinions.

  - Analyze the data with several different people and compare their impressions until an agreement on a classification of assessment criteria and/or candidate solutions is reached.
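The last step, comparing specialists' impressions until they agree, can be supported by a statistic of agreement. One standard choice, swapped in here because the source names no method, is Kendall's coefficient of concordance, W = 12S / (m^2 (n^3 - n)), for m specialists ranking n items without ties, where S is the sum of squared deviations of each item's rank total from its mean m(n + 1)/2. W equals 1 for complete agreement and 0 for no agreement. A minimal Python sketch with hypothetical rankings:

```python
def kendalls_w(rankings: list) -> float:
    """Kendall's W for m specialists ranking the same n items (no ties).
    rankings[j][i] is the rank (1..n) that specialist j gives item i."""
    m = len(rankings)
    n = len(rankings[0])
    rank_totals = [sum(r[i] for r in rankings) for i in range(n)]
    mean_total = m * (n + 1) / 2
    s = sum((t - mean_total) ** 2 for t in rank_totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Specialists ranking candidate solutions (hypothetical data).
print(kendalls_w([[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]]))  # 1.0: full agreement
print(kendalls_w([[1, 2, 3], [3, 2, 1]]))                      # 0.0: opposite views
print(round(kendalls_w([[1, 2, 3, 4], [2, 1, 3, 4], [1, 3, 2, 4]]), 3))  # 0.778
```

A value that rises between interview rounds suggests the discussion is converging; the threshold treated as "agreement" remains a judgment for the team.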

Often used analytical models in the context of system analysis are summarized in Table 3.

Table 3. Often Used Analytical Models in the Context of System Analysis.
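To contrast the deterministic and probabilistic analytical models described earlier, here is a minimal Python sketch of a mass budget. The deterministic model is a simple addition of nominal element masses; the probabilistic model treats each mass as an independent normal random variable and estimates, by Monte Carlo sampling, the probability of exceeding the budget. The elements, masses, spreads, the independence and normality assumptions, and the budget are all hypothetical.

```python
import random

# Hypothetical system elements: name -> (nominal mass in kg, standard deviation in kg)
ELEMENTS = {"structure": (420.0, 20.0), "propulsion": (310.0, 25.0), "avionics": (95.0, 10.0)}


def deterministic_mass(elements: dict) -> float:
    """Simplest analytical model: add the nominal masses."""
    return sum(nominal for nominal, _ in elements.values())


def probability_over_budget(elements: dict, budget: float,
                            n_runs: int = 20000, seed: int = 1) -> float:
    """Probabilistic model: sample each mass independently from a normal law
    and count how often the total exceeds the budget."""
    rng = random.Random(seed)
    exceed = 0
    for _ in range(n_runs):
        total = sum(rng.gauss(mu, sigma) for mu, sigma in elements.values())
        if total > budget:
            exceed += 1
    return exceed / n_runs


print(deterministic_mass(ELEMENTS))              # 825.0: a 25 kg margin on 850 kg
print(probability_over_budget(ELEMENTS, 850.0))  # roughly 0.23
```

Under these assumptions, the deterministic sum suggests a comfortable margin, whereas the probabilistic model indicates that the budget would be exceeded in roughly one case out of four or five.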

Practical Considerations about System Analysis

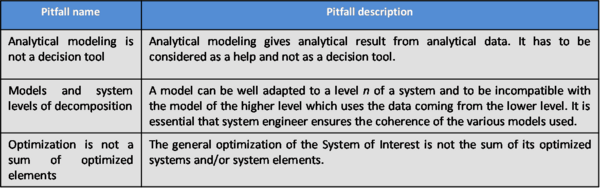

Major pitfalls encountered with system analysis are presented in Table 4.

Table 4. Pitfalls with System Analysis.

Proven practices with system analysis are presented in Table 5.

Table 5. Proven Practices with System Analysis.

References

This article relies heavily on limited sources. Reviewers are requested to identify additional sources.

Citations

ANSI/EIA. 1998. Processes for Engineering a System. Philadelphia, PA, USA: American National Standards Institute (ANSI)/Electronic Industries Association (EIA), ANSI/EIA-632-1998.

NASA. 2007. Systems Engineering Handbook. Washington, D.C., USA: National Aeronautics and Space Administration (NASA), NASA/SP-2007-6105.

Ring, J., H. Eisner, and M. Maier. 2010. "Key Issues of Systems Engineering, Part 3: Proving Your Design." INCOSE Insight 13(2).

Primary References

ANSI/EIA. 1998. Processes for Engineering a System. Philadelphia, PA, USA: American National Standards Institute (ANSI)/Electronic Industries Association (EIA), ANSI/EIA-632-1998.

Blanchard, B.S., and W.J. Fabrycky. 2010. Systems Engineering and Analysis. 5th ed. Prentice-Hall International Series in Industrial and Systems Engineering. Englewood Cliffs, NJ, USA: Prentice-Hall.

NASA. 2007. Systems Engineering Handbook. Washington, D.C., USA: National Aeronautics and Space Administration (NASA), NASA/SP-2007-6105.

Additional References

Faisandier, A. 2011 (unpublished). Engineering and Architecting Multidisciplinary Systems.

Ring, J., H. Eisner, and M. Maier. 2010. "Key Issues of Systems Engineering, Part 3: Proving Your Design." INCOSE Insight 13(2).

Article Discussion

Signatures

--Dholwell 12:58, 6 September 2011 (UTC) core edit

--Jgercken 10:43, 14 September 2011 (UTC)