System Verification

Introductory Paragraph(s)

System Validation

Introduction, Definition and Purpose

Introduction

Validation is a set of actions used to check the compliance of any element to its purpose. The elements can be a Component, a system, a document, a service, a task, a System Requirement, etc. These actions are planned and carried out throughout the life cycle of the system. Validation is a generic term that needs to be instantiated within the context it occurs.

Validation, understood as a process, is an activity transverse to every life cycle stage of the system. In particular, during the development cycle of the system, the Validation Process is performed in parallel with the System Definition and System Realization processes, and applies to any activity and to any product resulting from that activity. The Validation Process generally occurs at the end of a set of life cycle tasks or activities, and at least at the end of every milestone of a development project.

The Validation Process is not limited to a phase at the end of the development of the system. It might be performed on an iterative basis on every produced engineering element during the development and might begin with the validation of the expressed Stakeholders' Requirements as engineering elements.

The Validation Process applied to the completely integrated system is often called Final Validation – see System Integration section 3.4.4.2.1 Figure 7.

Definition and Purpose

The purpose of Validation, as a generic action, is to establish the compliance of any activity output, compared to the inputs of this activity. Validation is used to prove that the transformation of inputs produced the expected, the "right" result.

Validation is based on tangible evidence, that is, on information whose veracity can be demonstrated and on factual results obtained by techniques or methods such as inspection, measurement, test, analysis, calculation, etc.

Validating a system (product, service, enterprise) therefore consists of demonstrating that the product, service or enterprise satisfies its System Requirements. System Validation relates first to System Requirements, and eventually to Stakeholders' Requirements, depending on the contractual practices of the industrial sector concerned. From a global point of view, validating a system consists of acquiring confidence in its ability to achieve its intended mission or use under specific operational conditions.

Several books and standards provide different definitions of Validation. The most generally accepted definition can be found in [ISO-IEC 12207:2008, ISO-IEC 15288:2008, ISO25000:2005, ISO 9000:2005]:

Validation: confirmation, through the provision of objective evidence, that the requirements for a specific intended use or application have been fulfilled. With a note added in ISO 9000:2005: Validation is the set of activities ensuring and gaining confidence that a system is able to accomplish its intended use, goals and objectives (i.e., meet stakeholder requirements) in the intended operational environment.

Principles

Concept of Validation

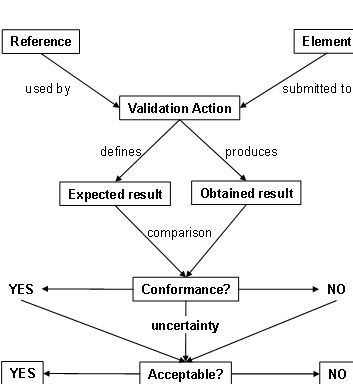

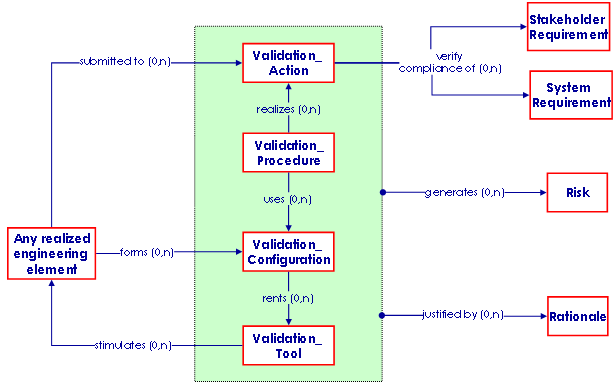

Validation Action – The term "validation" is generic and is used in association with other terms to define engineering elements – see section 3.4.6.3.3. So one uses hereafter the term Validation Action to mention an action of validation. A Validation Action is defined then performed. The definition of a Validation Action applied to an engineering element includes – see Figure 12:

- to identify the element on which the Validation Action will be performed,

- to identify the reference/baseline in order to define the expected result of the Validation Action.

The performance of the Validation Action includes:

- to obtain a result from the performance of the Validation Action onto the submitted element,

- to compare the obtained result with the expected result,

- to deduce a degree of conformance/compliance of the submitted element,

- to decide about the acceptability of this conformance/compliance, because sometimes the result of the comparison may require a judgment of value regarding the relevance in the context of use to accept or not the obtained result (generally analyzing it against a threshold, a limit).

Note: If there is uncertainty about the conformance/compliance, the cause could come from ambiguity in the requirements; the typical example is the case of a measure of effectiveness expressed without a "limit of acceptance" (above or below threshold the measure is declared unfulfilled).
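The definition and performance steps listed above can be sketched in a few lines of code. This is a minimal illustration, not a prescribed implementation: the `ValidationAction` class, its field names, the `perform` function, the requirement identifier and the numeric values are all assumptions introduced for the example. It also shows the situation described in the note, where no limit of acceptance has been stated and conformance cannot be decided objectively.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ValidationAction:
    """Definition of a Validation Action (all field names are illustrative)."""
    element: str                       # element submitted to the action
    reference: str                     # reference/baseline giving the expected result
    expected: float                    # expected result derived from the reference
    tolerance: Optional[float] = None  # limit of acceptance; None if none was stated


def perform(action: ValidationAction, obtained: float) -> str:
    """Compare the obtained result with the expected one and decide acceptability."""
    deviation = abs(obtained - action.expected)
    if action.tolerance is None:
        # Without a limit of acceptance the decision cannot be made objectively.
        return "undecidable"
    return "compliant" if deviation <= action.tolerance else "non-compliant"


range_check = ValidationAction(
    element="vehicle",
    reference="SR-12 (hypothetical requirement identifier)",
    expected=500.0,   # km, hypothetical value
    tolerance=25.0,   # km, hypothetical limit of acceptance
)
print(perform(range_check, obtained=490.0))  # compliant
```

In practice the acceptability decision may also involve a value judgment in the context of use, which a simple threshold comparison like this one does not capture.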

What to validate? – Any engineering element can be validated using a specific reference for comparison: Stakeholder Requirement, System Requirement, Function, Component, Document, etc. Examples:

- Validating a Stakeholder Requirement means making sure its content is justified and relevant to stakeholders' expectations, expressed in the language of the customer or end user.

- Validating a System Requirement means making sure its content correctly and/or accurately translates a Stakeholder Requirement into the language of the supplier.

- Validating the design of a system (functional and physical architectures) means demonstrating that it satisfies its System Requirements.

- Validating a system (product, service, enterprise) means demonstrating that the product, service or enterprise satisfies its System Requirements and/or its Stakeholders' Requirements.

- Validating an activity or a task means making sure its outputs are compliant with its inputs.

- Validating a Document means making sure its content is compliant with the inputs of the task that produced this document.

- Validating a Process means making sure its outcomes are compliant with its purpose.

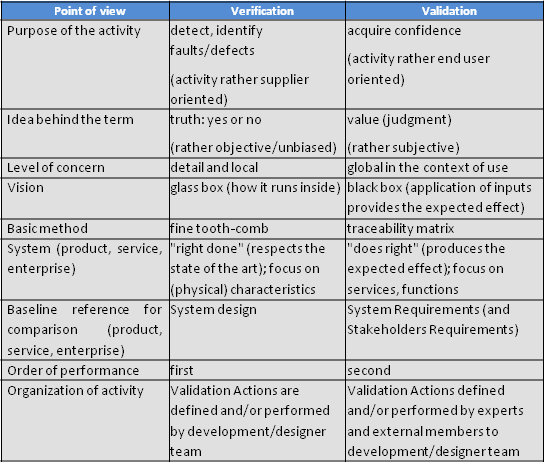

Validation versus Verification

This section discusses the fundamental differences between the two concepts and their associated processes. The following table provides supplementary, summarized information to help understand the differences depending on the point of view.

According to the NASA Systems Engineering Handbook from a process perspective, the product verification and product validation processes may be similar in nature, but the objectives are fundamentally different. (NASA December 2007, 1-360)

System Validation, Final Validation, Operational Validation

System Validation concerns the global system (product, service, enterprise) seen as a whole and is based on the totality of the requirements (System Requirements, Stakeholders' Requirements). It is obtained gradually throughout the development stage of the system by pursuing three non-exclusive ways:

- first by accumulating the results of the Verification Actions and Validation Actions provided by the application of the Verification Process and the Validation Process to every definition element and to every integration element;

- second by performing final Validation Actions on the complete integrated system in an industrial environment (as close as possible to the operational environment);

- third by performing operational Validation Actions in the operational environment. These actions relate to the operational mission of the system and to the acceptance of the system as ready for use or for production. For example, operational Validation Actions must show in the operational environment that a vehicle has the expected autonomy (is able to cover a defined distance), can cross obstacles, performs safety scenarios as required, etc. See right part of Figure 14.

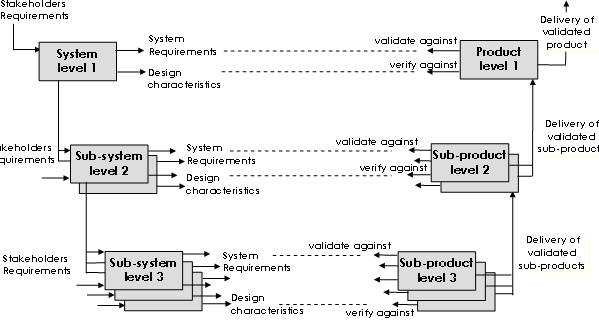

Integration, Verification and Validation level per level

It is impossible to carry out only a single global Validation Action on a complete integrated complex system. The sources of faults/defects could be numerous, and it would be impossible to determine the causes of a non-conformance raised during this global check. Because the System-of-Interest has generally been decomposed during design into a set of blocks and layers of systems and system elements, every sub-system (system, system element) is verified, validated and possibly corrected before being integrated into the parent system block of the higher level, as shown in Figure 13.

As necessary, the sub-systems (systems, system elements) are partially integrated in sub-sets in order to limit the number of properties/characteristics to be verified within a single step - see System Integration section 3.4.4.2. For each level, a set of final Validation Actions is necessary to make sure that the features established at the preceding level are not degraded. Moreover, a compliant result obtained in a given environment (for example, the final validation environment) can become non-compliant if the environment changes (for example, the operational validation environment). So, as long as the sub-system is not completely integrated and/or does not operate in the real operational environment, no result should be regarded as definitive.

During modifications made to a sub-system, the temptation is to focus on the new adapted configuration forgetting the environment and the other configurations. However, a modification can have significant consequences on other configurations. Thus, any modification requires regression Verification Actions and Validation Actions (often called Regression Testing).
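One simple way to identify candidate regression actions after a modification is to walk up the integration hierarchy from the modified element and collect the actions attached to every block that contains it. The sketch below assumes two hypothetical lookup tables, `integrated_into` and `actions_on`, with invented element names and action identifiers. It only covers upward propagation through parent blocks; a real impact analysis would also have to consider other configurations and the environment, as the paragraph above warns.

```python
from collections import deque

# Hypothetical data: the parent block(s) each element is integrated into,
# and the Verification/Validation Actions applied to each element.
integrated_into = {
    "sensor": ["navigation unit"],
    "navigation unit": ["vehicle"],
    "battery": ["vehicle"],
    "vehicle": [],
}
actions_on = {
    "sensor": ["VA-1"],
    "navigation unit": ["VA-2", "VA-3"],
    "battery": ["VA-4"],
    "vehicle": ["VA-5"],
}


def regression_actions(modified):
    """Return the actions to rerun after `modified` changes, walking up the parent blocks."""
    seen = {modified}
    queue = deque([modified])
    selected = []
    while queue:
        element = queue.popleft()
        selected.extend(actions_on.get(element, []))
        for parent in integrated_into.get(element, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return selected


print(regression_actions("sensor"))  # ['VA-1', 'VA-2', 'VA-3', 'VA-5']
```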

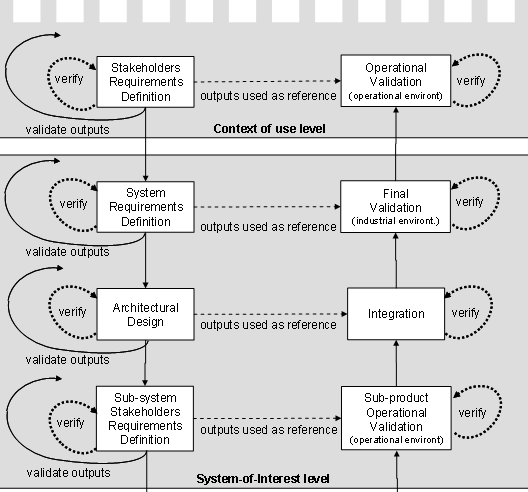

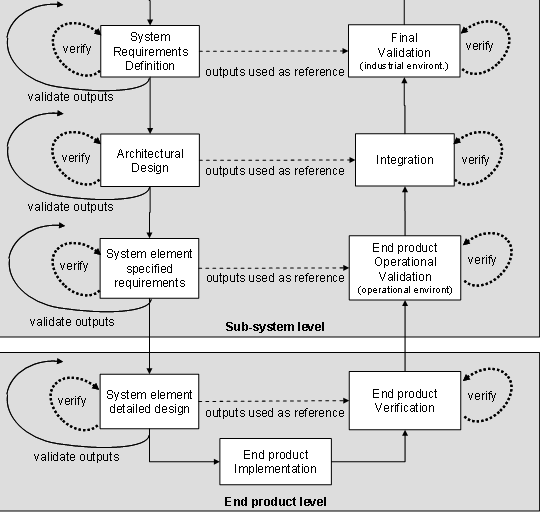

Verification Actions and Validation Actions inside and transverse to levels

Inside each level of system decomposition, Verification Actions and Validation Actions are performed during System Definition and System Realization as represented in Figure 14 for the upper levels and in Figure 15 for lower levels. The Stakeholders Requirements Definition and the Operational Validation make the link between two levels of the system decomposition.

The Specified Requirements Definition of the system elements and the End products Operational Validation make the link between the two lower levels of the decomposition – see Figure 15.

Note 1: The two figures above show an ideal allocation of verification and validation activities on the right part, using the corresponding references provided by the System Definition processes on the left part. In practice, the outputs of the Stakeholders Requirements Definition Process are sometimes not sufficiently formalized, or do not contain sufficient operational scenarios, to serve as a reference for defining operational Validation Actions (to be performed in the operational environment). In this case, the outputs of the System Requirements Definition Process may be used instead.

Note 2: The last level of the system decomposition is dedicated to the Realization of the system elements, and the vocabulary and the number of activities used in Figure 15 may be different – see Implementation section 3.4.3.

Verification and Validation strategy

The notion of Verification and Validation strategy was introduced in section 3.4.5.2.3. The difference between verification and validation is especially useful for elaborating the Integration strategy, the Verification strategy, and the Validation strategy. In fact, the efficiency of System Realization is gained by optimizing the three strategies together to form what is often called the Verification & Validation strategy. The optimization consists of defining and performing the minimum number of Verification Actions and Validation Actions while detecting the maximum number of errors/faults/defects and obtaining the maximum confidence in the use of the product, service or enterprise. The optimization must, of course, take into account the risks generated if Verification Actions or Validation Actions are dropped.
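The trade-off described above can be made concrete with a toy selection heuristic. The sketch below is not the optimization method of any standard: it assumes that each candidate action has an estimated cost and an estimated amount of risk it would cover (both hypothetical figures in arbitrary units), ranks candidates by risk covered per unit of cost, keeps those that fit a budget, and reports the risk left uncovered by the dropped actions. The names `Candidate` and `select_actions` are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    cost: float          # estimated effort to perform the action (must be > 0)
    risk_covered: float  # estimated risk exposure removed if the action is performed


def select_actions(candidates, budget):
    """Greedy heuristic: keep the best risk-covered-per-cost actions that fit the budget."""
    ranked = sorted(candidates, key=lambda c: c.risk_covered / c.cost, reverse=True)
    selected, dropped, spent = [], [], 0.0
    for candidate in ranked:
        if spent + candidate.cost <= budget:
            selected.append(candidate)
            spent += candidate.cost
        else:
            dropped.append(candidate)
    # Risk that remains because some actions were dropped.
    residual_risk = sum(c.risk_covered for c in dropped)
    return selected, dropped, residual_risk


candidates = [
    Candidate("inspection of interface documents", cost=1.0, risk_covered=4.0),
    Candidate("simulation of safety scenario", cost=3.0, risk_covered=9.0),
    Candidate("full operational test", cost=8.0, risk_covered=10.0),
]
selected, dropped, residual = select_actions(candidates, budget=5.0)
print([c.name for c in selected], residual)
```

A greedy ranking is only one possible choice and does not guarantee an optimal selection; the point is that the residual risk of dropped actions is made explicit rather than ignored.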

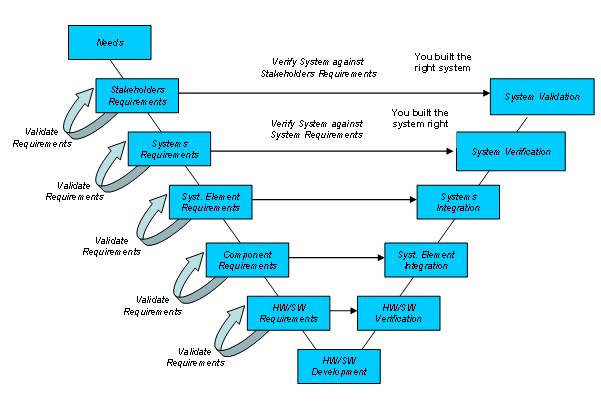

Other similar approaches and adaptation

What is presented in the section above is the formalized approach that is well implemented in several industrial sectors, where its application has demonstrated its efficiency. For historical reasons, some industrial sectors may have (or seem to have) different practices, but analysis shows that they apply the principles presented in the present book to a greater or lesser degree, and that they distinguish actions more or less as identified here. The vocabulary may be slightly different: the terms verification and validation may be inverted or less clearly differentiated than explained above, as may the names of the references/baselines used for comparison. An example of application that uses different vocabulary is illustrated in Figure 16 and in the text below.

Validation is a continuous process, starting at the beginning of product development with the stakeholder requirements (upper part of the V-model), running at each level of the system/product hierarchy to ensure that requirements are properly cascaded to all system components (the left part of the V-model), and ending with the verification of the final product (upper part of the right side of the V-cycle) against the stakeholders' requirements. Validation and verification are therefore concurrent activities which are embedded in the development cycle and difficult to isolate. For this reason, they are very often grouped together and generally expressed as “V&V”. Figure 16 focuses on two major aspects of validation: validation of requirements and validation of the system.

In the present case, the purpose of requirement validation is to ensure that requirements are correct and complete so that the product will meet upper-level requirements and user needs. Requirement validation might be done at all levels of the system hierarchy, using a top-down approach. Validation of requirements and assumptions at higher levels serves as a basis for validation at lower levels. Requirement validation shall be performed as soon as a consistent set of requirements has been developed, rather than being deferred until the complete set of requirements has been established.

In the present case, system validation follows a bottom-up approach and is possible only when all system elements and lower levels have been verified. System (final) validation is therefore the ultimate step of verification, which demonstrates that the system accomplishes its final purpose as stated in the stakeholders' requirements.

Process Approach

Purpose and Principle of the approach

The purpose of the [System] Validation Process is to provide objective evidence that the services provided by a system when in use comply with stakeholder requirements, achieving its intended use in its intended operational environment. (ISO/IEC 2008)

This process performs a comparative assessment and confirms that the stakeholders' requirements are correctly defined. Where variances are identified, these are recorded and guide corrective actions. System validation is ratified by stakeholders. (ISO/IEC 2008)

The validation process demonstrates that the realized end product satisfies its stakeholders' (customers and other interested parties) expectations within the intended operational environments, with validation performed by anticipated operators and/or users. (NASA December 2007, 1-360)

It is possible to generalize the process using an extended purpose as follows: the purpose of the Validation Process applied to any element is to demonstrate or prove that this element complies with its applicable requirements achieving its intended use in its intended operational environment.

Each system element, each sub-system, and the complete system are compared against their own applicable requirements (System Requirements, Stakeholders' Requirements) – see section 3.4.6.2.1. This means that the Validation Process is instantiated as many times as necessary during the global development of the system. The Validation Process occurs at every level of the system decomposition and, as necessary, all along the system development. Because of the generic nature of a process, the Validation Process can be applied to any engineering element that has led to the definition and realization of the system elements, the sub-systems and the system itself.

In order to ensure that validation is feasible, the implementation of requirements must be verifiable on the submitted element. Ensuring that requirements are properly written, i.e. quantifiable, measurable, unambiguous, etc., is essential. In addition, verification/validation requirements are often written in conjunction with Stakeholders' and System Requirements and provide the method for demonstrating the implementation of each System Requirement or Stakeholder Requirement.

The generic inputs are the baseline references of requirements applicable to the submitted element. If the element is a system, the inputs are the System Requirements and Stakeholders' Requirements.

The generic outputs are the Validation Plan that includes the validation strategy, the selected Validation Actions, the Validation Procedures, the Validation Tools, the validated element or system, the validation reports, the issue/trouble reports and change requests on the requirements or on the product, service or enterprise.

Activities of the Process

Major activities and tasks performed during this process include:

- Establish a validation strategy drafted in a Validation Plan (this activity is carried out concurrently with System Definition activities), obtained by the following tasks:

  - Identify the validation scope that is represented by the [System and/or Stakeholders'] Requirements; normally, every requirement should be checked; the number of Validation Actions can be high;

  - Identify the constraints according to their origin (technical feasibility; management constraints such as cost, time, availability of validation means or qualified personnel; contractual constraints such as criticality of the mission) that potentially limit or increase the Validation Actions;

  - Define the appropriate verification/validation techniques to be applied, such as inspection, analysis, simulation, review, testing, etc., depending on the best step of the project at which to perform every Validation Action according to the constraints;

  - Trade off what should be validated (scope) against all the constraints or limits and deduce what can be validated objectively; the selection of Validation Actions is made according to the type of system, the objectives of the project, acceptable risks and constraints;

  - Optimize the validation strategy by defining the most appropriate verification/validation technique for every Validation Action, defining the necessary validation means (tools, test-benches, personnel, location, facilities) according to the selected validation technique, scheduling the execution of the Validation Actions in the project steps or milestones, and defining the configuration of the elements submitted to Validation Actions (mainly for testing on physical elements).

- Perform the Validation Actions, which includes the following tasks:

  - Detail each Validation Action, in particular the expected results, the verification/validation technique to be applied and the corresponding means (equipment, resources and qualified personnel);

  - Acquire the validation means used during the system definition steps (qualified personnel, modeling tools, mock-ups, simulators, facilities), then those used during the integration, final and operational steps (qualified personnel, Validation Tools, measuring equipment, facilities, Validation Procedures, etc.);

  - Carry out the Validation Procedures at the right time, in the expected environment, with the expected means, tools and techniques;

  - Capture and record the results obtained when performing the Validation Actions using Validation Procedures and means.

- Analyze the obtained results and compare them to the expected results; decide about the acceptability of the conformance/compliance – see section 3.4.6.2.1; record the decision and the status (compliant or not); generate validation reports and potential issue/trouble reports and change requests on the [System or Stakeholder] Requirements as necessary.

- Control the process, which includes the following tasks:

  - Update the Validation Plan according to the progress of the project; in particular, the planned Validation Actions can be redefined because of unexpected events (addition, deletion or modification of actions);

  - Coordinate the validation activities with the project manager for schedule, acquisition of means, personnel and resources, with the designers for issue/trouble/non-conformance reports, and with the configuration manager for versions of physical elements, design baselines, etc.

Artifacts and Ontology Elements

This process may create several artifacts such as:

- Validation Plan (contains in particular the validation strategy with objectives, constraints, the list of the selected Validation Actions, etc.)

- Validation Matrix (contains for each Validation Action, the submitted element, the applied technique / method, the step of execution, the system block concerned, the expected result, the obtained result, etc.)

- Validation Procedures (describe the Validation Actions to be performed, the Validation Tools needed, the Validation Configuration, resources, personnel, schedule, etc.)

- Validation Reports

- Validation Tools

- Validated element (system, system element, sub-system, etc.)

- Issue / Non Conformance / Trouble Reports

- Change Requests on requirement, product, service, enterprise

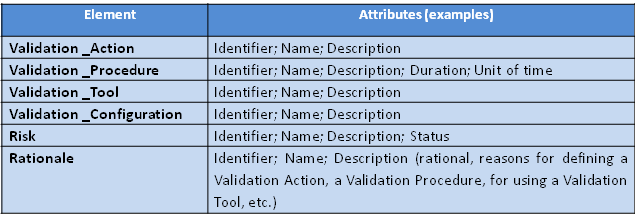

This process handles the ontology elements of Table 5.

The main relationships between ontology elements are presented in Figure 17.

Checking and Correctness of Validation

The main items to be checked during the validation process concern the items produced by the validation process (we could speak about verification of validation):

- The Validation Plan, the Validation Actions, the Validation Procedures and the validation reports conform to their corresponding templates.

- Every validation activity has been planned, performed, recorded and has generated outcomes as defined in the process description above.

Methods and Techniques

There are several verification/validation techniques/methods to check that an element or a system complies with its [System, Stakeholders] Requirements. These techniques are the same as those used for verification, but the purposes are different: verification is used to detect faults/defects, whereas validation is used to prove satisfaction of [System and/or Stakeholders] Requirements. Refer to section 3.4.5.3.5.

Validation/Traceability Matrix – The traceability matrix is introduced in the section xxxx of the Stakeholders Requirements Definition topic. It may also be extended and used to record data such as the list of Validation Actions, the verification/validation technique selected to verify/validate the implementation of every engineering element (in particular Stakeholder and System Requirements), the expected results, and the results obtained when the Validation Action has been performed. The use of such a matrix enables the development team to ensure that the selected Stakeholders' and System Requirements have been verified, or to evaluate the percentage of Validation Actions completed. In addition, the matrix helps to check the performed Validation activities against the planned activities as outlined in the Validation Plan, and finally to ensure that System Validation has been appropriately conducted.
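As an illustration of how such a matrix supports these checks, the sketch below models each row as a small record and computes the percentage of Validation Actions completed, along with the requirements that do not yet have a compliant result. The `MatrixRow` fields, the function names, the requirement identifiers and the sample values are assumptions made for the example; an actual matrix would carry whatever columns the project defines.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MatrixRow:
    requirement: str                  # hypothetical requirement identifier
    action: str                       # Validation Action identifier
    technique: str                    # e.g. inspection, analysis, test
    expected: str                     # expected result
    obtained: Optional[str] = None    # None until the action has been performed
    compliant: Optional[bool] = None  # None until a decision has been recorded


def completion_rate(rows):
    """Percentage of Validation Actions that have a recorded result."""
    if not rows:
        return 0.0
    done = sum(1 for row in rows if row.obtained is not None)
    return 100.0 * done / len(rows)


def unvalidated_requirements(rows):
    """Requirements for which no Validation Action has been recorded as compliant."""
    validated = {row.requirement for row in rows if row.compliant}
    return sorted({row.requirement for row in rows} - validated)


matrix = [
    MatrixRow("STK-1", "VA-1", "inspection", "content justified", "content justified", True),
    MatrixRow("SYS-1", "VA-2", "test", "range >= 500 km", "range 490 km", False),
    MatrixRow("SYS-2", "VA-3", "analysis", "mass <= 1200 kg"),
    MatrixRow("SYS-2", "VA-4", "test", "mass <= 1200 kg"),
]
print(completion_rate(matrix))           # 50.0
print(unvalidated_requirements(matrix))  # ['SYS-1', 'SYS-2']
```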

Application to Product systems, Service systems, Enterprise systems

See section 3.4.5.4

Practical Considerations

Pitfalls encountered with system validation:

- A common mistake is to wait until the system has been entirely integrated and tested (design is qualified) to perform any sort of validation. Validation should occur as early as possible in the [product] life cycle. (Martin 1997)

- Using only testing as a validation technique. Testing can check products and services only once they are implemented. Consider other techniques earlier, during design; analysis and inspections are cost effective and allow potential errors, faults or failures to be discovered early.

- Stopping the performance of Validation Actions when budget and/or time are consumed. Prefer using criteria such as coverage rates to end the validation activity.

Proven practices:

- The earlier the characteristics of an element are verified and validated in the project, the easier corrections are to make and the smaller the consequences of errors on schedule and cost.

- It is recommended to start drafting the Verification and Validation Plan as soon as the first requirements applicable to the system are known. If the writer of the requirements immediately asks how to verify/validate whether the future system will meet the requirements, it is possible to:

  - detect the unverifiable requirements,

  - anticipate, estimate the cost of, and start the design of verification/validation means (as needed) such as test-benches, simulators, …

  - avoid cost overruns and schedule slippages.

- According to Buede, a requirement is verifiable if a “finite, cost-effective process has been defined to check that the requirement has been attained.” (Buede 2009) Generally, this means that each requirement should be quantitative, measurable, unambiguous, understandable, and testable. It is generally much easier and more cost-effective to ensure that requirements meet these criteria while they are being written. Requirements adjustments made after implementation and/or integration are generally much more costly and may have wide-reaching redesign implications. There are several resources which provide guidance on creating appropriate requirements - see the System Definition knowledge area, Stakeholder Requirements and System Requirements topics for additional information.

- It is important to document both the Validation Actions performed and the results obtained. This provides accountability regarding the extent to which the system, system elements and subsystems fulfill System Requirements and Stakeholders' Requirements. These data can be used to investigate why the system, system elements or subsystems do not match the requirements and to detect potential faults/defects. When requirements are met, these data may be reported to the relevant parties. For example, in a safety-critical system, it may be necessary to report the results of safety demonstrations to a certification organization. Validation results may be reported to the acquirer for contractual purposes, or internally within the company for business purposes.
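The practice of detecting unverifiable requirements while they are being written can be supported by a very crude automated screen. The sketch below is only a heuristic and does not implement Buede's definition of verifiability: it assumes a hypothetical `Requirement` record and flags a requirement when no verification/validation technique has been planned for it, or when its text contains no number (taken here as a rough sign that no quantified acceptance limit is stated). Human review remains necessary.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Requirement:
    identifier: str                  # hypothetical identifier
    text: str
    technique: Optional[str] = None  # planned verification/validation technique, if any


# Assumption of this sketch: a quantified requirement contains at least one digit.
HAS_NUMBER = re.compile(r"\d")


def verifiability_issues(req):
    """List reasons why a requirement may not be verifiable as written."""
    issues = []
    if req.technique is None:
        issues.append("no verification/validation technique defined")
    if not HAS_NUMBER.search(req.text):
        issues.append("no quantified acceptance limit found")
    return issues


quantified = Requirement("SYS-7", "The vehicle shall cover at least 500 km on one charge.", "test")
vague = Requirement("SYS-8", "The vehicle shall be easy to maintain.")

print(verifiability_issues(quantified))  # []
print(verifiability_issues(vague))
```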

Practical Considerations

The following are elements that should be considered when practicing any of the activities discussed as part of system realization:

- Validation will often involve going back directly to the users to have them perform some sort of acceptance test under their own local conditions.

- Mixing verification and validation is a common issue. Validation demonstrates that the product, service, enterprise as provided, fulfils its intended use, whereas verification addresses whether a local work product properly reflects its specified requirements. In other words, verification ensures that “one built the system right” whereas validation ensures that “one built the right system.” Validation Actions use the same techniques as the Verification Actions (e.g., test, analysis, inspection, demonstration, or simulation).

Often the end users and other relevant stakeholders are involved in the validation activities. Both validation and verification activities (often) run concurrently and may use portions of the same environment. (SEI 2007)

- Include identification of the document(s)/drawing(s) to more easily make the comparison between what is required versus what is being inspected.

- Identify the generic name of the analysis (like Failure Modes Effects Analysis), analytical/computer tools, and/or numeric methods, the source of input data, and how raw data will be analyzed. Ensure agreement with the acquirer that the analysis methods and tools, including simulations, are acceptable for the provision of objective proof of requirements compliance.

- State who the witnesses will be for the purpose of collecting the evidence of success, what general steps will be followed, and what special resources are needed, such as instrumentation, special test equipment or facilities, simulators, specific data gathering, or rigorous analysis of demonstration results.

- Identify the test facility, test equipment, any unique resource needs and environmental conditions, required qualifications and test personnel, general steps that will be followed, specific data to be collected, criteria for repeatability of collected data, and methods for analyzing the results.

Primary References

Buede, D. M. 2009. The engineering design of systems: Models and methods. 2nd ed. Hoboken, NJ: John Wiley & Sons Inc.

DAU. February 19, 2010. Defense acquisition guidebook (DAG). Ft. Belvoir, VA, USA: Defense Acquisition University (DAU)/U.S. Department of Defense.

ECSS. 6 March 2009. Systems engineering general requirements. Noordwijk, Netherlands: Requirements and Standards Division, European Cooperation for Space Standardization (ECSS), ECSS-E-ST-10C.

INCOSE. 2010. INCOSE systems engineering handbook, version 3.2. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2003-002-03.2.

ISO/IEC. 2008. Systems and software engineering - system life cycle processes. Geneva, Switzerland: International Organization for Standardization (ISO)/International Electrotechnical Commission (IEC), ISO/IEC 15288:2008 (E).

NASA. December 2007. Systems engineering handbook. Washington, D.C.: National Aeronautics and Space Administration (NASA), NASA/SP-2007-6105.

SAE International. 1996. Certification considerations for highly-integrated or complex aircraft systems. Warrendale, PA, USA: SAE International, ARP475

Additional References and Readings

DAU. Your acquisition policy and discretionary best practices guide. In Defense Acquisition University (DAU)/U.S. Department of Defense (DoD) [database online]. Ft Belvoir, VA, USA, 2009 Available from https://dag.dau.mil/Pages/Default.aspx (accessed 2010).

Gold-Bernstein, B., and W. A. Ruh. 2004. Enterprise integration: The essential guide to integration solutions. Boston, MA, USA: Addison Wesley Professional.

Grady, J. O. 1994. System integration. Boca Raton, FL, USA: CRC Press, Inc.

Martin, J. N. 1997. Systems engineering guidebook: A process for developing systems and products. 1st ed. Boca Raton, FL, USA: CRC Press.

Prosnik, G. 2010. Materials from "systems 101: Fundamentals of systems engineering planning, research, development, and engineering". DAU distance learning program. eds. J. Snoderly, B. Zimmerman. Ft. Belvoir, VA, USA: Defense Acquisition University (DAU)/U.S. Department of Defense (DoD).

SEI. 2007. Capability maturity model integrated (CMMI) for development, version 1.2, measurement and analysis process area. Pittsburgh, PA, USA: Software Engineering Institute (SEI)/Carnegie Mellon University (CMU).

Glossary

Acronyms

| Acronym | Definition |
| --- | --- |
| DAU | U.S. Defense Acquisition University |
| DoD | U.S. Department of Defense |
| ESB | Enterprise Service Bus |
| INCOSE | International Council on Systems Engineering |
| IV&V | Integration, Verification, & Validation |
| NASA | U.S. National Aeronautics and Space Administration |
| PHS&T | Packaging, Handling, Storage, and Transportation |
| SOI | System-of-Interest |
| SoS | System-of-Systems |
| V&V | Verification & Validation |

Terminology

Aggregate—an aggregate is a subset of the system made up of several physical Components and Links (indiscriminately system elements or sub-systems) on which a set of Verification Actions is applied.

Assembly procedure—an assembly procedure groups a set of elementary assembly actions to build an Aggregate of physical Components and Links.

Assembly tool—an assembly tool is a physical tool used to connect, assemble or link several Components and Links to build Aggregates (specific tool, harness, etc.).

Implementation—the process that actually yields the lowest-level system elements in the system hierarchy (system breakdown structure)

Integration—a process that combines system elements to form complete or partial system configurations in order to create a product specified in the system requirements. (ISO/IEC 2008)

System realization—includes the activities required to build a system, integrate disparate system elements, and ensure that the system both meets the Stakeholders Requirements and System requirements, and aligns with the design properties identified or defined in the System Definition processes.

Validation—the process of ensuring that the system achieved its intended use in its operational environment and conditions.

Validation plan—A document which explains how the validation data will be used to determine that the realized system (product, service, or enterprise) complies with the System Requirements and/or Stakeholders Requirements.

Verification—the process of ensuring that a system is built according to its specified requirements and/or design characteristics.

Validation action—A validation action describes what must be validated (the element as reference), on which element, the expected result, the validation/verification technique to apply, on which level of decomposition.

Verification action—A verification action describes what must be verified (the element as reference), on which element, the expected result, the verification technique to apply, on which level of decomposition.

Validation configuration—A validation configuration groups all physical elements (system elements, sub-systems, system and Validation Tools) necessary to perform a Validation Procedure.

Verification configuration—A verification configuration groups all physical elements (Aggregates and Verification Tools) necessary to perform a Verification Procedure.

Validation procedure—a validation procedure groups a set of Validation Actions performed together (as a scenario of tests) in a given Validation Configuration.

Verification procedure—a verification procedure groups a set of Verification Actions performed together (as a scenario of tests) in a given Verification Configuration.

Validation tool—a validation tool is a device or physical tool used to perform Validation Procedures (test bench, simulator, cap/stub, launcher, etc.).

Verification tool—a verification tool is a device or physical tool used to perform Verification Procedures (test bench, simulator, cap/stub, launcher, etc.).