Decision Management

Many systems engineering decisions are difficult because they include numerous stakeholders, multiple competing objectives, substantial uncertainty, and significant consequences. In these cases, good decision making requires a formal decision management process. The purpose of the decision management process is

“…to provide a structured, analytical framework for identifying, characterizing and evaluating a set of alternatives for a decision at any point in the life-cycle and select the most beneficial course of action.” (ISO/IEC 15288:2008)

Decision situations (opportunities) are commonly encountered throughout a system’s lifecycle. The decision management method most commonly employed by systems engineers is the trade study. Trade studies aim to define, measure, and assess shareholder and stakeholder value to facilitate the decision maker’s search for an alternative that represents the best balance of competing objectives. By providing techniques to decompose a trade decision into logical segments and then synthesize the parts into a coherent whole, a decision management process allows the decision maker to work within human cognitive limits without oversimplifying the problem. Furthermore, by decomposing the overall decision problem, experts can provide assessments of alternatives in their area of expertise.

Decision Management Process

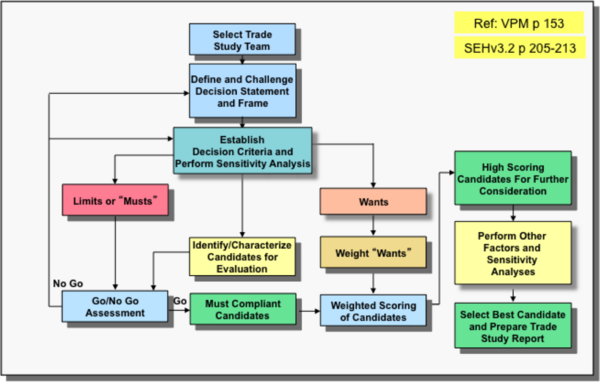

The decision management process is depicted in Figure 1. The decision management approach is based on several best practices:

- Use sound mathematical techniques of decision analysis for trade-off studies. Parnell (2009) provides a list of decision analysis concepts and techniques.

- Develop one master decision model and refine, update, and use it as required for trade studies throughout the system development life cycle.

- Use Value-Focused Thinking (Keeney 1992) to create better alternatives.

- Identify uncertainty and assess risks for each decision.

Figure 1 Decision Management Process

The center of the diagram shows the five trade space objectives. The ten blue arrows represent the Decision Management Process activities, and the white text within the green ring represents SE process elements. Interactions are represented by the small, dotted green or blue arrows. The decision management process is iterative. A hypothetical UAV decision problem is used to illustrate each of the activities in the following sections.

Framing & Tailoring Decision

To ensure that the decision makers and stakeholders fully understand the decision context, the analyst should describe the system baseline, boundaries, and interfaces. The decision context includes the decision milestones, a list of decision makers and stakeholders, and available resources. The best practice is to identify a decision problem statement that defines the decision in terms of the system life cycle.

Developing Objectives & Measures

Defining how an important decision will be made is difficult. As Keeney puts it:

Most important decisions involve multiple objectives, and usually with multiple-objective decisions, you can't have it all. You will have to accept less achievement in terms of some objectives in order to achieve more on other objectives. But how much less would you accept to achieve how much more? (Keeney 2002)

The first step is to develop objectives and measures using interviews and focus groups with subject matter experts (SMEs) and stakeholders. For systems engineering trade-off analyses, stakeholder value often includes the competing objectives of performance, development schedule, unit cost, support costs, and growth potential. For corporate decisions, shareholder value would be added to this list. For performance, a functional decomposition can help generate a thorough set of potential objectives. Test this initial list of fundamental objectives by checking that each fundamental objective is essential and controllable and that the set of objectives is complete, non-redundant, concise, specific, and understandable (Edwards et al. 2007). Figure 2 provides an objectives hierarchy example.

The next step is to define the desirable characteristics of the solution and develop a relative weighting for them. If no weighting is used, all criteria are implicitly treated as equally important. A common fatal flaw is creating too many criteria (15, 20, or more), which tends to obscure important differences among the candidate solutions. Once the relative comparison is complete, scores within five percent of each other should be treated as essentially equal. An opportunity and risk assessment should be performed on the best candidates, along with a sensitivity analysis on the scores and weights, so that the robustness (or fragility) of the decision is known.
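The weight normalization and five-percent rule above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the criteria names, raw point values, and candidate scores are hypothetical, and "within five percent" is interpreted here (an assumption) as within five percent of the best score.

```python
from typing import Dict, List


def normalize_weights(raw: Dict[str, float]) -> Dict[str, float]:
    """Scale raw importance points so that the weights sum to 1.0."""
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: value / total for name, value in raw.items()}


def near_ties(scores: Dict[str, float], tolerance: float = 0.05) -> List[str]:
    """Return the alternatives whose score is within `tolerance` (a fraction)
    of the best score. Assumption: these are treated as essentially equal."""
    best = max(scores.values())
    return sorted(name for name, s in scores.items() if best - s <= tolerance * best)


# Hypothetical raw importance points for three criteria.
weights = normalize_weights({"performance": 50, "schedule": 30, "cost": 20})
print(weights)  # {'performance': 0.5, 'schedule': 0.3, 'cost': 0.2}

# Hypothetical total weighted scores for four candidate solutions.
scores = {"A": 7.1, "B": 6.9, "C": 5.4, "D": 6.7}
print(near_ties(scores))  # ['A', 'B']
```

Here B falls within five percent of the leader and should be carried forward with A into the risk and sensitivity assessment rather than discarded.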

There are a number of approaches, starting with Pugh's method, in which each candidate solution is compared to a reference standard (the datum) and rated as equal to, better than, or worse than it on each criterion (Pugh 1981). An alternative approach is the Kepner-Tregoe decision matrix, which is described by Forsberg, Mooz, and Cotterman (2005, 154-155).
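A Pugh comparison can be tallied as shown below. This sketch assumes the common convention of scoring +1 (better), 0 (same), and -1 (worse) against the datum, with unweighted criteria; the concepts, criteria, and ratings are hypothetical. The net score is a screening aid for discussion, not a final ranking.

```python
from typing import Dict

# Ratings relative to the reference (datum) concept.
BETTER, SAME, WORSE = 1, 0, -1


def pugh_summary(ratings: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, int]]:
    """Count pluses, minuses, and the net score for each candidate concept."""
    summary = {}
    for candidate, by_criterion in ratings.items():
        values = list(by_criterion.values())
        summary[candidate] = {
            "plus": values.count(BETTER),
            "minus": values.count(WORSE),
            "net": sum(values),
        }
    return summary


# Hypothetical concepts rated against a datum on four criteria.
ratings = {
    "Concept 1": {"range": BETTER, "endurance": SAME, "cost": WORSE, "weight": BETTER},
    "Concept 2": {"range": SAME, "endurance": BETTER, "cost": BETTER, "weight": SAME},
    "Concept 3": {"range": WORSE, "endurance": WORSE, "cost": BETTER, "weight": SAME},
}
for name, row in pugh_summary(ratings).items():
    print(name, row)
```

Keeping the plus and minus counts alongside the net score shows whether a concept wins by being broadly better or by trading a few strengths against weaknesses.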

In the preceding example, all criteria are compared to the highest-value criterion. Another approach is to create a full matrix of pairwise comparisons of all criteria against each other and, from that matrix, calculate their relative importance using the analytic hierarchy process (AHP). The process is still judgmental, and the results are no more accurate, but several computerized decision support tools have made effective use of it (Saaty 2008).
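The AHP weight calculation can be sketched as follows, assuming a reciprocal pairwise comparison matrix in which entry [i][j] states how many times more important criterion i is than criterion j. The priority vector is approximated by power iteration toward the principal eigenvector, and the consistency ratio uses Saaty's random index values; the judgments are hypothetical, and commercial AHP tools may use different numerical methods.

```python
from typing import List, Tuple

# Saaty's random consistency index (RI) by matrix size n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_priorities(matrix: List[List[float]], iterations: int = 100) -> Tuple[List[float], float]:
    """Return (priority weights, consistency ratio) for a reciprocal
    pairwise comparison matrix, via power iteration on the matrix."""
    n = len(matrix)
    if n not in RANDOM_INDEX:
        raise ValueError("random index table covers n = 1..9 only")
    w = [1.0 / n] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        w = [x / total for x in product]
    # Estimate the principal eigenvalue as the mean of (A w)_i / w_i.
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    # Clamp tiny negative values caused by floating-point noise.
    ci = max(0.0, (lambda_max - n) / (n - 1)) if n > 1 else 0.0
    cr = ci / RANDOM_INDEX[n] if RANDOM_INDEX[n] > 0 else 0.0
    return w, cr


# Hypothetical judgments for the criteria Better, Faster, Cheaper.
judgments = [
    [1.0, 5 / 3, 5 / 2],
    [3 / 5, 1.0, 3 / 2],
    [2 / 5, 2 / 3, 1.0],
]
weights, cr = ahp_priorities(judgments)
print([round(x, 3) for x in weights], round(cr, 3))
```

Because these hypothetical judgments are perfectly consistent (each entry is the ratio of two underlying weights), the sketch recovers weights of 0.5, 0.3, and 0.2 with a consistency ratio of essentially zero. Saaty's commonly cited rule of thumb is to revisit the judgments when the consistency ratio exceeds about 0.10.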

Probability-Based Decision Analysis

Probability-based decision analysis is used when a decision must be made under uncertainty. Techniques and tools for such decisions include probability theory, utility functions, decision tree analysis, modeling, and simulation. A classic, mathematically oriented reference on decision trees and probability analysis is Raiffa (1997). Another classic introduction, with more of an applied focus, is Schlaifer (1969). Modeling and simulation are covered in the popular textbook Simulation Modeling and Analysis (Law 2007), which also has good coverage of Monte Carlo analysis. Some of the more commonly used, fundamental methods are summarized below.
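As a small illustration of Monte Carlo analysis, the sketch below estimates the distribution of a total cost whose elements are uncertain. Each element is modeled with a triangular (low, most likely, high) distribution, a common but assumed choice; the element names, values, and the $3.0M budget are hypothetical. A fixed random seed makes the run repeatable.

```python
import random
import statistics
from typing import List, Tuple

# Hypothetical cost elements in $M as (low, most likely, high) estimates.
COST_ELEMENTS: List[Tuple[float, float, float]] = [
    (1.0, 1.5, 2.5),  # airframe
    (0.5, 0.8, 1.6),  # avionics
    (0.3, 0.4, 0.9),  # integration and test
]


def simulate_total_cost(trials: int = 10_000, seed: int = 1) -> List[float]:
    """Sample each element from a triangular distribution and sum them per trial."""
    rng = random.Random(seed)
    totals = []
    for _ in range(trials):
        # random.triangular takes its arguments in the order (low, high, mode).
        totals.append(sum(rng.triangular(lo, hi, mode) for lo, mode, hi in COST_ELEMENTS))
    return totals


totals = simulate_total_cost()
budget = 3.0  # hypothetical budget in $M
print(f"mean total cost: {statistics.mean(totals):.2f} $M")
print(f"P(cost > budget): {sum(t > budget for t in totals) / len(totals):.1%}")
```

The same pattern extends to payoffs or schedules: sample each uncertain input, compute the outcome, and summarize the resulting distribution rather than a single point estimate.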

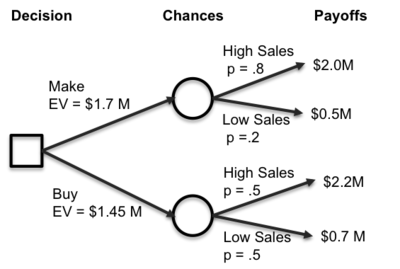

Decision trees and influence diagrams are visual analytical decision support tools in which the expected values (or expected utilities) of competing alternatives are calculated. A decision tree uses a tree-like graph to model decisions and their possible consequences, including chance event outcomes, resource costs, and utility. Influence diagrams are a more compact graphical alternative to decision trees for representing decision models.

Figure 2 shows a simplified make vs. buy decision tree and the associated calculations. Suppose making a product costs $200K more than buying an alternative off the shelf, which is reflected as a $200K difference in the net payoffs in the figure. The custom development is also expected to be a better product, with a correspondingly higher probability of high sales: 80% vs. 50% for the bought alternative. With these assumptions, the expected monetary value of the make alternative is (0.8 × $2.0M) + (0.2 × $0.5M) = $1.7M, and that of the buy alternative is (0.5 × $2.2M) + (0.5 × $0.7M) = $1.45M, so make is preferred on expected monetary value alone.
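The rollback calculation for the make vs. buy tree can be expressed as a short Python sketch. The probabilities and net payoffs are those given in the text; the data layout and function names are illustrative choices, not part of the source.

```python
from typing import Dict, List, Tuple

# Each alternative maps to its chance outcomes: (probability, net payoff in $M).
Tree = Dict[str, List[Tuple[float, float]]]

MAKE_VS_BUY: Tree = {
    "make": [(0.8, 2.0), (0.2, 0.5)],  # high sales, low sales
    "buy": [(0.5, 2.2), (0.5, 0.7)],
}


def expected_value(outcomes: List[Tuple[float, float]]) -> float:
    """Roll back a chance node: the probability-weighted sum of its payoffs."""
    if abs(sum(p for p, _ in outcomes) - 1.0) > 1e-9:
        raise ValueError("branch probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)


def best_alternative(tree: Tree) -> Tuple[str, Dict[str, float]]:
    """At the decision node, choose the alternative with the highest expected value."""
    values = {name: expected_value(outcomes) for name, outcomes in tree.items()}
    return max(values, key=values.get), values


choice, values = best_alternative(MAKE_VS_BUY)
print(choice, {k: round(v, 2) for k, v in values.items()})
# prints: make {'make': 1.7, 'buy': 1.45}
```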

Influence diagrams focus attention on the issues and on the relationships between events. They generalize Bayesian networks by adding decision and utility nodes, so that maximum expected utility criteria can be modeled. Detwarasiti and Shachter (2005, 207-228) is a good reference on using influence diagrams in team decision analysis. Utility is a measure of relative satisfaction that takes into account the decision-maker's preference function, which may be nonlinear. Expected utility theory deals with the analysis of choices with multidimensional outcomes. When dealing with monetary value, the analyst can determine the decision-maker's utility for money and select the alternative course of action that yields the highest expected utility rather than the highest expected monetary value. Decisions with Multiple Objectives: Preferences and Value Tradeoffs is a classic reference on applying multiple objective methods, utility functions, and allied techniques (Keeney and Raiffa 1976). References with applied examples of decision tree analysis and utility functions include Managerial Decision Analysis (Samson 1988) and Introduction to Decision Analysis (Skinner 1999).
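To show how expected utility can differ from expected monetary value, the sketch below applies an exponential utility function to the make vs. buy payoffs from Figure 2 and converts each expected utility back into a certainty equivalent (the sure amount the decision maker would accept in place of the gamble). The exponential form and the $1M risk tolerance are assumptions chosen for illustration, not values from the source.

```python
import math
from typing import List, Tuple

Outcomes = List[Tuple[float, float]]  # (probability, payoff in $M)


def utility(x: float, risk_tolerance: float) -> float:
    """Exponential utility; concave (risk averse) for risk_tolerance > 0."""
    return 1.0 - math.exp(-x / risk_tolerance)


def certainty_equivalent(outcomes: Outcomes, risk_tolerance: float) -> float:
    """Sure payoff whose utility equals the gamble's expected utility."""
    eu = sum(p * utility(x, risk_tolerance) for p, x in outcomes)
    # Invert u(CE) = EU for the exponential form.
    return -risk_tolerance * math.log(1.0 - eu)


make = [(0.8, 2.0), (0.2, 0.5)]
buy = [(0.5, 2.2), (0.5, 0.7)]
R = 1.0  # hypothetical risk tolerance in $M
for name, gamble in (("make", make), ("buy", buy)):
    ev = sum(p * x for p, x in gamble)
    print(f"{name}: EV = {ev:.2f}, CE = {certainty_equivalent(gamble, R):.2f}")
```

With this risk tolerance, both certainty equivalents fall below their expected values, reflecting risk aversion, and make still ranks first. The ranking can change for a sufficiently small risk tolerance, because a very risk-averse decision maker focuses on the worst outcome ($0.5M for make vs. $0.7M for buy).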

Systems engineers often have to consider many different criteria when making choices about system trade-offs. Taken individually, these criteria could lead to very different choices. A weighted objectives analysis provides a rational basis for incorporating multiple criteria into the decision. In this analysis, each criterion is weighted according to its importance relative to the others. Table 1 shows an example that uses criteria weights, ratings, weighted ratings, and weighted totals to decide between two alternatives.

| Criteria | Weight | Alternative A: Rating | Alternative A: Weight × Rating | Alternative B: Rating | Alternative B: Weight × Rating |
|---|---|---|---|---|---|
| Better | 0.5 | 4 | 2.0 | 10 | 5.0 |
| Faster | 0.3 | 8 | 2.4 | 5 | 1.5 |
| Cheaper | 0.2 | 5 | 1.0 | 3 | 0.6 |
| Total Weighted Score | | | 5.4 | | 7.1 |
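The weighted totals in Table 1 can be reproduced with a short calculation. This sketch multiplies each rating by its criterion weight and sums over the criteria; the dictionary layout is an illustrative choice.

```python
from typing import Dict

WEIGHTS = {"Better": 0.5, "Faster": 0.3, "Cheaper": 0.2}
RATINGS = {
    "Alternative A": {"Better": 4, "Faster": 8, "Cheaper": 5},
    "Alternative B": {"Better": 10, "Faster": 5, "Cheaper": 3},
}


def weighted_total(weights: Dict[str, float], ratings: Dict[str, float]) -> float:
    """Sum of weight * rating over all criteria."""
    return sum(weights[c] * ratings[c] for c in weights)


totals = {alt: weighted_total(WEIGHTS, r) for alt, r in RATINGS.items()}
for alt, total in totals.items():
    print(f"{alt}: {total:.1f}")
# Alternative A: 5.4
# Alternative B: 7.1
```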

There are numerous other methods used in decision analysis. One of the simplest is sensitivity analysis, which examines how the outcomes respond to their probabilities and other inputs to determine how sensitive a decision is to changes in input values. Value-of-information methods concentrate data analysis and modeling effort where additional information would most improve the optimal expected value. Multi-Attribute Utility Analysis (MAUA) is a method that develops equivalencies between dissimilar units of measure. Systems Engineering Management (Blanchard 2004b) shows a variety of these decision analysis methods in many technical decision scenarios. A comprehensive reference demonstrating decision analysis methods for software-intensive systems is "Software Risk Management: Principles and Practices" (Boehm 1981, 32-41). It is a major resource for information on multiple-goal decision analysis, dealing with uncertainties, risks, and the value of information.
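A one-way sensitivity analysis on the make vs. buy example from Figure 2 can be sketched by varying the make alternative's probability of high sales while holding every other input at its stated value. The sweep points are arbitrary illustration values; the breakeven follows from setting the two expected values equal.

```python
def ev_make(p_high: float) -> float:
    """Expected value ($M) of 'make' as a function of P(high sales)."""
    return p_high * 2.0 + (1.0 - p_high) * 0.5


EV_BUY = 0.5 * 2.2 + 0.5 * 0.7  # $1.45M, held fixed in this one-way analysis

# Sweep the uncertain input and report where the preferred alternative changes.
for p in [0.5, 0.6, 0.7, 0.8, 0.9]:
    preferred = "make" if ev_make(p) > EV_BUY else "buy"
    print(f"P(high) = {p:.1f}: EV(make) = {ev_make(p):.2f}, preferred = {preferred}")

# Closed-form breakeven: p * 2.0 + (1 - p) * 0.5 = 1.45  ->  p = (1.45 - 0.5) / 1.5
breakeven = (EV_BUY - 0.5) / (2.0 - 0.5)
print(f"breakeven P(high) = {breakeven:.3f}")  # about 0.633
```

Because the estimated 80% lies well above the breakeven of roughly 63%, the make decision is fairly robust to errors in that probability estimate.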

Facets of a decision situation that cannot be captured by a quantitative model should be left to the intuition and judgment of the decision maker. Sometimes outside parties are also called upon. One method to canvass experts, known as the Delphi Technique, is a method of group decision making and forecasting that involves successively collecting and analyzing the judgments of experts. A variant called the Wideband Delphi Technique, described by Boehm (1981, 32-41), improves upon the standard Delphi with more rigorous iterations of statistical analysis and feedback forms.
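The statistical feedback between Delphi rounds can be summarized numerically, as in the sketch below, which reports the median and interquartile range of the panel's estimates. The estimates are hypothetical, and the stopping rule (interquartile range within 20% of the median) is an assumed convention for illustration; the sketch does not model the group discussion that distinguishes Wideband Delphi.

```python
import statistics
from typing import Dict, List


def summarize_round(estimates: List[float]) -> Dict[str, float]:
    """Statistics fed back to the panel between Delphi rounds."""
    q1, _, q3 = statistics.quantiles(estimates, n=4)
    return {"median": statistics.median(estimates), "q1": q1, "q3": q3, "iqr": q3 - q1}


def converged(summary: Dict[str, float], rel_tolerance: float = 0.20) -> bool:
    """Hypothetical stopping rule: IQR no wider than 20% of the median."""
    return summary["iqr"] <= rel_tolerance * abs(summary["median"])


# Hypothetical effort estimates (staff-months) from five experts over two rounds.
rounds = [
    [20, 35, 50, 28, 90],
    [30, 34, 38, 32, 36],
]
for i, estimates in enumerate(rounds, start=1):
    s = summarize_round(estimates)
    print(f"round {i}: median={s['median']}, IQR={s['iqr']:.1f}, converged={converged(s)}")
```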

Making decisions is a key process practiced by systems engineers, project managers, and all team members. Sound decisions are based on good judgment and experience. There are concepts, methods, processes, and tools that can assist in the process of decision-making, especially in making comparisons of decision alternatives. These tools can also assist in building team consensus, in selecting and supporting the decision made, and in defending it to others. General tools, such as spreadsheets and simulation packages, can be used with these methods. There are also tools targeted specifically for aspects of decision analysis such as decision trees, evaluation of probabilities, Bayesian influence networks, and others. The International Council on Systems Engineering (INCOSE) tools database (INCOSE 2011, 1) has an extensive list of analysis tools.

Linkages to Other Systems Engineering Management Topics

Because a formal evaluation process is needed in many technical and management contexts, decision management is used in many other process areas and is closely coupled with other management areas. Risk Management in particular uses decision analysis methods for risk evaluation, mitigation decisions, and a formal evaluation process to address medium or high risks. The measurement process describes how to derive quantitative indicators as input to decisions. Project assessment and control uses decision results. Refer to the planning process area for more information about incorporating decision results into project plans.

Practical Considerations

Key pitfalls and good practices related to decision analysis are described below.

Pitfalls

Table 2 lists some of the key pitfalls.

| Name | Description |
|---|---|
| False Confidence | |
| No External Validation | |
| Errors and False Assumptions | |
| Impractical Application | |

Good Practices

Table 3 lists some good practices.

| Name | Description |
|---|---|
| Progressive Decision Modeling | |
| Necessary Measurements | |
| Define Selection Criteria | |

Additional good practices can be found in ISO/IEC/IEEE (2009, Clause 6.3) and INCOSE (2011, Section 5.3.1.5). Parnell, Driscoll, and Henderson (2008) provide a thorough overview.

References

Works Cited

Blanchard, B.S. 2004. Systems Engineering Management, 3rd ed. New York, NY, USA: John Wiley & Sons.

Boehm, B. 1981. "Software risk management: Principles and practices." IEEE Software 8(1) (January 1991): 32-41.

Cialdini, R.B. 2006. Influence: The Psychology of Persuasion. New York, NY, USA: Collins Business Essentials.

Detwarasiti, A. and R. D. Shachter. 2005. "Influence diagrams for team decision analysis." Decision Analysis 2(4): 207-28.

Gladwell, M. 2005. Blink: the Power of Thinking without Thinking. Boston, MA, USA: Little, Brown & Co.

Keeney, R.L. and H. Raiffa. 1976. Decisions with Multiple Objectives: Preferences and Value Tradeoffs. New York, NY, USA: John Wiley & Sons.

INCOSE. 2012. Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities, version 3.2.2. San Diego, CA, USA: International Council on Systems Engineering (INCOSE), INCOSE-TP-2003-002-03.2.2.

Law, A. 2007. Simulation Modeling and Analysis, 4th ed. New York, NY, USA: McGraw Hill.

Pugh, S. 1981. "Concept selection: a method that works". In Hubka, V. (ed.), Review of design methodology. Proceedings of the International Conference on Engineering Design, Rome, Italy, March 1981.

Rich, B. and L. Janos. 1996. Skunk Works. Boston, MA, USA: Little, Brown & Company.

Saaty, T.L. 2008. Decision Making for Leaders: The Analytic Hierarchy Process for Decisions in a Complex World. Pittsburgh, PA, USA: RWS Publications. ISBN 0-9620317-8-X.

Raiffa, H. 1997. Decision Analysis: Introductory Lectures on Choices under Uncertainty. New York, NY, USA: McGraw-Hill.

Samson, D. 1988. Managerial Decision Analysis. New York, NY, USA: Richard D. Irwin, Inc.

Schlaifer, R. 1969. Analysis of Decisions under Uncertainty. New York, NY, USA: McGraw-Hill Book Company.

Skinner, D. 1999. Introduction to Decision Analysis, 2nd ed. Sugar Land, TX, USA: Probabilistic Publishing.

Wikipedia contributors. "Decision making software." Wikipedia, The Free Encyclopedia. Accessed September 13, 2011. Available at: http://en.wikipedia.org/w/index.php?title=Decision_making_software&oldid=448914757.

Primary References

Forsberg, K., H. Mooz, and H. Cotterman. 2005. Visualizing Project Management, 3rd ed. Hoboken, NJ, USA: John Wiley & Sons. pp. 154-155.

Law, A. 2007. Simulation Modeling and Analysis, 4th ed. New York, NY, USA: McGraw Hill.

Raiffa, H. 1997. Decision Analysis: Introductory Lectures on Choices under Uncertainty. New York, NY, USA: McGraw-Hill.

Saaty, T.L. 2008. Decision Making for Leaders: The Analytic Hierarchy Process for Decisions in a Complex World. Pittsburgh, PA, USA: RWS Publications.

Samson, D. 1988. Managerial Decision Analysis. New York, NY, USA: Richard D. Irwin, Inc.

Schlaifer, R. 1969. Analysis of Decisions under Uncertainty. New York, NY, USA: McGraw-Hill.

Additional References

Blanchard, B.S. 2004. Systems Engineering Management, 3rd ed. New York, NY, USA: John Wiley & Sons.

Boehm, B. 1981. "Software risk management: Principles and practices." IEEE Software 8(1) (January 1991): 32-41.

Detwarasiti, A. and R. D. Shachter. 2005. "Influence diagrams for team decision analysis." Decision Analysis. 2(4): 207-28.

Keeney, R.L. and H. Raiffa. 1976. Decisions with Multiple Objectives: Preferences and Value Tradeoffs. New York, NY, USA: John Wiley & Sons.

Kepner, C.H. and B.B. Tregoe. 1965. The Rational Manager: A Systematic Approach to Problem Solving and Decision Making. New York, NY, USA: McGraw-Hill.

Parnell, G.S., P.J. Driscoll, and D.L. Henderson. 2011. Decision Making in Systems Engineering and Management, 2nd ed. New York, NY, USA: John Wiley & Sons.

Rich, B. and L. Janos. 1996. Skunk Works. Boston, MA, USA: Little, Brown & Company.

Skinner, D. 1999. Introduction to Decision Analysis, 2nd ed. Sugar Land, TX, USA: Probabilistic Publishing.
